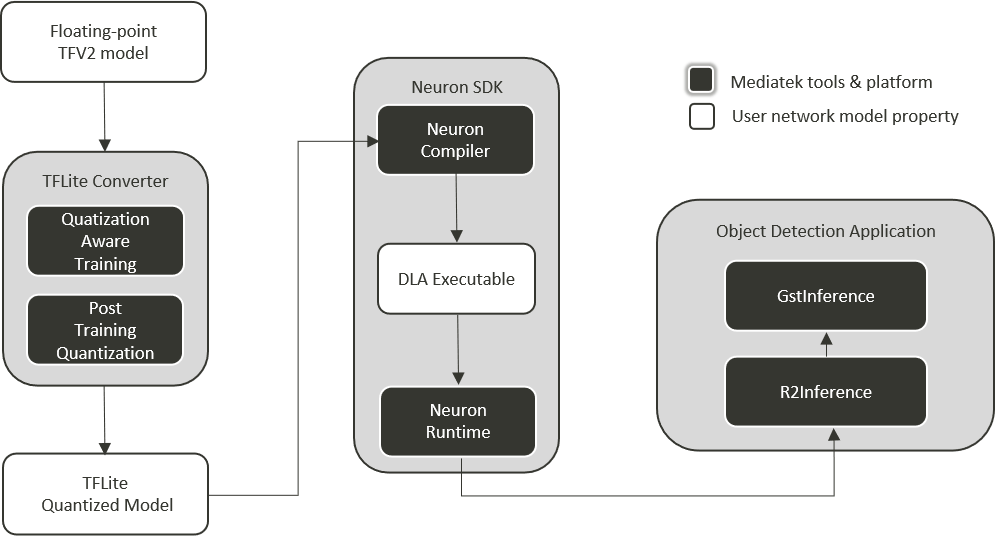

Model Converter

Overview

This page gives an overview of converting YOLOv5s PyTorch models to TensorFlow Lite (TFLite) format. It covers two conversion methods: an open-source converter script and the MTK Converter tool provided by the NeuroPilot SDK.

YOLOv5s Model Conversion

Open Source Converter:

Use the export.py script from the open-source YOLOv5 repository. The script uses the TensorFlow Lite Converter to convert the YOLOv5 model from PyTorch format to TensorFlow Lite format.

Source Link: YOLOv5 GitHub Repository
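As a rough illustration of the open-source flow, the sketch below builds the export.py command line from Python. It assumes the script is run from a checkout of the YOLOv5 repository with its requirements installed. The weights file name yolov5s.pt, the image size of 640, and the helper names build_export_command and export_to_tflite are illustrative choices, not values taken from this page.

```python
import subprocess
import sys


def build_export_command(weights="yolov5s.pt", img_size=640, int8=False):
    """Build the argument list for YOLOv5's export.py with TFLite as the target.

    The default weights file name and image size are illustrative assumptions.
    """
    cmd = [
        sys.executable, "export.py",
        "--weights", weights,
        "--include", "tflite",
        "--imgsz", str(img_size),
    ]
    if int8:
        # Ask export.py for INT8 quantization of the TFLite model.
        cmd.append("--int8")
    return cmd


def export_to_tflite(repo_dir, **kwargs):
    """Run export.py inside a YOLOv5 checkout located at repo_dir."""
    subprocess.run(build_export_command(**kwargs), cwd=repo_dir, check=True)


# Show the command without running it.
print(" ".join(build_export_command()))
```

Calling export_to_tflite with the path to your YOLOv5 checkout would run the export. Check the flags against the version of the repository you have checked out, since they can change between releases.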

Note

Open-source converters differ in the methods they provide, depending on the source. The instructions in this documentation focus specifically on the end-to-end flow for converting YOLOv5s models. For other models or converters, additional research and steps might be required.

NeuroPilot Converter tool:

Download the NeuroPilot SDK All-In-One Bundle and install the NeuroPilot Converter tool that matches your Python version. Then use the MTK converter to convert the YOLOv5 model from PyTorch to TensorFlow Lite format.

For detailed conversion steps, please refer to the Open Source Converter page and the NeuroPilot Converter tool page.

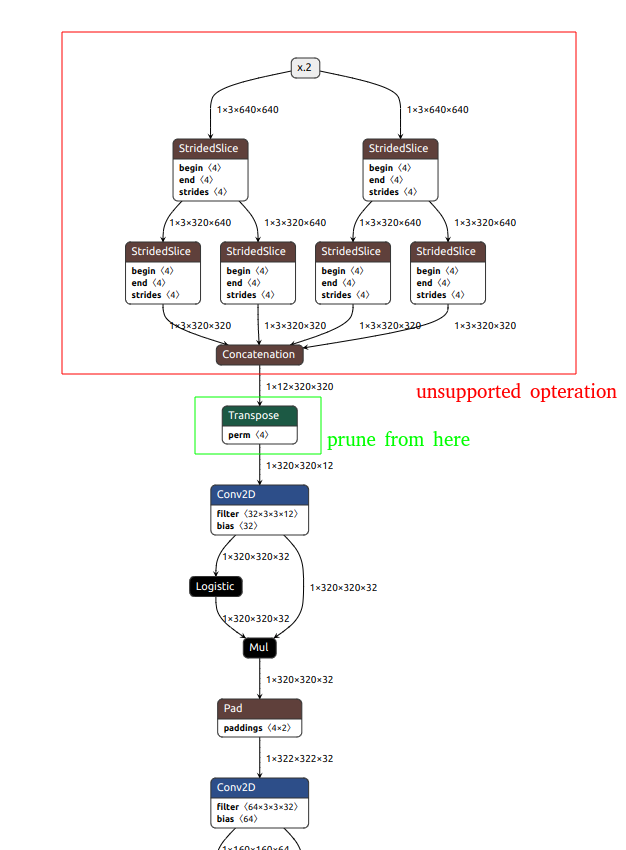

Unsupported Operations in DLA Conversion (Optional)

If you encounter “unsupported operation” errors when converting the TFLite model to DLA format, you may need to prune the model to remove or replace unsupported operations. The rest of this section shows an example error and then the steps to prune a TFLite model for DLA compatibility.

Example Error Message

Below is a detailed example of an “unsupported operation” error that may occur when attempting to convert a TFLite model to DLA format.

    ncc-tflite --arch=mdla3.0 yolox_s_quant.tflite
    OP[2]: STRIDED_SLICE
     ├ MDLA: Last stride size should be equal to 1
     ├ EDMA: unsupported operation
     ├ EDMA: unsupported operation
    OP[3]: STRIDED_SLICE
     ├ MDLA: Last stride size should be equal to 1
     ├ EDMA: unsupported operation
     ├ EDMA: unsupported operation
    OP[4]: STRIDED_SLICE
     ├ MDLA: Last stride size should be equal to 1
     ├ EDMA: unsupported operation
     ├ EDMA: unsupported operation
    OP[5]: STRIDED_SLICE
     ├ MDLA: Last stride size should be equal to 1
     ├ EDMA: unsupported operation
     ├ EDMA: unsupported operation
    ERROR: Cannot find an execution plan because of unsupported operations
    ERROR: Fail to compile yolox_s_quant.tflite

Note

In this example:

The STRIDED_SLICE operation appears four times (OP[2] through OP[5]), and each occurrence fails for the same stride-size reason.

For MDLA, the TFLite model does not meet the requirement “Last stride size should be equal to 1”, so the operation is unsupported in this context.

For EDMA, the STRIDED_SLICE operation is completely unsupported, leading to further execution failures.

As a result, the converter cannot generate a valid execution plan and ultimately fails to compile the model.

To resolve this issue, modify or prune the unsupported operations (such as STRIDED_SLICE) in the model, or adjust the stride size to meet the MDLA requirement. Either change should allow the conversion to DLA format to succeed.
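When a model reports many unsupported operations, it can help to summarize the compiler output before deciding what to prune. The following is a minimal sketch, assuming the ncc-tflite output has already been captured as text and follows the "OP[n]: TYPE" and "├ BACKEND: reason" layout of the example above. The function name parse_unsupported_ops and the shortened SAMPLE_LOG are hypothetical, introduced only for illustration.

```python
import re
from collections import defaultdict

# Shortened copy of the example output, used only to exercise the parser.
SAMPLE_LOG = """\
OP[2]: STRIDED_SLICE
 ├ MDLA: Last stride size should be equal to 1
 ├ EDMA: unsupported operation
 ├ EDMA: unsupported operation
OP[3]: STRIDED_SLICE
 ├ MDLA: Last stride size should be equal to 1
 ├ EDMA: unsupported operation
 ├ EDMA: unsupported operation
ERROR: Cannot find an execution plan because of unsupported operations
"""

OP_HEADER = re.compile(r"^OP\[(\d+)\]:\s*(\w+)")
REASON = re.compile(r"^\W*(\w+):\s*(.+)$")


def parse_unsupported_ops(log_text):
    """Map (op_index, op_type) to a list of (backend, reason) pairs."""
    result = defaultdict(list)
    current = None
    for raw in log_text.splitlines():
        line = raw.strip()
        header = OP_HEADER.match(line)
        if header:
            # A new operation starts; later reason lines belong to it.
            current = (int(header.group(1)), header.group(2))
            continue
        if line.startswith("ERROR"):
            # Summary errors are not tied to a single operation.
            current = None
            continue
        reason = REASON.match(line)
        if current is not None and reason:
            result[current].append((reason.group(1), reason.group(2).strip()))
    return dict(result)


for (index, op_type), reasons in sorted(parse_unsupported_ops(SAMPLE_LOG).items()):
    backends = sorted({backend for backend, _ in reasons})
    print(f"OP[{index}] {op_type}: rejected by {', '.join(backends)}")
```

Grouping by operation index makes it easier to look up the affected nodes in a model viewer. The parsing rules are tied to this log layout and may need adjusting for other compiler versions.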

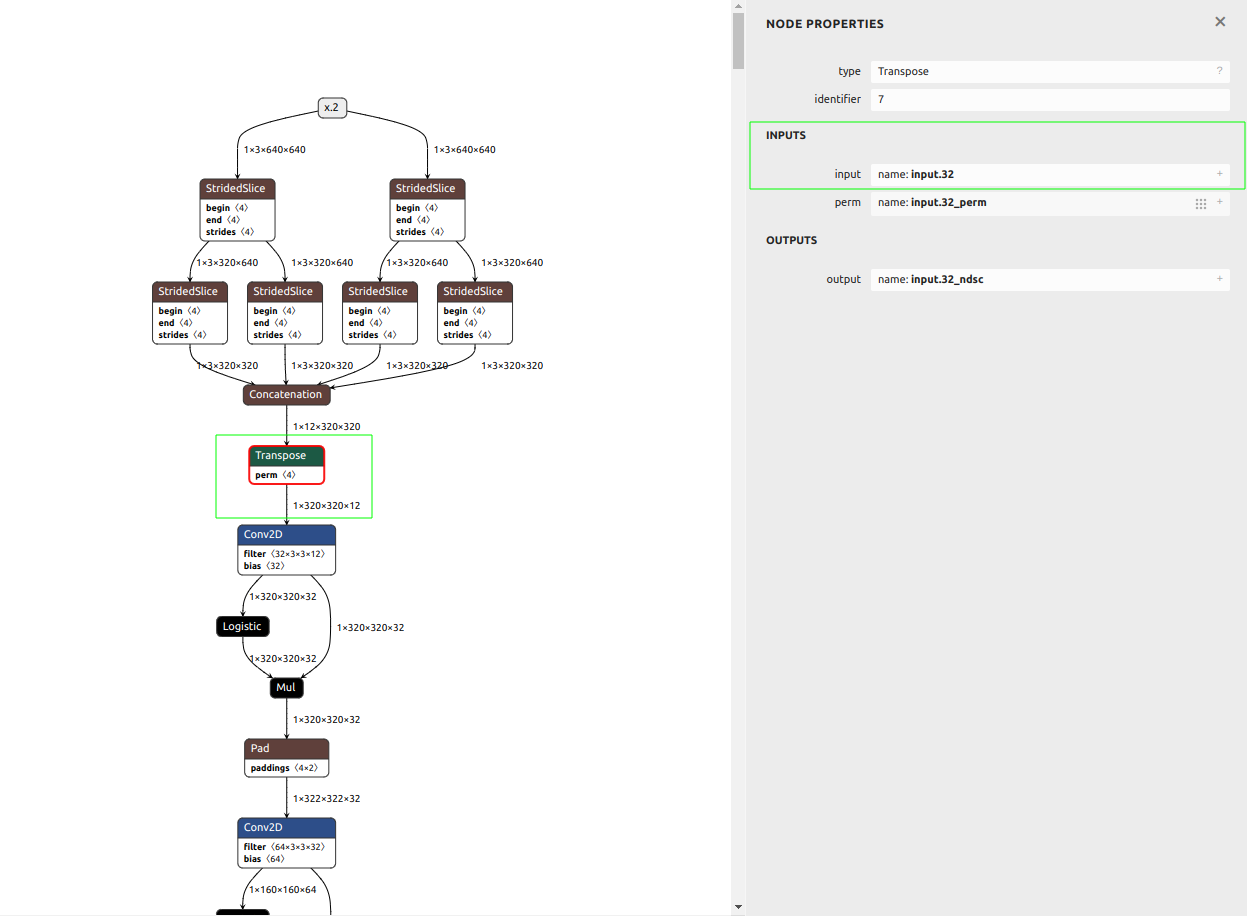

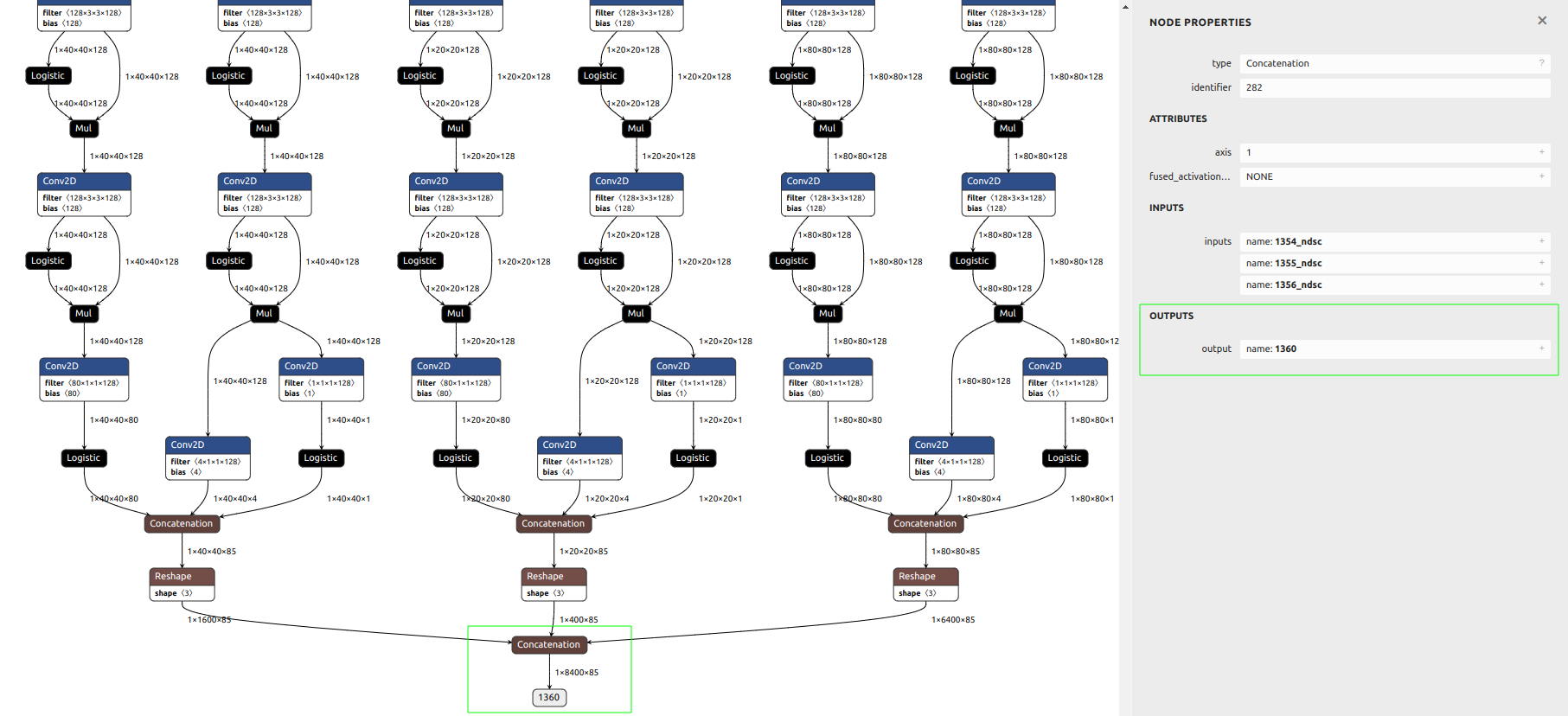

Inspect the model:

Use tools like Netron to inspect the model’s input/output tensors and internal layers. Identify any unsupported operations that need modification.
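As a scripted complement to a visual inspection, tensor names can also be listed from Python. The sketch below assumes the tensorflow package is installed and uses its TensorFlow Lite interpreter to read tensor metadata. The helper names load_tensor_details and find_tensors are hypothetical, and whether the listed names match what a given viewer displays should be checked against your own model.

```python
def load_tensor_details(model_path):
    """Read tensor metadata with the TensorFlow Lite interpreter."""
    # Imported lazily so find_tensors can be used without tensorflow installed.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    return interpreter.get_tensor_details()


def find_tensors(details, name_fragment):
    """Return (index, name, shape) for tensors whose name contains name_fragment."""
    return [
        (d["index"], d["name"], list(d["shape"]))
        for d in details
        if name_fragment in d["name"]
    ]
```

For example, find_tensors(load_tensor_details("yolox_s_quant.tflite"), "input") would list candidate tensors whose names contain "input", which can then be cross-checked in the viewer before choosing where to cut the graph.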

Prune the model:

Use the TFLiteEditor class from mtk_converter to prune the TFLite model by removing unsupported operations or modifying the model structure to ensure compatibility.

Example script to prune a model:

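    import mtk_converter

    # Load the TFLite model to be pruned.
    editor = mtk_converter.TFLiteEditor("yolox_s_quant.tflite")

    output_file = "yolox_s_quant_7-303.tflite"

    # Tensor names that become the inputs and outputs of the exported model.
    # These are specific to the example model.
    input_names = ["input.32"]
    output_names = ["1360"]

    # Write the pruned model to output_file.
    _ = editor.export(
        output_file=output_file,
        input_names=input_names,
        output_names=output_names,
    )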

Validate the pruned model:

After pruning the model, validate it by running inference and comparing results with the original model to ensure functionality is maintained.
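One way to quantify the comparison is to compute a few simple metrics on matching tensors. The sketch below assumes you have already obtained the same tensor from both models (for instance, the pruned model's output and the corresponding tensor in the original model) and flattened each to a plain list of numbers. How those values are extracted is not shown, and the function name compare_outputs is hypothetical.

```python
import math


def compare_outputs(reference, candidate):
    """Return (max absolute difference, cosine similarity) of two flat sequences."""
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same number of elements")
    max_abs_diff = max(
        (abs(r - c) for r, c in zip(reference, candidate)), default=0.0
    )
    dot = sum(r * c for r, c in zip(reference, candidate))
    norm_ref = math.sqrt(sum(r * r for r in reference))
    norm_cand = math.sqrt(sum(c * c for c in candidate))
    if norm_ref == 0.0 or norm_cand == 0.0:
        # Cosine similarity is undefined for an all-zero vector.
        cosine = float("nan")
    else:
        cosine = dot / (norm_ref * norm_cand)
    return max_abs_diff, cosine
```

A small maximum difference together with a cosine similarity close to 1 suggests the pruned model behaves like the original on that input. Acceptable thresholds depend on the model and its quantization, so treat any cutoff as a project-specific choice.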

Note

The input and output tensor names (input_names and output_names) used in the script are specific to the example model. You must modify them based on your own model’s structure.