Genio 510/700-EVK

MT8370 System on Chip

| Hardware | MT8370 |
|---|---|
| CPU | 2x CA78 2.0GHz, 4x CA55 2.0GHz |
| GPU | ARM G57 MC2 |
| NPU | MDLA3.0 700MHz |

Please refer to the Genio 510 SoC to find detailed specifications.

MT8390 System on Chip

| Hardware | MT8390 |
|---|---|
| CPU | 2x CA78 2.2GHz, 6x CA55 2.0GHz |
| GPU | ARM G57 MC3 |
| NPU | MDLA3.0 @900MHz |

Please refer to the Genio 700 SoC to find detailed specifications.

NPU

The MediaTek Neuron Processing Unit (NPU) is a high-performance hardware engine for deep-learning, optimized for bandwidth and power efficiency. The NPU architecture consists of big, small, and tiny cores. This highly heterogeneous design is suited for a wide variety of modern smartphone tasks, such as AI-camera, AI-assistant, and OS or in-app enhancements.

The new NPU 5.0 uses a cluster AI architecture and offers 3.6 TOPS.

Overview

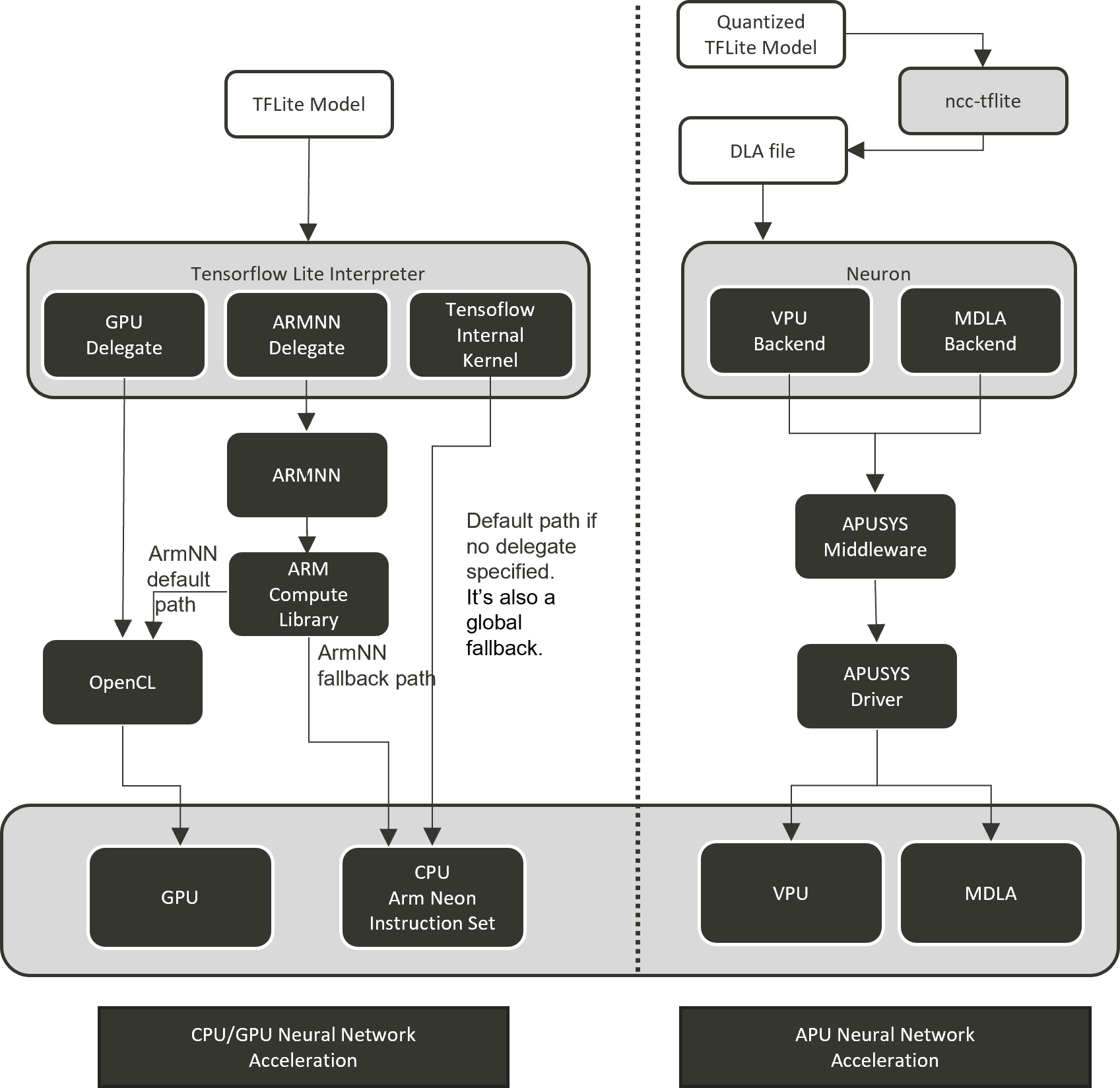

On Genio 700-EVK, we provide different software solutions to accelerate AI computing on the GPU and NPU.

GPU Neural Network Acceleration

We provide TensorFlow Lite with hardware acceleration to develop and deploy a wide range of machine learning models. By using TensorFlow Lite delegates, you can enable hardware acceleration of TFLite models by leveraging on-device accelerators such as the GPU and Digital Signal Processor (DSP).

IoT Yocto already integrates the TFLite GPU delegate:

GPU delegate: The GPU delegate uses OpenGL ES compute shaders on the device to run inference on TFLite models.

NPU Neural Network Acceleration

We introduce the MediaTek-proprietary Machine Learning solution: NeuroPilot on IoT Yocto on Genio 510/700-EVK.

NeuroPilot is a collection of software tools and APIs which are at the center of MediaTek’s AI ecosystem. With NeuroPilot, users can develop and deploy AI applications on edge devices with extremely high efficiency. This makes a wide variety of AI applications run faster, while also keeping data private.

On Genio 510/700-EVK, we support two NPU acceleration paths: the online inference path, Neuron Stable Delegate, and the offline inference path, Neuron SDK, which is part of the NeuroPilot software collection.

The Neuron Stable Delegate is the MTK Neuron delegate, implemented with the stable delegate interface provided by TensorFlow Lite.

Neuron SDK provides a Neuron compiler (ncc-tflite) to convert TFLite models to MediaTek-proprietary binaries (DLA, Deep Learning Archive) for deployment on MediaTek platforms. The resulting models are highly efficient, with reduced latency and a smaller memory footprint. Neuron SDK also provides the Neuron Run-time API, a set of APIs that users can invoke from within a C/C++ program to create a run-time environment, parse a compiled model file, and perform on-device network inference.

Important

As of the Yocto v24.0 release (6/2024), the official TensorFlow website still marks Stable Delegate as an experimental API.

In the v24.0 release, the stable delegate is a demo-only feature. Its functional correctness is not officially supported.

Machine learning software stack on Genio 700-EVK

Note

Software information, command operations, and test results presented in this chapter are based on the latest version of IoT Yocto (v24.0) on Genio 700-EVK.

Tensorflow Lite and Delegates

IoT Yocto integrates TensorFlow Lite to provide GPU neural network acceleration.

The software version is as follows:

| Component | Version | Support Operations |
|---|---|---|
| TensorFlow Lite | 2.17.0 | |

Note

If you need to use TensorFlow Lite with XNNPACK, set tflite_with_xnnpack to true in the following file: t/src/meta-nn/recipes-tensorflow/tensorflow-lite/tensorflow-lite_%.bbappend, then rebuild the TensorFlow Lite package.

CUSTOM_BAZEL_FLAGS += " --define tflite_with_xnnpack=true "

Supported Operations

| TFLite 2.10.0 | Arm NN 23.02 |
|---|---|
| abs | ABS |
| add | ADD |
| add_n | |
| arg_max | ARGMAX |
| arg_min | ARGMIN |
| assign_variable | |
| average_pool_2d | AVERAGE_POOL_2D |
| AVERAGE_POOL_3D | |
| basic_lstm | |
| batch_matmul | BATCH_MATMUL |
| batch_to_space_nd | BATCH_TO_SPACE_ND |
| bidirectional_sequence_lstm | |
| broadcast_args | |
| broadcast_to | |
| bucketize | |
| call_once | |
| cast | CAST |
| ceil | |
| complex_abs | |
| concatenation | CONCATENATION |
| control_node | |
| conv_2d | CONV_2D |
| conv_3d | CONV_3D |
| conv_3d_transpose | |
| cos | |
| cumsum | |
| custom | |
| custom_tf | |
| densify | |
| depth_to_space | DEPTH_TO_SPACE |
| depthwise_conv_2d | DEPTHWISE_CONV_2D |
| dequantize | DEQUANTIZE |
| div | DIV |
| dynamic_update_slice | |
| elu | ELU |
| embedding_lookup | |
| equal | EQUAL |
| exp | EXP |
| expand_dims | EXPAND_DIMS |
| external_const | |
| fake_quant | |
| fill | FILL |
| floor | FLOOR |
| floor_div | FLOOR_DIV |
| floor_mod | |
| fully_connected | FULLY_CONNECTED |
| gather | GATHER |
| gather_nd | GATHER_ND |
| gelu | |
| greater | GREATER |
| greater_equal | GREATER_EQUAL |
| hard_swish | HARD_SWISH |
| hashtable | |
| hashtable_find | |
| hashtable_import | |
| hashtable_size | |
| if | |
| imag | |
| l2_normalization | L2_NORMALIZATION |
| L2_POOL_2D | |
| leaky_relu | |
| less | LESS |
| less_equal | LESS_OR_EQUAL |
| local_response_normalization | LOCAL_RESPONSE_NORMALIZATION |
| log | LOG |
| log_softmax | LOG_SOFTMAX |
| logical_and | LOGICAL_AND |
| logical_not | LOGICAL_NOT |
| logical_or | LOGICAL_OR |
| logistic | LOGISTIC |
| lstm | LSTM |
| matrix_diag | |
| matrix_set_diag | |
| max_pool_2d | MAX_POOL_2D |
| MAX_POOL_3D | |
| maximum | MAXIMUM |
| mean | MEAN |
| minimum | MINIMUM |
| mirror_pad | MIRROR_PAD |
| mul | MUL |
| multinomial | |
| neg | NEG |
| no_value | |
| non_max_suppression_v4 | |
| non_max_suppression_v5 | |
| not_equal | NOT_EQUAL |
| NumericVerify | |
| one_hot | |
| pack | PACK |
| pad | PAD |
| padv2 | |
| poly_call | |
| pow | |
| prelu | PRELU |
| pseudo_const | |
| pseudo_qconst | |
| pseudo_sparse_const | |
| pseudo_sparse_qconst | |
| quantize | QUANTIZE |
| random_standard_normal | |
| random_uniform | |
| range | |
| rank | RANK |
| read_variable | |
| real | |
| reduce_all | |
| reduce_any | |
| reduce_max | REDUCE_MAX |
| reduce_min | REDUCE_MIN |
| reduce_prod | REDUCE_PROD |
| relu | RELU |
| relu6 | RELU6 |
| relu_n1_to_1 | RELU_N1_TO_1 |
| reshape | RESHAPE |
| resize_bilinear | RESIZE_BILINEAR |
| resize_nearest_neighbor | RESIZE_NEAREST_NEIGHBOR |
| reverse_sequence | |
| reverse_v2 | |
| rfft2d | |
| round | |
| rsqrt | RSQRT |
| scatter_nd | |
| segment_sum | |
| select | |
| select_v2 | |
| shape | SHAPE |
| sin | SIN |
| slice | |
| softmax | SOFTMAX |
| space_to_batch_nd | SPACE_TO_BATCH_ND |
| space_to_depth | SPACE_TO_DEPTH |
| sparse_to_dense | |
| split | SPLIT |
| split_v | SPLIT_V |
| sqrt | SQRT |
| square | |
| squared_difference | |
| squeeze | SQUEEZE |
| strided_slice | STRIDED_SLICE |
| sub | SUB |
| sum | SUM |
| svdf | |
| tanh | TANH |
| tile | |
| topk_v2 | |
| transpose | TRANSPOSE |
| transpose_conv | TRANSPOSE_CONV |
| unidirectional_sequence_lstm | UNIDIRECTIONAL_SEQUENCE_LSTM |
| unidirectional_sequence_rnn | |
| unique | |
| unpack | UNPACK |
| unsorted_segment_max | |
| unsorted_segment_prod | |
| unsorted_segment_sum | |
| var_handle | |
| where | |
| while | |
| yield | |
| zeros_like | |

Demo

A Python demo application for image recognition is built into the image and can be found in the /usr/share/label_image directory. It is adapted from the upstream label_image.py.

cd /usr/share/label_image

ls -l

-rw-r--r-- 1 root root 940650 Mar 9 2018 grace_hopper.bmp

-rw-r--r-- 1 root root 61306 Mar 9 2018 grace_hopper.jpg

-rw-r--r-- 1 root root 10479 Mar 9 2018 imagenet_slim_labels.txt

-rw-r--r-- 1 root root 95746802 Mar 9 2018 inception_v3_2016_08_28_frozen.pb

-rw-r--r-- 1 root root 4388 Mar 9 2018 label_image.py

-rw-r--r-- 1 root root 10484 Mar 9 2018 labels_mobilenet_quant_v1_224.txt

-rw-r--r-- 1 root root 4276352 Mar 9 2018 mobilenet_v1_1.0_224_quant.tflite

Basic commands for running the demo with different delegates are as follows.

Execute on CPU

cd /usr/share/label_image

python3 label_image.py --label_file labels_mobilenet_quant_v1_224.txt --image grace_hopper.jpg --model_file mobilenet_v1_1.0_224_quant.tflite

Execute on GPU, with GPU delegate

cd /usr/share/label_image

python3 label_image.py --label_file labels_mobilenet_quant_v1_224.txt --image grace_hopper.jpg --model_file mobilenet_v1_1.0_224_quant.tflite -e /usr/lib/gpu_external_delegate.so
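As a rough illustration of what the `-e` option amounts to, the sketch below loads an external delegate library and hands it to the interpreter. It assumes the `tflite_runtime` Python package is what the image provides (the import name may differ on your build). `make_interpreter` and its `tflite` parameter are hypothetical names introduced here and are not part of label_image.py.

```python
def make_interpreter(model_path, delegate_path=None, tflite=None):
    """Create a TFLite interpreter, optionally with an external delegate.

    `tflite` lets a caller inject the module to use; by default the
    tflite_runtime package is imported lazily (assumption: it is installed).
    """
    if tflite is None:
        import tflite_runtime.interpreter as tflite  # assumed package name

    delegates = None
    if delegate_path:
        # load_delegate() opens the shared library implementing the delegate.
        delegates = [tflite.load_delegate(delegate_path)]

    interpreter = tflite.Interpreter(
        model_path=model_path, experimental_delegates=delegates
    )
    interpreter.allocate_tensors()
    return interpreter


# Example call on the board (paths taken from the commands above):
# interp = make_interpreter(
#     "/usr/share/label_image/mobilenet_v1_1.0_224_quant.tflite",
#     "/usr/lib/gpu_external_delegate.so",
# )
```

Omitting `delegate_path` leaves the model on the CPU, which mirrors the first command in this section.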

Note

As of the Yocto v25.0 release, TensorFlow still provides no official Python binding for Stable Delegate, so there is no Python demo for Stable Delegate here.

Benchmark Tool

benchmark_model, described in Tensorflow Performance Measurement, is provided for performance evaluation.

Basic commands for running the benchmark tool with CPU and different delegates are as follows.

Execute on CPU (8 threads):

benchmark_model --graph=/usr/share/label_image/mobilenet_v1_1.0_224_quant.tflite --num_threads=8 --num_runs=10

Execute on GPU, with GPU delegate:

benchmark_model --graph=/usr/share/label_image/mobilenet_v1_1.0_224_quant.tflite --use_gpu=1 --gpu_precision_loss_allowed=1 --num_runs=10

Execute on NPU, with Neuron Delegate:

benchmark_model --stable_delegate_settings_file=/usr/share/label_image/stable_delegate_settings.json --use_nnapi=false --use_xnnpack=false --use_gpu=false --min_secs=20 --graph=/usr/share/label_image/mobilenet_v1_1.0_224_quant.tflite
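When the three variants need to be run repeatedly, it can help to assemble the command lines in one place. The sketch below is only an illustration: it reuses the flags and paths from the commands above, while the helper name `benchmark_argv` and the target names `cpu`, `gpu`, and `npu` are assumptions made for this example.

```python
import shlex

MODEL = "/usr/share/label_image/mobilenet_v1_1.0_224_quant.tflite"
SETTINGS = "/usr/share/label_image/stable_delegate_settings.json"


def benchmark_argv(target, graph=MODEL):
    """Return the benchmark_model argument list for 'cpu', 'gpu' or 'npu'."""
    if target == "cpu":
        opts = ["--num_threads=8", "--num_runs=10"]
    elif target == "gpu":
        opts = ["--use_gpu=1", "--gpu_precision_loss_allowed=1", "--num_runs=10"]
    elif target == "npu":
        opts = [
            f"--stable_delegate_settings_file={SETTINGS}",
            "--use_nnapi=false",
            "--use_xnnpack=false",
            "--use_gpu=false",
            "--min_secs=20",
        ]
    else:
        raise ValueError(f"unknown target: {target!r}")
    return ["benchmark_model", f"--graph={graph}", *opts]


# Print the command lines; nothing is executed here.
for target in ("cpu", "gpu", "npu"):
    print(shlex.join(benchmark_argv(target)))
```

On the board, each list could be passed to `subprocess.run(..., check=True)` to run the tool.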

Neuron SDK

On Genio 510/700-EVK, IoT Yocto supports Neuron SDK, which is part of the MediaTek NeuroPilot software collection. Neuron SDK provides a Neuron compiler (ncc-tflite) to convert TFLite models to MediaTek-proprietary binaries (DLA, Deep Learning Archive) for deployment on MediaTek platforms. Neuron SDK also provides the Neuron Run-time API, a set of APIs that users can invoke from within a C/C++ program to create a run-time environment, parse a compiled model file, and perform on-device network inference. Please refer to the Neuron SDK chapter for all supporting details.

Supported Operations

Refer to Supported Operations to find all the neural network operations supported by Neuron SDK, and any restrictions placed on their use.

Note

Different compute devices may have restrictions on supported operations. These restrictions are a function of:

- Op type
- Op parameters (e.g. kernel dimensions and modifiers, such as stride)
- Tensor dimensions (both input and output)
- SoC platform
- Numeric format, both data type and quantization method

Each device has its own guidelines and restrictions.

Demo

A Python demo application for image recognition is built into the image and can be found in the /usr/share/demo_dla directory.

cd /usr/share/demo_dla

ls -l

-rw-r--r-- 1 root root 61306 Mar 9 2018 grace_hopper.jpg

-rw-r--r-- 1 root root 10479 Mar 9 2018 imagenet_slim_labels.txt

-rw-r--r-- 1 root root 1402 Mar 9 2018 label_image.py

-rw-r--r-- 1 root root 4276352 Mar 9 2018 mobilenet_v1_1.0_224_quant.tflite

Run the demo with the command python3 label_image.py. The demo program converts mobilenet_v1_1.0_224_quant.tflite into a DLA file, then runs inference on the NPU to classify the image grace_hopper.jpg. Finally, it prints the image classification result, which should be “military uniform”.

cd /usr/share/demo_dla

python3 label_image.py

/usr/share/demo_dla/mobilenet_v1_1.0_224_quant.dla

/usr/share/demo_dla/grace_hopper.bin

WARNING: dlopen failed: libcmdl_ndk.mtk.vndk.so and libcmdl_ndk.mtk.so not found

WARNING: CmdlLibManager cannot get dlopen handle.

[apusys][info]apusysSession: Session(0xaaaaf9d4eb80): thd(runtime_api_sam) version(3) log(0)

The required size of the input buffer is 150528

The required size of the output buffer is 1001

[apusys][info]run: Cmd v2(0xaaaaf9d737e0): run

[apusys][info]run: Cmd v2(0xaaaaf9d737e0): run done(0)

The top index is 653

The image: military uniform
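The buffer sizes and the top index in the log above can be related to the model with a little arithmetic. The sketch below assumes the quantized MobileNet takes a 224x224x3 input with one byte per element and returns one byte per class (1001 classes); the helper names are hypothetical and this is not the code used by the demo's label_image.py.

```python
INPUT_SHAPE = (224, 224, 3)  # height, width, channels (assumed uint8 input)


def buffer_size(shape, bytes_per_element=1):
    """Number of bytes needed for a dense tensor of the given shape."""
    size = bytes_per_element
    for dim in shape:
        size *= dim
    return size


def top_index(output):
    """Index of the largest score in a raw output buffer (first one on ties)."""
    return max(range(len(output)), key=output.__getitem__)


def label_for(output, labels):
    """Map the top-scoring index to its label text."""
    return labels[top_index(output)]


print(buffer_size(INPUT_SHAPE))  # matches the required input buffer size in the log
```

With the labels file loaded as a list of lines, `label_for(output, labels)` is the lookup that turns the top index into the printed class name.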

Benchmark Tool

A Python application for benchmarking is built into the image and can be found in the /usr/share/benchmark_dla directory.

cd /usr/share/benchmark_dla

ls -l

-rw-r--r-- 1 root root 26539112 Mar 9 2018 ResNet50V2_224_1.0_quant.tflite

-rw-r--r-- 1 root root 9020 Mar 9 2018 benchmark.py

-rw-r--r-- 1 root root 23942928 Mar 9 2018 inception_v3_quant.tflite

-rw-r--r-- 1 root root 3577760 Mar 9 2018 mobilenet_v2_1.0_224_quant.tflite

-rw-r--r-- 1 root root 6885840 Mar 9 2018 ssd_mobilenet_v1_coco_quantized.tflite

Run the benchmark with the command python3 benchmark.py --auto. It finds all TFLite models in /usr/share/benchmark_dla, compiles them into DLA files, and then runs inference on the NPU. Finally, the benchmark result is saved in /usr/share/benchmark_dla/benchmark.log.

cd /usr/share/benchmark_dla

# run benchmark to evaluate inference time of each model in the current folder

python3 benchmark.py --auto

# check inference time of each model

cat benchmark.log

[INFO] inception_v3_quant.tflite, mdla3.0, : 7.36

[INFO] inception_v3_quant.tflite, vpu, : 75.57

[INFO] mobilenet_v2_1.0_224_quant.tflite, mdla3.0, : 2.48

[INFO] mobilenet_v2_1.0_224_quant.tflite, vpu, : 18.58

[INFO] ssd_mobilenet_v1_coco_quantized.tflite, mdla3.0, : 3.28

[INFO] ssd_mobilenet_v1_coco_quantized.tflite, vpu, : 24.06

[INFO] ResNet50V2_224_1.0_quant.tflite, mdla3.0, : 7.37

[INFO] ResNet50V2_224_1.0_quant.tflite, vpu, : 61.09
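To compare devices across models, the log can be parsed into a small table. The sketch below is an assumption-laden example: it relies only on the line format visible in the output above, the function name `parse_benchmark_log` is hypothetical, and the sample lines are copied from that output.

```python
import re
from collections import defaultdict

# One log line looks like: "[INFO] <model>, <device>, : <value>"
LINE_RE = re.compile(
    r"^\[INFO\]\s+(?P<model>\S+?),\s*(?P<device>[^,]+?),\s*:\s*"
    r"(?P<value>\d+(?:\.\d+)?)\s*$"
)


def parse_benchmark_log(text):
    """Return {model: {device: value}} for every line that matches the format."""
    results = defaultdict(dict)
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            results[match["model"]][match["device"]] = float(match["value"])
    return dict(results)


SAMPLE = """\
[INFO] inception_v3_quant.tflite, mdla3.0, : 7.36
[INFO] inception_v3_quant.tflite, vpu, : 75.57
[INFO] mobilenet_v2_1.0_224_quant.tflite, mdla3.0, : 2.48
[INFO] mobilenet_v2_1.0_224_quant.tflite, vpu, : 18.58
"""

for model, by_device in parse_benchmark_log(SAMPLE).items():
    ratio = by_device["vpu"] / by_device["mdla3.0"]
    print(f"{model}: vpu/mdla3.0 = {ratio:.2f}")
```

On the board, the same function could be fed the contents of /usr/share/benchmark_dla/benchmark.log instead of the sample string.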

Benchmark Result

The following tables show the benchmark results under performance mode.

| Run model (.tflite) 10 times | CPU (Thread:6) | GPU | ARMNN(GpuAcc) | ARMNN(CpuAcc) | Neuron Stable | APU(MDLA 3.0) | APU(VPU) |
|---|---|---|---|---|---|---|---|
| inception_v3 | 191.437 | 201.637 | 159.532 | 147.306 | 30.31 | 31.03 | |
| inception_v3_quant | 50.539 | 201.95 | 96.468 | 57.731 | 8.77 | 9.03 | 74 |
| mobilenet_v2_1.0.224 | 17.838 | 17.315 | 18.876 | 15.901 | 3.74 | 4.03 | |
| mobilenet_v2_1.0.224_quant | 9.242 | 18.295 | 17.48 | 7.694 | 1.32 | 1.03 | 16.84 |
| ResNet50V2_224_1.0 | 138.652 | 124.196 | 111.373 | 105.967 | 20.83 | 17.03 | |
| ResNet50V2_224_1.0_quant | 49.844 | 132.14 | 57.396 | 45.084 | 8.07 | 8.03 | 59 |
| ssd_mobilenet_v1_coco | 48.701 | 59.195 | 51.912 | 50.589 | 9.53 | 9.82 | |
| ssd_mobilenet_v1_coco_quantized | 16.486 | 96.471 | 34.951 | 54.439 | 2.737 | 3.03 | 22.82 |

| Run model (.tflite) 10 times | CPU (Thread:8) | GPU | ARMNN(GpuAcc) | ARMNN(CpuAcc) | Neuron Stable | APU(MDLA 3.0) | APU(VPU) |
|---|---|---|---|---|---|---|---|
| inception_v3 | 156.248 | 143.57 | 116.181 | 132.536 | 22.27 | 22.05 | |
| inception_v3_quant | 42.495 | 144.438 | 71.638 | 55.184 | 6.41 | 6.05 | 74 |
| mobilenet_v2_1.0.224 | 14.178 | 14.432 | 15.356 | 14.428 | 2.59 | 1.04 | |
| mobilenet_v2_1.0.224_quant | 9.483 | 14.766 | 13.587 | 7.448 | 0.98 | 2.04 | 16.07 |
| ResNet50V2_224_1.0 | 114.532 | 95.004 | 89.524 | 101.784 | 15.02 | 15.04 | |
| ResNet50V2_224_1.0_quant | 43.794 | 96.891 | 44.153 | 42.934 | 5.95 | 6.04 | 59 |
| ssd_mobilenet_v1_coco | 37.143 | 44.602 | 36.966 | 50.589 | 6.69 | 6.97 | |
| ssd_mobilenet_v1_coco_quantized | 11.934 | 46.8 | 25.645 | 16.725 | 2.14 | 2.04 | 23 |

Performance Mode

Force CPU, GPU, NPU to run at maximum frequency.

CPU at maximum frequency

Command to set performance mode for CPU governor.

echo performance > /sys/devices/system/cpu/cpufreq/policy0/scaling_governor

echo performance > /sys/devices/system/cpu/cpufreq/policy6/scaling_governor

Disable CPU idle

Command to disable CPU idle.

On Genio 510-EVK

for j in 2 1 0; do for i in 5 4 3 2 1 0 ; do echo 1 > /sys/devices/system/cpu/cpu$i/cpuidle/state$j/disable ; done ; done

On Genio 700-EVK

for j in 2 1 0; do for i in 7 6 5 4 3 2 1 0 ; do echo 1 > /sys/devices/system/cpu/cpu$i/cpuidle/state$j/disable ; done ; done

GPU at maximum frequency

Please refer to Adjust GPU Frequency to fix GPU to run at maximum frequency.

Alternatively, set the GPU governor to performance mode, which statically pins the GPU to its highest frequency.

echo performance > /sys/devices/platform/soc/13000000.gpu/devfreq/13000000.gpu/governor

NPU Performance Hints

The NPU operates in performance mode by default, so no adjustments are necessary. To reduce performance, refer to the QoS Tuning Flow and set lower qos.boostValue values.

If you suspect a performance issue related to NPUSYS frequency, use the following DebugFS node to force NPUSYS to run at the highest operating points. Then, compare the actual model inference time for any differences:

echo dvfs_debug 0 > /sys/kernel/debug/apusys/power

Warning

Note that this is a debug feature and should not be used in production images. As a general guideline, kernel DebugFS should be disabled in production environments.

Disable thermal

echo 115000 > /sys/class/thermal/thermal_zone0/trip_point_0_temp

echo 115000 > /sys/class/thermal/thermal_zone0/trip_point_1_temp

echo 115000 > /sys/class/thermal/thermal_zone0/trip_point_2_temp
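The CPU, GPU, and thermal commands in this section can be collected into one script. The following is a minimal sketch under stated assumptions: the sysfs paths and values are the ones shown above, the helper names and the `root` parameter (useful for a dry run against a scratch directory) are hypothetical, and the NPU DebugFS write is deliberately left out because it is a debug-only feature. Writing to the real nodes requires root privileges.

```python
import os


def performance_mode_writes(num_cpus, policies=(0, 6), idle_states=(0, 1, 2)):
    """List (sysfs path, value) pairs mirroring the commands in this section.

    num_cpus is 6 on Genio 510-EVK and 8 on Genio 700-EVK, per the loops above.
    """
    writes = []
    for policy in policies:
        node = f"/sys/devices/system/cpu/cpufreq/policy{policy}/scaling_governor"
        writes.append((node, "performance"))
    for state in idle_states:
        for cpu in range(num_cpus):
            node = f"/sys/devices/system/cpu/cpu{cpu}/cpuidle/state{state}/disable"
            writes.append((node, "1"))
    gpu = "/sys/devices/platform/soc/13000000.gpu/devfreq/13000000.gpu/governor"
    writes.append((gpu, "performance"))
    for trip in range(3):
        node = f"/sys/class/thermal/thermal_zone0/trip_point_{trip}_temp"
        writes.append((node, "115000"))
    return writes


def apply_writes(writes, root="/"):
    """Write each value under root; return the paths that do not exist there."""
    missing = []
    for path, value in writes:
        target = os.path.join(root, path.lstrip("/"))
        if not os.path.exists(target):
            missing.append(path)  # skip nodes this board does not expose
            continue
        with open(target, "w") as node:
            node.write(value)
    return missing
```

Calling `apply_writes(performance_mode_writes(8))` would correspond to the Genio 700-EVK commands. The returned list shows which nodes were skipped, for example when a policy number does not exist on the board in use.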