Accuracy Comparison

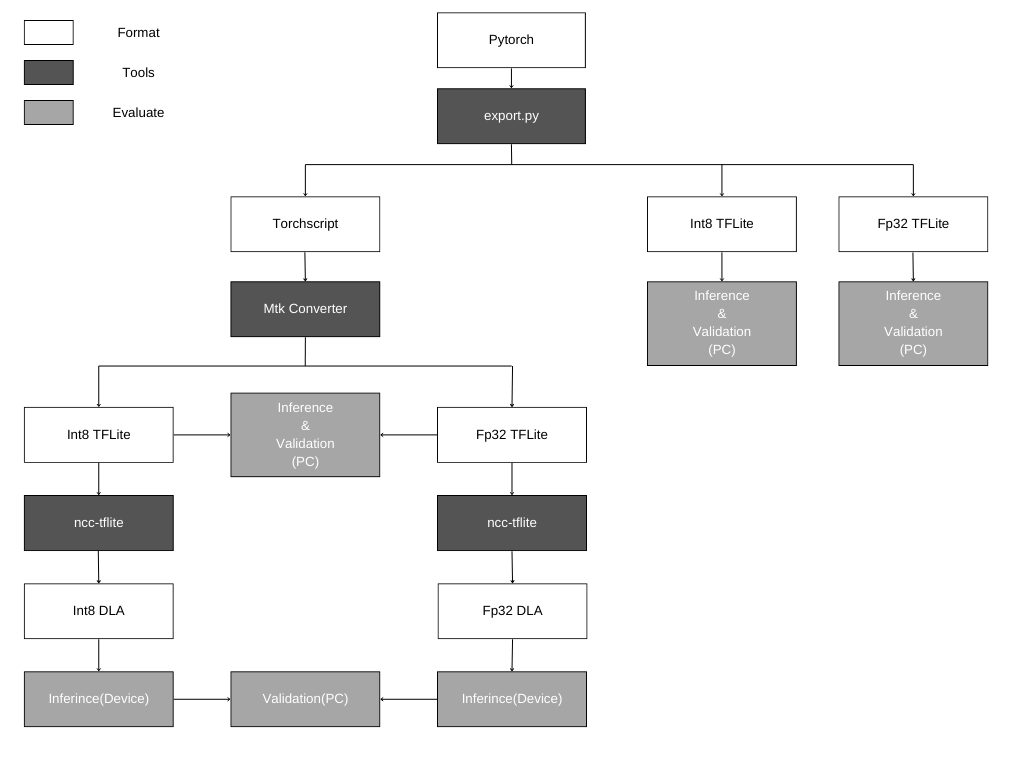

Overview

This page provides a comprehensive comparison of the accuracy of YOLOv5s models across various formats and conversion processes. It includes:

- Validation metrics for the original PyTorch model and its TFLite versions with different quantizations.
- Evaluation results for the TFLite models produced by the MTK Converter Tool.
- Accuracy metrics for the DLA models evaluated on devices.

Section

- Source Model (on PC)
  - PyTorch Model Validation
  - INT8 TFLite Model Validation (Source)
  - FP32 TFLite Model Validation (Source)
- TFLite Model After MTK Converter Tool (on PC)
  - INT8 TFLite Model Validation (MTK)
  - FP32 TFLite Model Validation (MTK)
- DLA Model Evaluation (On Devices)
  - INT8 DLA Model Validation (MTK)
  - FP32 DLA Model Validation (MTK)

Accuracy Comparison

| Model Validation Type | PyTorch Model (PC) | INT8 TFLite (Source, PC) | FP32 TFLite (Source, PC) | INT8 TFLite (MTK, PC) | FP32 TFLite (MTK, PC) | INT8 DLA (MTK, Device) | FP32 DLA (MTK, Device) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| P (Precision) | 0.709 | 0.723 | 0.669 | 0.666 | 0.669 | 0.633 | 0.667 |
| R (Recall) | 0.634 | 0.583 | 0.661 | 0.632 | 0.661 | 0.652 | 0.661 |
| mAP@50 | 0.713 | 0.675 | 0.712 | 0.799 | 0.699 | 0.698 | 0.712 |
| mAP@50-95 | 0.475 | 0.416 | 0.472 | 0.458 | 0.458 | 0.459 | 0.472 |
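To make the comparison easier to read, the following sketch computes each variant's change in mAP@50-95 relative to the PyTorch baseline. The values are copied from the table in this section; the dictionary layout and the helper name `delta_vs_baseline` are illustrative choices and not part of the original tooling.

```python
# mAP@50-95 values copied from the comparison table.
MAP_50_95 = {
    "PyTorch (PC)": 0.475,
    "INT8 TFLite (Source, PC)": 0.416,
    "FP32 TFLite (Source, PC)": 0.472,
    "INT8 TFLite (MTK, PC)": 0.458,
    "FP32 TFLite (MTK, PC)": 0.458,
    "INT8 DLA (MTK, Device)": 0.459,
    "FP32 DLA (MTK, Device)": 0.472,
}


def delta_vs_baseline(scores, baseline="PyTorch (PC)"):
    """Return {name: score - baseline_score}, rounded to 3 decimals."""
    base = scores[baseline]
    return {name: round(value - base, 3) for name, value in scores.items()}


if __name__ == "__main__":
    for name, delta in delta_vs_baseline(MAP_50_95).items():
        print(f"{name:26s} {delta:+.3f}")
```

On these numbers, the source INT8 TFLite export shows the largest drop (0.059), while every other variant stays within 0.017 of the baseline.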

End-to-End Conversion Flow and Accuracy Validation

This section provides detailed steps and results for verifying the accuracy of YOLOv5s models in different formats and after various conversion processes.

Source Model (on PC)

PyTorch Model Validation:

Clone The Repository:

    git clone http://github.com/ultralytics/yolov5
    cd yolov5
    git reset --hard 485da42
    pip install -r requirements.txt

Validate Model:

python val.py --weights yolov5s.pt --data coco128.yaml --img 640

Note

Description of the parameters used in the above command:

--weights: Specifies the model weight file to load.

--data: Specifies the data configuration file.

--img: Specifies the input image size.

Result:

| Metric | Value |
| --- | --- |
| P (Precision) | 0.709 |
| R (Recall) | 0.634 |
| mAP@50 (Mean Average Precision at IoU=0.50) | 0.713 |
| mAP@50-95 (Mean Average Precision at IoU=0.50:0.95) | 0.475 |

Note

Description of the metrics in the result table:

P (Precision): The fraction of the model's positive predictions that are true positives.

R (Recall): The fraction of actual positives that the model detects as true positives.

mAP@50 (Mean Average Precision at IoU=0.50): The mean average precision calculated at an Intersection over Union (IoU) threshold of 0.50.

mAP@50-95 (Mean Average Precision at IoU=0.50:0.95): The mean average precision averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05.
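The metric definitions above can be made concrete with a small sketch. The counts and boxes below are made-up illustrative values and the helper names are hypothetical; YOLOv5's `val.py` computes these metrics with its own, more involved logic.

```python
def precision(tp, fp):
    """Fraction of predictions that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of ground-truth objects that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


# The ten IoU thresholds averaged by mAP@50-95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

if __name__ == "__main__":
    print(precision(tp=8, fp=2))  # 8 correct out of 10 predictions
    print(recall(tp=8, fn=4))     # 8 found out of 12 objects
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
    print(IOU_THRESHOLDS)
```

A detection counts as a true positive at a given threshold only if its IoU with a ground-truth box meets that threshold, which is why mAP@50-95 is always the stricter of the two mAP figures.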

INT8 TFLite Model Validation (Source):

Export the model to TFLite with INT8 quantization:

python export.py --weights yolov5s.pt --include tflite --int8

Note

Description of the parameters used in the export command:

--weights: Specifies the model weight file to be exported.

--include tflite: Specifies that the model should be exported to TensorFlow Lite format.

--int8: Indicates that INT8 quantization should be applied to the model, which reduces the model size and typically increases inference speed, usually at some cost in accuracy (compare the results below with the PyTorch baseline).

Validate Model:

python val.py --weights yolov5s-int8.tflite --data coco128.yaml --img 640

Result:

| Metric | Value |
| --- | --- |
| P (Precision) | 0.723 |
| R (Recall) | 0.583 |
| mAP@50 (Mean Average Precision at IoU=0.50) | 0.675 |
| mAP@50-95 (Mean Average Precision at IoU=0.50:0.95) | 0.416 |

FP32 TFLite Model Validation (Source):

Export the model to TFLite with FP32 precision:

    git apply export_fp32.patch
    python export.py --weights yolov5s.pt --include tflite

Note

This patch modifies the export script to support exporting the model in FP32 (32-bit float) TFLite format instead of FP16 (16-bit float). The changes include:

Changing the output filename to indicate FP32 format.

Updating the supported types to use tf.float32 for higher precision.

Validate Model:

python val.py --weights yolov5s-fp32.tflite --data coco128.yaml --img 640

Result:

| Metric | Value |
| --- | --- |
| P (Precision) | 0.669 |
| R (Recall) | 0.661 |
| mAP@50 (Mean Average Precision at IoU=0.50) | 0.712 |
| mAP@50-95 (Mean Average Precision at IoU=0.50:0.95) | 0.472 |

TFLite Model After MTK Converter Tool (on PC)

INT8 TFLite Model Validation (MTK):

Before proceeding with INT8 TFLite model validation, ensure that the NeuroPilot Converter Tool is installed.

Export and convert the model using the following commands:

    git apply Fix_yolov5_mtk_tflite_issue.patch
    python export.py --weights yolov5s.pt --img-size 640 640 --include torchscript
    python prepare_calibration_dataset.py
    python convert_to_quant_tflite.py

If you encounter the following error while running the above commands:

RuntimeError: `PyTorchConverter` only supports 2.0.0 > torch >= 1.3.0. Detected an installation of version v2.4.0+cu121. Please install the supported version.

Please install the compatible PyTorch version using the following command:

pip3 install torch==1.9.0 torchvision==0.10.0
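The version constraint quoted in the error message (1.3.0 <= torch < 2.0.0) can be checked before running the conversion. The sketch below is a standalone helper written for illustration, not part of the MTK tooling: `parse_version` and `is_supported_torch` are hypothetical names, and the parser only reads the leading numeric part of a version string such as `v2.4.0+cu121`.

```python
import re


def parse_version(version):
    """Extract (major, minor, patch) from strings like 'v2.4.0+cu121'."""
    match = re.match(r"v?(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def is_supported_torch(version):
    """True if 1.3.0 <= version < 2.0.0, the range quoted in the error."""
    return (1, 3, 0) <= parse_version(version) < (2, 0, 0)


if __name__ == "__main__":
    for candidate in ("1.9.0", "v2.4.0+cu121", "1.2.0"):
        print(candidate, is_supported_torch(candidate))
```

In practice, the string to check would be `torch.__version__` from the environment that runs `convert_to_quant_tflite.py`.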

Note

For more details on the scripts used, refer to the following:

prepare_calibration_dataset.py: View the script content and learn how to prepare the calibration dataset.

convert_to_quant_tflite.py: View the script content and understand the conversion process to INT8 TFLite.

Validate The MTK INT8 TFLite Model:

python val.py --weights yolov5s_int8_mtk.tflite --data coco128.yaml --img 640

Result:

| Metric | Value |
| --- | --- |
| P (Precision) | 0.666 |
| R (Recall) | 0.632 |
| mAP@50 (Mean Average Precision at IoU=0.50) | 0.799 |
| mAP@50-95 (Mean Average Precision at IoU=0.50:0.95) | 0.458 |

FP32 TFLite Model Validation (MTK):

Export and convert the model:

    python export.py --weights yolov5s.pt --img-size 640 640 --include torchscript
    python convert_to_tflite.py

Note

For more details on the scripts used, refer to the following:

convert_to_tflite.py: View the script content and understand the conversion process to FP32 TFLite.

Validate the MTK FP32 TFLite model:

python val.py --weights yolov5s_mtk.tflite --data coco128.yaml --img 640

Result:

| Metric | Value |
| --- | --- |
| P (Precision) | 0.669 |
| R (Recall) | 0.661 |
| mAP@50 (Mean Average Precision at IoU=0.50) | 0.699 |
| mAP@50-95 (Mean Average Precision at IoU=0.50:0.95) | 0.458 |

prepare_calibration_dataset.py:

    import os

    import numpy as np

    from utils.dataloaders import LoadImagesAndLabels
    from utils.general import check_dataset

    data = 'data/coco128.yaml'
    num_batches = 100
    calib_dir = 'calibration_dataset'
    os.makedirs(calib_dir)

    # Retrieve first 100 images from training set with batch_size = 1
    dataset = LoadImagesAndLabels(check_dataset(data)['train'], batch_size=1)
    for idx, (im, _target, _path, _shape) in enumerate(dataset):
        if idx >= num_batches:
            break
        # Expand shape from (3, 640, 640) to (1, 3, 640, 640)
        im = np.expand_dims(im, axis=0).astype(np.float32)
        # 0 - 255 to 0.0 - 1.0
        im /= 255
        np.save(os.path.join(calib_dir, 'batch-{:05d}.npy'.format(idx)), im)

convert_to_quant_tflite.py:

    import os

    import numpy as np

    import mtk_converter

    calib_dir = 'calibration_dataset'

    converter = mtk_converter.PyTorchConverter.from_script_module_file(
        'yolov5s.torchscript', input_shapes=[(1, 3, 640, 640)]
    )


    def data_gen():
        """Return an iterator for the calibration dataset."""
        for fn in sorted(os.listdir(calib_dir)):
            yield [np.load(os.path.join(calib_dir, fn))]


    converter.quantize = True
    converter.calibration_data_gen = data_gen
    converter.convert_to_tflite('yolov5s_int8_mtk.tflite')

convert_to_tflite.py:

    import mtk_converter

    converter = mtk_converter.PyTorchConverter.from_script_module_file(
        'yolov5s.torchscript', input_shapes=[(1, 3, 640, 640)]
    )
    converter.convert_to_tflite('yolov5s_mtk.tflite')

DLA Model Evaluation (On Devices)

NeuroPilot SDK tools Download:

Download NeuroPilot SDK All-In-One Bundle:

Visit the download page: NeuroPilot Downloads

Extract the Bundle:

tar zxvf neuropilot-sdk-basic-6.0.5-build20240103.tar.gz

Setting Environment Variables:

export LD_LIBRARY_PATH=/path/to/neuropilot-sdk-basic-6.0.5-build20240103/neuron_sdk/host/lib

INT8 DLA Model Validation (MTK):

Convert the INT8 TFLite model to DLA format:

/path/to/neuropilot-sdk-basic-6.0.5-build20240103/neuron_sdk/host/bin/ncc-tflite --arch=mdla3.0 yolov5s_int8_mtk.tflite

Prepare and push the files to the device:

    python prepare_evaluation_dataset.py
    adb shell mkdir /tmp/yolov5
    adb shell mkdir /tmp/yolov5/device_outputs
    adb push yolov5s_int8_mtk.dla /tmp/yolov5
    adb push evaluation_dataset /tmp/yolov5
    adb push run_device.sh /tmp/yolov5

Note

For more details on the scripts used, refer to the following:

prepare_evaluation_dataset.py: View the script content and learn how to prepare the evaluation dataset.

run_device.sh: View the script content and understand how to use the script to run inference on the device.

Run the evaluation on the device:

    cd /tmp/yolov5
    sh run_device.sh

Pull the results and validate:

    adb pull /tmp/yolov5/device_outputs .
    python val_int8_inference.py --weights yolov5s_int8_mtk.tflite

Result:

| Metric | Value |
| --- | --- |
| P (Precision) | 0.633 |
| R (Recall) | 0.652 |
| mAP@50 (Mean Average Precision at IoU=0.50) | 0.698 |
| mAP@50-95 (Mean Average Precision at IoU=0.50:0.95) | 0.459 |

prepare_evaluation_dataset.py:

    import os

    import numpy as np

    from utils.dataloaders import LoadImagesAndLabels
    from utils.general import check_dataset

    import mtk_converter

    # Create TFLite parser
    parser = mtk_converter.TFLiteParser('yolov5s_int8_mtk.tflite')

    # Get the first input details since the model has only one input
    input_details = parser.get_input_tensor_details()[0]

    # The input tensor is per-tensor quantized so we get the only scale and zero_point
    scale = input_details['quantization']['scales'][0]
    zero_point = input_details['quantization']['zero_points'][0]

    data = 'data/coco128.yaml'
    eval_dir = 'evaluation_dataset'
    os.makedirs(eval_dir)

    # Save the evaluation dataset as binary file
    dataset = LoadImagesAndLabels(check_dataset(data)['val'], batch_size=1)
    for idx, (im, _target, _path, _shape) in enumerate(dataset):
        # Expand shape from (3, 640, 640) to (1, 3, 640, 640)
        im = np.expand_dims(im, axis=0).astype(np.float32)
        # 0 - 255 to 0.0 - 1.0
        im /= 255
        # Quantize to INT8
        im = np.clip(np.round(im / scale) + zero_point, -128, 127).astype(np.int8)
        # Save as binary file
        im.tofile(os.path.join(eval_dir, 'batch-{:05d}.bin'.format(idx)))
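The quantization step in the script maps a float value x to round(x / scale) + zero_point, clipped to the INT8 range [-128, 127]. The pure-Python sketch below mirrors that formula for a single value. The `scale` and `zero_point` used here are made-up illustrative values; the script reads the real ones from the model's input tensor.

```python
def quantize(x, scale, zero_point):
    """Map a float to INT8: round(x / scale) + zero_point, clipped.

    Python's round() rounds halves to even, as np.round() does.
    """
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """Approximate inverse of quantize()."""
    return (q - zero_point) * scale


if __name__ == "__main__":
    # Hypothetical parameters covering the normalized input range [0, 1].
    scale, zero_point = 1.0 / 255.0, -128
    for x in (0.0, 0.25, 1.0):
        q = quantize(x, scale, zero_point)
        print(x, q, dequantize(q, scale, zero_point))
```

For values inside the representable range, the round trip through `quantize` and `dequantize` differs from the original by at most half a quantization step (`scale / 2`), which is one source of the accuracy differences between the INT8 and FP32 results.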

run_device.sh:

    #!/bin/sh

    input_dir="evaluation_dataset"
    output_dir="device_outputs"

    # Run inference and save the three outputs to output_dir
    run_eval() {
        echo Running "$1"
        out0="$output_dir/$(basename "$1" .bin)_0.bin"
        out1="$output_dir/$(basename "$1" .bin)_1.bin"
        out2="$output_dir/$(basename "$1" .bin)_2.bin"
        /usr/sbin/neuronrt -m hw -a yolov5s_int8_mtk.dla -i "$1" -o "$out0" -o "$out1" -o "$out2"
    }

    # Loop through all input data in evaluation dataset
    for input_bin in "$input_dir"/*; do
        if [ -f "$input_bin" ]; then
            run_eval "$input_bin"
        fi
    done
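After pulling `device_outputs` back to the PC, it can be useful to confirm that every input produced all three output files before running validation. The sketch below derives the expected names from the naming pattern in `run_device.sh` (`<input>_0.bin`, `<input>_1.bin`, `<input>_2.bin`). The function names are hypothetical and this check is not part of the original workflow.

```python
import os


def expected_outputs(input_name, num_outputs=3):
    """Output file names run_device.sh writes for one input file."""
    stem = os.path.basename(input_name)
    if stem.endswith(".bin"):
        stem = stem[: -len(".bin")]
    return [f"{stem}_{i}.bin" for i in range(num_outputs)]


def find_missing_outputs(input_dir, output_dir):
    """Return output file names that are expected but not present."""
    present = set(os.listdir(output_dir))
    missing = []
    for name in sorted(os.listdir(input_dir)):
        if not os.path.isfile(os.path.join(input_dir, name)):
            continue
        missing.extend(n for n in expected_outputs(name) if n not in present)
    return missing


if __name__ == "__main__":
    # Only runs the check when both directories exist locally.
    if os.path.isdir("evaluation_dataset") and os.path.isdir("device_outputs"):
        missing = find_missing_outputs("evaluation_dataset", "device_outputs")
        print(f"{len(missing)} output file(s) missing")
```

An empty result means each input in `evaluation_dataset` has its three outputs; any listed name points to an inference run that failed or was skipped on the device.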

FP32 DLA Model Validation (MTK):

Convert the FP32 TFLite model to DLA format:

/path/to/neuropilot-sdk-basic-6.0.5-build20240103/neuron_sdk/host/bin/ncc-tflite --arch=mdla3.0 --relax-fp32 yolov5s_mtk.tflite

Prepare and push the files to the device:

    python prepare_evaluation_dataset_fp32.py
    adb shell mkdir /tmp/yolov5
    adb shell mkdir /tmp/yolov5/device_outputs_fp32
    adb push yolov5s_mtk.dla /tmp/yolov5
    adb push evaluation_dataset_fp32 /tmp/yolov5
    adb push run_device_for_fp32.sh /tmp/yolov5

Note

For more details on the scripts used, refer to the following:

prepare_evaluation_dataset_fp32.py: View the script content and learn how to prepare the fp32 evaluation dataset.

run_device_for_fp32.sh: View the script content and understand how to use the script to run inference on the device.

Run the evaluation on the device:

    cd /tmp/yolov5
    sh run_device_for_fp32.sh

Pull the results and validate:

    adb pull /tmp/yolov5/device_outputs_fp32 .
    python val_fp32_inference.py --weights yolov5s_mtk.tflite

Result:

| Metric | Value |
| --- | --- |
| P (Precision) | 0.667 |
| R (Recall) | 0.661 |
| mAP@50 (Mean Average Precision at IoU=0.50) | 0.712 |
| mAP@50-95 (Mean Average Precision at IoU=0.50:0.95) | 0.472 |

prepare_evaluation_dataset_fp32.py:

    import os

    import numpy as np

    from utils.dataloaders import LoadImagesAndLabels
    from utils.general import check_dataset

    import mtk_converter

    # Create TFLite parser
    parser = mtk_converter.TFLiteParser('yolov5s_mtk.tflite')

    # Get the first input details since the model has only one input
    input_details = parser.get_input_tensor_details()[0]

    data = 'data/coco128.yaml'
    eval_dir = 'evaluation_dataset_fp32'
    os.makedirs(eval_dir)

    # Save the evaluation dataset as binary file
    dataset = LoadImagesAndLabels(check_dataset(data)['val'], batch_size=1)
    for idx, (im, _target, _path, _shape) in enumerate(dataset):
        # Expand shape from (3, 640, 640) to (1, 3, 640, 640)
        im = np.expand_dims(im, axis=0).astype(np.float32)
        # 0 - 255 to 0.0 - 1.0
        im /= 255
        # Save as binary file
        im.tofile(os.path.join(eval_dir, 'batch-{:05d}.bin'.format(idx)))
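Because each input is written as a raw tensor of shape (1, 3, 640, 640), as stated in the script comments, the size of every `.bin` file is predictable: 1,228,800 bytes for INT8 (1 byte per element) and 4,915,200 bytes for FP32 (4 bytes per element). The sketch below checks a directory against the expected size. `expected_file_size` and `wrong_size_files` are hypothetical helper names and not part of the original scripts.

```python
import os

INPUT_SHAPE = (1, 3, 640, 640)
BYTES_PER_ELEMENT = {"int8": 1, "fp32": 4}


def expected_file_size(dtype, shape=INPUT_SHAPE):
    """Size in bytes of one raw tensor file with the given dtype."""
    count = 1
    for dim in shape:
        count *= dim
    return count * BYTES_PER_ELEMENT[dtype]


def wrong_size_files(directory, dtype):
    """Return names of files whose size differs from the expected one."""
    expected = expected_file_size(dtype)
    return [
        name
        for name in sorted(os.listdir(directory))
        if os.path.getsize(os.path.join(directory, name)) != expected
    ]


if __name__ == "__main__":
    print(expected_file_size("int8"))
    print(expected_file_size("fp32"))
    # Only inspects the dataset when the directory exists locally.
    if os.path.isdir("evaluation_dataset_fp32"):
        print(wrong_size_files("evaluation_dataset_fp32", "fp32"))
```

A mismatch usually indicates that the wrong dataset directory was pushed to the device, for example the INT8 inputs being fed to the FP32 model.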

run_device_for_fp32.sh:

    #!/bin/sh

    input_dir="evaluation_dataset_fp32"
    output_dir="device_outputs_fp32"

    # Run inference and save the three outputs to output_dir
    run_eval() {
        echo Running "$1"
        out0="$output_dir/$(basename "$1" .bin)_0.bin"
        out1="$output_dir/$(basename "$1" .bin)_1.bin"
        out2="$output_dir/$(basename "$1" .bin)_2.bin"
        /usr/sbin/neuronrt -m hw -a yolov5s_mtk.dla -i "$1" -o "$out0" -o "$out1" -o "$out2"
    }

    # Loop through all input data in evaluation dataset
    for input_bin in "$input_dir"/*; do
        if [ -f "$input_bin" ]; then
            run_eval "$input_bin"
        fi
    done