GstInference (EOL)

Overview

GstInference is an open-source project from RidgeRun Engineering that provides a framework for integrating deep learning inference into GStreamer. You can either use one of the included elements to run out-of-the-box inference with the most popular deep learning architectures, or leverage the base classes and utilities to support your own custom architecture.

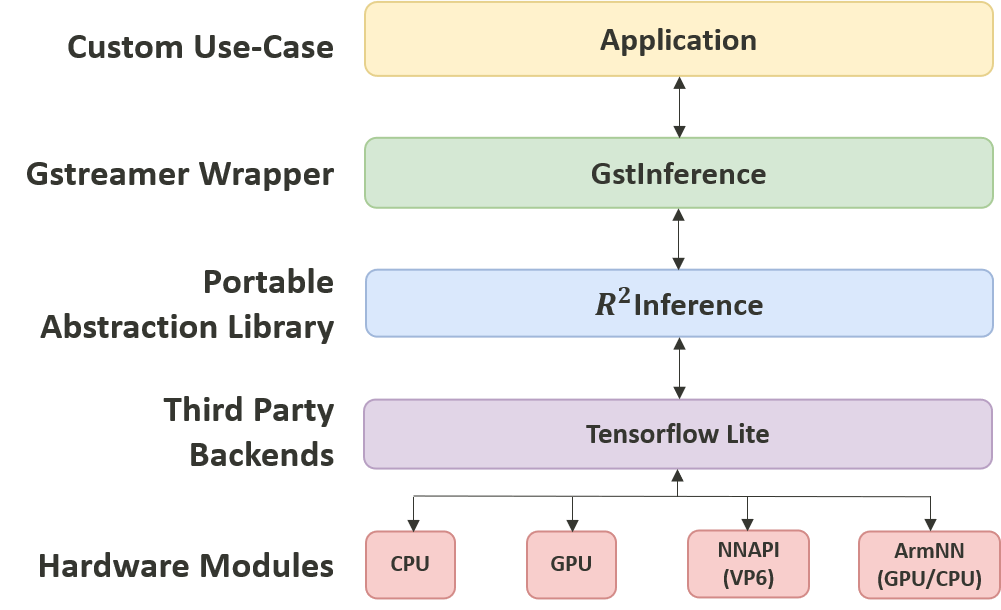

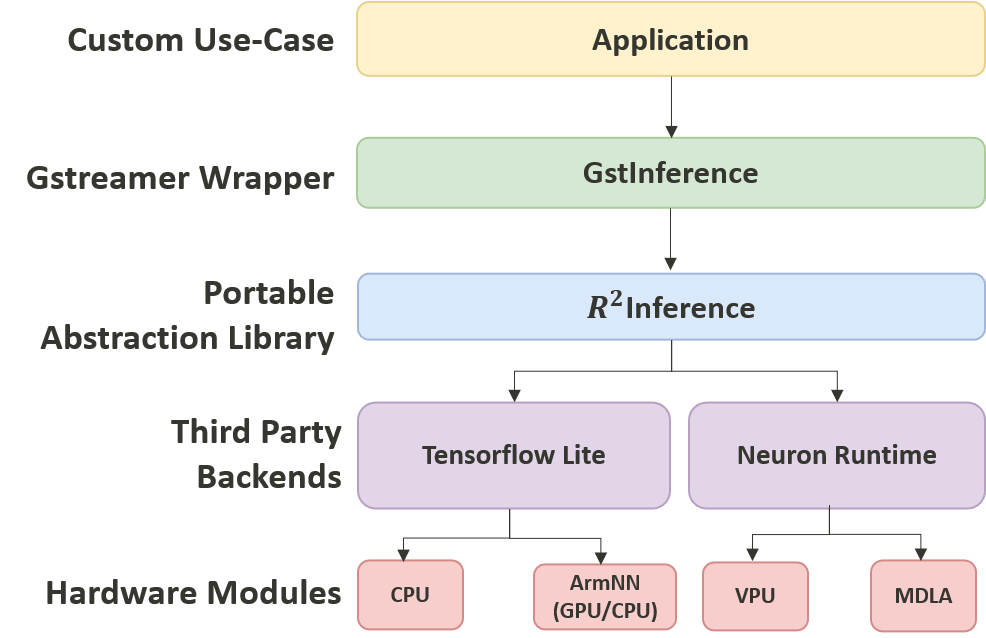

This repo uses R²Inference, an abstraction layer in C/C++ for a variety of machine learning frameworks. With R²Inference, a single C/C++ application can work with models from different frameworks. This makes it possible to run inference on different hardware resources, such as a CPU, a GPU, or AI-optimized accelerators, without rewriting the application.
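To illustrate the idea of such an abstraction layer, the following is a minimal C++ sketch. It is not the R²Inference API: `InferenceBackend`, `CpuStubBackend`, `AcceleratorStubBackend`, and `MakeBackend` are hypothetical names, and the stub backends only transform their input instead of running a real model. The sketch assumes a C++14 compiler.

```cpp
#include <memory>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical interface, NOT the R2Inference API. It only illustrates how
// several frameworks can be hidden behind one common abstraction.
class InferenceBackend {
 public:
  virtual ~InferenceBackend() = default;
  virtual std::string Name() const = 0;
  virtual std::vector<float> Predict(const std::vector<float>& input) = 0;
};

// Stub standing in for a CPU-backed framework: returns the input unchanged.
class CpuStubBackend : public InferenceBackend {
 public:
  std::string Name() const override { return "cpu-stub"; }
  std::vector<float> Predict(const std::vector<float>& input) override {
    return input;
  }
};

// Stub standing in for an accelerator-backed framework: doubles every value
// so that the two backends produce distinguishable results.
class AcceleratorStubBackend : public InferenceBackend {
 public:
  std::string Name() const override { return "accelerator-stub"; }
  std::vector<float> Predict(const std::vector<float>& input) override {
    std::vector<float> output;
    output.reserve(input.size());
    for (float value : input) {
      output.push_back(value * 2.0f);
    }
    return output;
  }
};

// Hypothetical factory: the application selects a backend by name and then
// talks only to the InferenceBackend interface.
std::unique_ptr<InferenceBackend> MakeBackend(const std::string& kind) {
  if (kind == "cpu") {
    return std::make_unique<CpuStubBackend>();
  }
  if (kind == "accelerator") {
    return std::make_unique<AcceleratorStubBackend>();
  }
  throw std::invalid_argument("unknown backend: " + kind);
}
```

With this shape, application code calls `MakeBackend("cpu")` or `MakeBackend("accelerator")` and then uses `Predict()` in the same way for both, so switching hardware resources is a change to one string rather than to the calling code.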

On Genio 350-EVK, we provide TensorFlow Lite with support for different hardware resources, so that you can develop a variety of machine learning applications.

GstInference Software Stack on Genio 350-EVK

On Genio 1200-EVK, users can run model inference through either the online-compiled path (TensorFlow Lite) or the offline-compiled path (Neuron).

GstInference Software Stack on Genio 1200-EVK

For more details on each platform, refer to the Machine Learning Developer Guide.

The following sections describe how to get GstInference running on your platform and show performance statistics for different combinations of camera sources and hardware resources.