Yocto

The Genio Yocto Project provides a flexible environment for evaluating AI workloads using open-source frameworks. This section focuses on benchmarking tools and demonstration applications specifically designed for Yocto-based Genio platforms.

While this page highlights Yocto-specific resources, the core development tools, host-side SDKs, and foundational concepts are common across all Genio operating systems.

For detailed information on obtaining SDKs and host tools, refer to AI Development Resources.

To understand the end-to-end AI deployment process, see Get Started With AI Tools.

Software Architecture

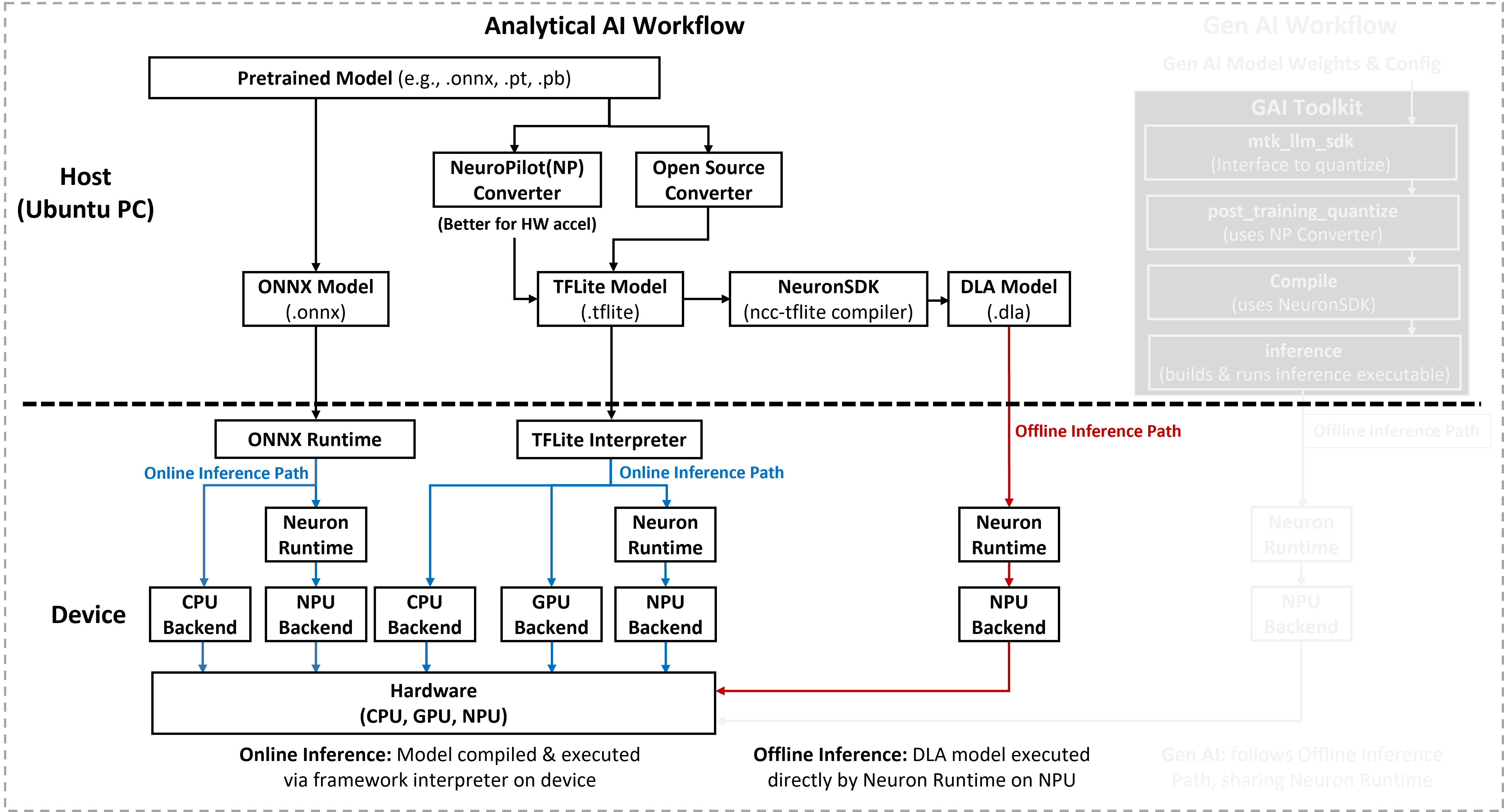

Genio Yocto images integrate two primary open-source inference frameworks: TFLite and ONNX Runtime. These frameworks are built from upstream open-source projects and pre-packaged in the Board Support Package (BSP) to enable immediate evaluation.

The following figure illustrates the Yocto-specific AI software stack, focusing on the analytical AI paths.
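As a quick check of the two pre-packaged frameworks described above, the following minimal sketch reports whether their Python bindings can be found on a board. The module names `tflite_runtime` and `onnxruntime` are the usual upstream package names; whether a given Genio Yocto image ships these Python bindings is an assumption, not something this page states.

```python
import importlib.util

# Assumed Python module names for the two frameworks. Whether a given
# image actually ships these bindings is an assumption of this sketch.
FRAMEWORK_MODULES = {
    "TFLite": "tflite_runtime",
    "ONNX Runtime": "onnxruntime",
}


def detect_frameworks(modules=FRAMEWORK_MODULES):
    """Return {framework name: bool} telling whether each module is importable."""
    return {
        name: importlib.util.find_spec(module) is not None
        for name, module in modules.items()
    }


if __name__ == "__main__":
    for name, available in detect_frameworks().items():
        print(f"{name}: {'available' if available else 'not found'}")
```

The check only looks up the modules without importing them, so it is cheap to run and has no side effects on the target.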

Supporting Scope on Yocto

The available AI software stack depends on the hardware capabilities of each Genio platform. The following table lists the compute backends available on Yocto for each TFLite path (online, offline, and generative) and for ONNX Runtime. An X marks a combination that is not supported.

| Platform | TFLite - Analytical AI (Online) | TFLite - Analytical AI (Offline) | TFLite - Generative AI | ONNX Runtime - Analytical AI |
| --- | --- | --- | --- | --- |
| Genio 520/720 | CPU + GPU + NPU | NPU | X (ETA: 2026/Q2) | CPU + NPU |
| Genio 510/700 | CPU + GPU + NPU | NPU | X | CPU |
| Genio 1200 | CPU + GPU + NPU | NPU | X | CPU |
| Genio 350 | CPU + GPU | X | X | CPU |
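When a deployment script has to pick a backend per board, the table above can be encoded as a small lookup. The sketch below transcribes the table as-is; the key names (`tflite_online`, `tflite_offline`, `tflite_generative`, `onnxruntime`) and the helper functions are hypothetical names chosen for illustration, not part of any MediaTek tool.

```python
# Backends per platform, transcribed from the support table.
# An empty tuple stands for "X" (not supported).
# The dictionary keys are hypothetical names used only in this sketch.
SUPPORT = {
    "Genio 520/720": {
        "tflite_online": ("CPU", "GPU", "NPU"),
        "tflite_offline": ("NPU",),
        "tflite_generative": (),
        "onnxruntime": ("CPU", "NPU"),
    },
    "Genio 510/700": {
        "tflite_online": ("CPU", "GPU", "NPU"),
        "tflite_offline": ("NPU",),
        "tflite_generative": (),
        "onnxruntime": ("CPU",),
    },
    "Genio 1200": {
        "tflite_online": ("CPU", "GPU", "NPU"),
        "tflite_offline": ("NPU",),
        "tflite_generative": (),
        "onnxruntime": ("CPU",),
    },
    "Genio 350": {
        "tflite_online": ("CPU", "GPU"),
        "tflite_offline": (),
        "tflite_generative": (),
        "onnxruntime": ("CPU",),
    },
}


def backends(platform, path):
    """Return the backends listed for a platform and inference path."""
    return SUPPORT[platform][path]


def is_supported(platform, path, backend):
    """Return True if the table lists the backend for this platform and path."""
    return backend in backends(platform, path)


if __name__ == "__main__":
    print(is_supported("Genio 350", "tflite_offline", "NPU"))
    print(backends("Genio 520/720", "onnxruntime"))
```

Keeping the matrix as plain data makes it easy to update when the support scope changes, without touching the lookup logic.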

Note

Genio Yocto images do not currently support Generative AI (GAI) workloads. MediaTek plans to introduce GAI support for the Yocto Project in 2026 Q1. Developers who need GAI capabilities today should refer to the Android platform resources.