IoT AI Hub Overview

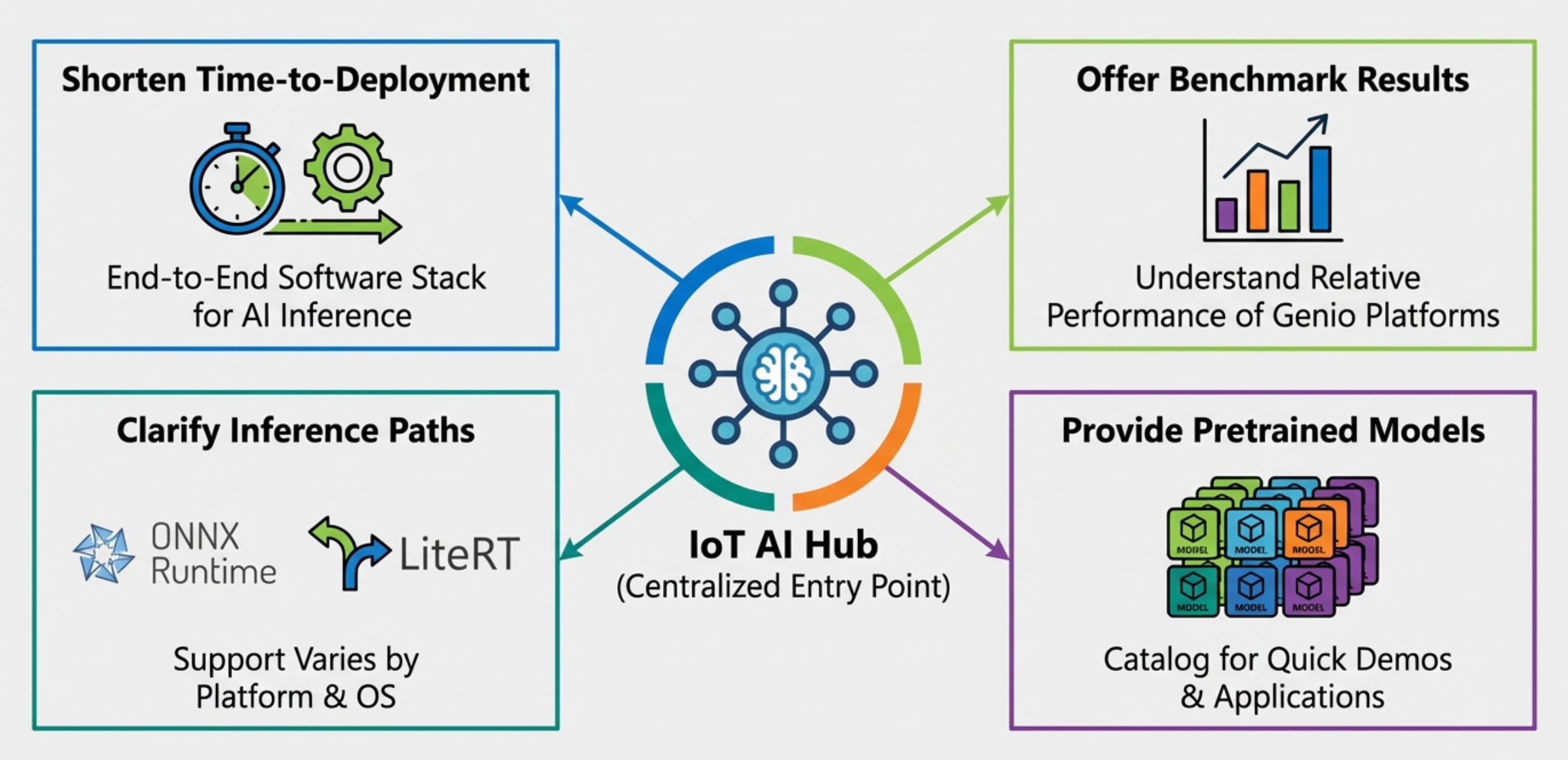

The IoT AI Hub is a centralized entry point for evaluating, developing, and deploying AI workloads on MediaTek Genio platforms.

The IoT AI Hub has four primary objectives:

- Shorten time-to-deployment by providing an end-to-end software stack for AI inference on IoT devices.
- Offer benchmark results that help developers understand the relative performance of different Genio platforms and configurations.
- Clarify the supported AI inference paths, including TFLite (LiteRT) and ONNX Runtime, and show how support varies by platform and operating system.
- Provide a catalog of pretrained models that can be used as-is, so developers can bring up demos and applications quickly.

The following sections describe the supported SoCs, software architecture, inference paths, and related ecosystem resources that are available through the IoT AI Hub.

Note

TFLite and LiteRT: LiteRT is the rebranded name for TensorFlow Lite, Google’s high-performance runtime for on-device AI. In this documentation, we primarily use TFLite to refer to this ecosystem.

AI Supporting Scope

The following table lists the supported combinations of SoC, operating system, and inference framework for the Genio platforms (O: supported; X: not supported).

| Platform | OS | TFLite - Analytical AI (Online) | TFLite - Analytical AI (Offline) | TFLite - Generative AI | ONNX Runtime - Analytical AI |
|---|---|---|---|---|---|
| Genio 520/720 | Android | O | O | O | O (By Request) |
| Genio 520/720 | Yocto | O | O | X (ETA: 2026/Q2) | O |
| Genio 510/700 | Android | O | O | X | X |
| Genio 510/700 | Yocto | O | O | X | O |
| Genio 510/700 | Ubuntu | O | O | X | X |
| Genio 1200 | Android | O | O | X | X |
| Genio 1200 | Yocto | O | O | X | O |
| Genio 1200 | Ubuntu | O | O | X | X |
| Genio 350 | Android | O | X | X | X |
| Genio 350 | Yocto | O | X | X | O |
| Genio 350 | Ubuntu | O | X | X | X |
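For build scripts or CI checks that need to gate features by platform, the support matrix can be encoded as a lookup table. The following is a minimal Python sketch under stated assumptions: the `SUPPORT` dictionary, the short path keys such as `tflite_genai`, and the `status` and `is_supported` helpers are hypothetical names for illustration, not part of any MediaTek SDK. The data is copied from the table as published and must be updated by hand when support changes.

```python
"""Hypothetical encoding of the Genio AI support matrix (not a MediaTek API)."""

# Hypothetical short keys for the four inference-path columns, in table order.
PATHS = (
    "tflite_online",   # TFLite - Analytical AI (Online)
    "tflite_offline",  # TFLite - Analytical AI (Offline)
    "tflite_genai",    # TFLite - Generative AI
    "onnx_runtime",    # ONNX Runtime - Analytical AI
)

# (platform, OS) -> one entry per path, copied verbatim from the table.
# "O" = supported, "X" = not supported; annotations such as
# "By Request" or an ETA are kept as part of the string.
SUPPORT = {
    ("Genio 520/720", "Android"): ("O", "O", "O", "O (By Request)"),
    ("Genio 520/720", "Yocto"): ("O", "O", "X (ETA: 2026/Q2)", "O"),
    ("Genio 510/700", "Android"): ("O", "O", "X", "X"),
    ("Genio 510/700", "Yocto"): ("O", "O", "X", "O"),
    ("Genio 510/700", "Ubuntu"): ("O", "O", "X", "X"),
    ("Genio 1200", "Android"): ("O", "O", "X", "X"),
    ("Genio 1200", "Yocto"): ("O", "O", "X", "O"),
    ("Genio 1200", "Ubuntu"): ("O", "O", "X", "X"),
    ("Genio 350", "Android"): ("O", "X", "X", "X"),
    ("Genio 350", "Yocto"): ("O", "X", "X", "O"),
    ("Genio 350", "Ubuntu"): ("O", "X", "X", "X"),
}


def status(platform: str, os_name: str, path: str) -> str:
    """Return the raw table entry, or "X" if the (platform, OS) pair is not listed.

    Raises ValueError if `path` is not one of PATHS.
    """
    row = SUPPORT.get((platform, os_name))
    if row is None:
        return "X"
    return row[PATHS.index(path)]


def is_supported(platform: str, os_name: str, path: str) -> bool:
    """True when the entry starts with "O" (this includes "O (By Request)")."""
    return status(platform, os_name, path).startswith("O")


if __name__ == "__main__":
    print(is_supported("Genio 350", "Yocto", "onnx_runtime"))
    print(is_supported("Genio 1200", "Ubuntu", "tflite_genai"))
    print(status("Genio 520/720", "Android", "onnx_runtime"))
```

Keeping the raw string returned by `status` lets a caller distinguish "O" from "O (By Request)" or read an ETA, while `is_supported` gives a simple yes/no answer.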

User Roles and Access Levels

The effective AI support for each operating system also depends on the type of customer account. The following table summarizes which user roles can access LiteRT and ONNX on Android, IoT Yocto, and Ubuntu, and indicates which configurations are still under planning.

| Framework / Inference Path | Android | IoT Yocto | Ubuntu |
|---|---|---|---|
| LiteRT (Analytical AI) | Direct Customer | Developer | Developer |
| LiteRT (Generative AI) | Direct Customer | Direct Customer (target: 2026/Q2) | Under Planning |
| ONNX (Analytical AI) | By Request | Developer | Under Planning |

The following definitions apply:

Direct Customer: Customers with a MediaTek Online (MOL) account, who can access the NeuroPilot Portal to read online documentation and download NeuroPilot SDKs.

Developer: Customers with a MediaTek Developer account, who can access Genio Developer Center resources.

By Request: Access is not generally available for self-service download. Customers must contact their MediaTek representative to request enablement.

Under Planning: Framework support is planned but not yet available for production use. The actual schedule may vary; customers should refer to the latest release notes or contact their MediaTek representative for updates.
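The access table and the definitions can likewise be combined into a small lookup that reports which account type grants self-service access. This is a minimal Python sketch under stated assumptions: the `ACCESS` and `SELF_SERVICE` dictionaries and the `required_account` helper are hypothetical. It also assumes that only the Direct Customer and Developer levels allow self-service download, and it treats an entry that still carries a target date as not yet available.

```python
"""Hypothetical lookup of the account type needed per framework and OS."""

from typing import Optional

# (framework, OS) -> access level, copied verbatim from the user-role table.
ACCESS = {
    ("LiteRT (Analytical AI)", "Android"): "Direct Customer",
    ("LiteRT (Analytical AI)", "IoT Yocto"): "Developer",
    ("LiteRT (Analytical AI)", "Ubuntu"): "Developer",
    ("LiteRT (Generative AI)", "Android"): "Direct Customer",
    ("LiteRT (Generative AI)", "IoT Yocto"): "Direct Customer (target: 2026/Q2)",
    ("LiteRT (Generative AI)", "Ubuntu"): "Under Planning",
    ("ONNX (Analytical AI)", "Android"): "By Request",
    ("ONNX (Analytical AI)", "IoT Yocto"): "Developer",
    ("ONNX (Analytical AI)", "Ubuntu"): "Under Planning",
}

# Assumed mapping from self-service access level to the account it requires.
SELF_SERVICE = {
    "Direct Customer": "MOL account",
    "Developer": "MediaTek Developer account",
}


def required_account(framework: str, os_name: str) -> Optional[str]:
    """Return the account type that grants self-service access, or None.

    None covers "By Request", "Under Planning", entries that still carry a
    target date, and combinations missing from the table.
    """
    level = ACCESS.get((framework, os_name), "Under Planning")
    return SELF_SERVICE.get(level)


if __name__ == "__main__":
    print(required_account("LiteRT (Analytical AI)", "Ubuntu"))
    print(required_account("ONNX (Analytical AI)", "Android"))
```

A `None` result signals that the customer should follow the "By Request" or "Under Planning" guidance and contact their MediaTek representative.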

Hardware Accelerator

The following table lists the GPU and NPU hardware on each Genio platform. Detailed specifications for the other board components are documented separately for each board.

| Accelerator | Genio 520 | Genio 720 | Genio 510 | Genio 700 | Genio 1200 | Genio 350 |
|---|---|---|---|---|---|---|
| GPU | Arm Mali-G57 MC2 | Arm Mali-G57 MC2 | Arm Mali-G57 MC2 | Arm Mali-G57 MC3 | Arm Mali-G57 MC5 | Arm Mali-G52 |
| NPU | 1x MDLA 5.3 | 1x MDLA 5.3 | 1x MDLA 3.0 + 1x VP6 | 1x MDLA 3.0 + 1x VP6 | 2x MDLA 2.0 + 1x VP6 | 1x VP6 |

Note

MDLA and VP6 are collectively referred to as the NPU. Note that although Genio 350 has VP6 (VPU) hardware, there is no software support for it on this platform.

GPU

The GPU provides neural network acceleration for floating-point models.

NPU

The MediaTek AI Processing Unit (NPU) is a high-performance hardware engine for deep learning, optimized for bandwidth and power efficiency. The NPU architecture consists of big, small, and tiny cores. This highly heterogeneous design suits a wide variety of modern smartphone tasks, such as AI camera, AI assistant, and OS or in-app enhancements. The term NPU refers collectively to the VPU and MDLA components.

MDLA

The MediaTek Deep Learning Accelerator (MDLA) is a powerful and efficient Convolutional Neural Network (CNN) accelerator. The MDLA is capable of achieving high AI benchmark results with high Multiply-Accumulate (MAC) utilization rates. The design integrates MAC units with dedicated function blocks, which handle activation functions, element-wise operations, and pooling layers.
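MAC utilization is commonly defined as the MAC operations actually completed per second divided by the accelerator's peak MAC throughput. The following Python sketch computes both the MAC count of a standard convolution layer and the resulting utilization. The helper names, the layer shape, the latency, the MAC-unit count, and the clock frequency are all hypothetical placeholders, not MDLA specifications, and the peak figure assumes each MAC unit completes one MAC per clock cycle.

```python
"""Sketch: MAC count and MAC utilization for one convolution layer.

All numbers are hypothetical placeholders, not MDLA specifications.
"""


def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int,
                k_h: int, k_w: int) -> int:
    """Multiply-accumulates in a standard (non-grouped) 2-D convolution.

    Each output element needs c_in * k_h * k_w MACs.
    """
    return h_out * w_out * c_out * c_in * k_h * k_w


def mac_utilization(macs: int, latency_s: float, mac_units: int,
                    clock_hz: float) -> float:
    """Achieved MACs per second divided by peak MACs per second.

    Peak assumes every MAC unit completes one MAC per clock cycle.
    """
    achieved = macs / latency_s
    peak = mac_units * clock_hz
    return achieved / peak


if __name__ == "__main__":
    # Hypothetical layer: 56x56 output, 64 -> 64 channels, 3x3 kernel.
    macs = conv2d_macs(56, 56, 64, 64, 3, 3)
    # Hypothetical accelerator: 1024 MAC units at 1 GHz; the layer takes 0.2 ms.
    util = mac_utilization(macs, 0.2e-3, 1024, 1.0e9)
    print(f"{macs} MACs, utilization {util:.1%}")
```

A high utilization means the MAC array stays busy rather than stalling on memory traffic or on operations it cannot execute, which is why dedicated blocks for activations, element-wise operations, and pooling help keep the ratio high.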

VP6

The Vision Processing Unit (VPU) offers general-purpose Digital Signal Processing (DSP) capabilities, with special hardware for accelerating complex imaging and computer vision algorithms. The VPU also offers outstanding performance while running AI models.

Further details about the Genio AI software stack are provided in Software Architecture.