ONNX Runtime - Analytical AI

Important

ONNX Runtime support for Genio platforms is under active development. MediaTek currently provides more mature and comprehensive hardware-acceleration coverage for FP16 models. Support for QDQ (INT8) quantization is evolving, and performance will continue to improve as MediaTek expands operator coverage in quarterly updates.

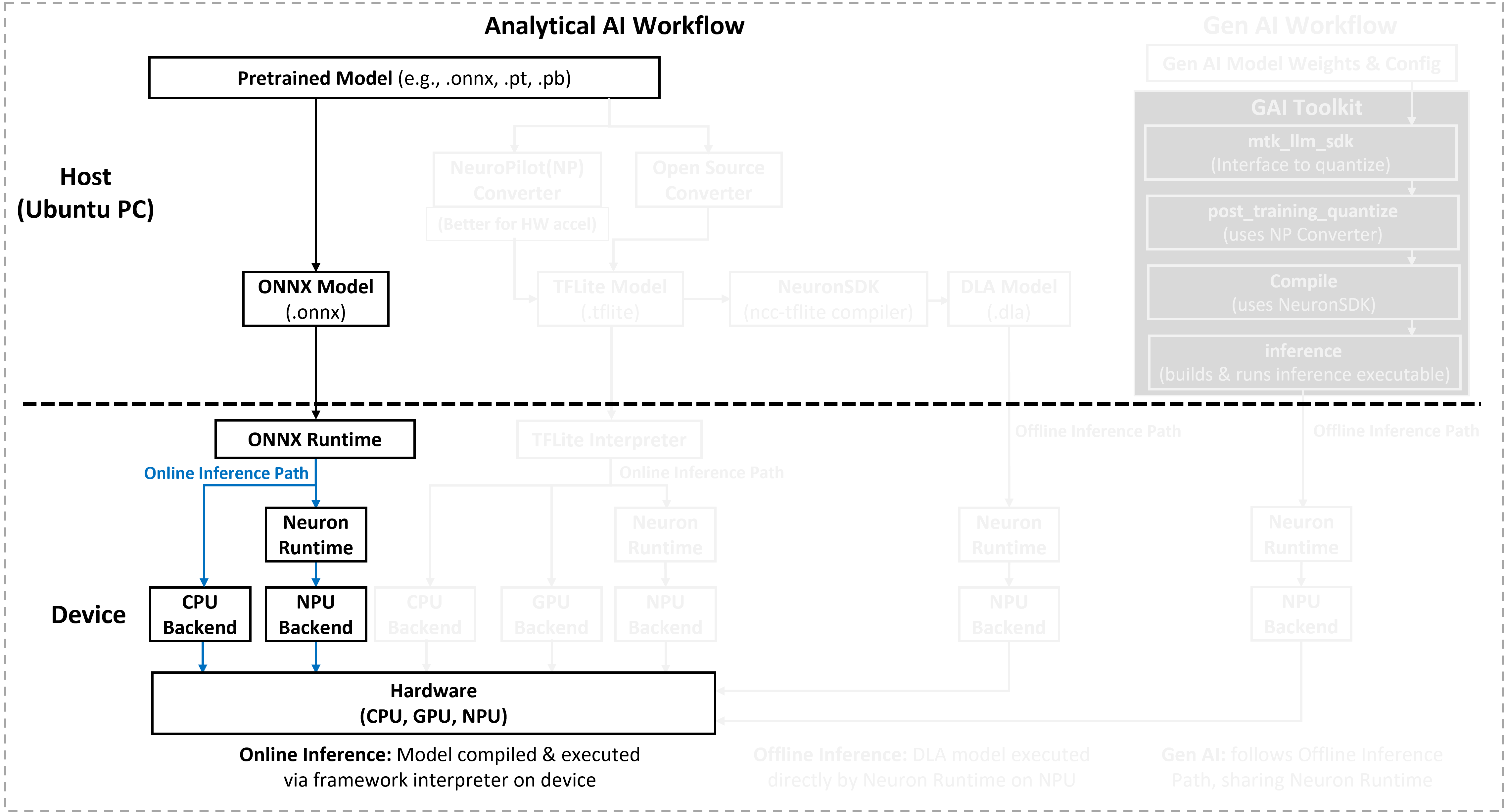

The resources in this section support the Online inference path for ONNX Runtime analytical AI workloads and provide the ONNX models needed to run those workloads with ONNX Runtime.

ONNX Runtime is available on MediaTek Genio platforms to accelerate ONNX models with hardware support.

High Performance: Utilize the Neuron Execution Provider (EP) on Genio 520 and 720 for NPU acceleration.

Broad Compatibility: Standard CPU EP execution is available across all Genio platforms.

FP16 Focus: FP16 models have the most complete hardware-acceleration support.

Quantized QDQ: QDQ models are partially supported with NPU acceleration.
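The NPU and CPU execution paths above can be combined in a single session. The sketch below builds an ordered provider list that puts an NPU execution provider first and keeps ONNX Runtime's `CPUExecutionProvider` last, so operators the NPU cannot run can fall back to the CPU. The matching keyword `"Neuron"` is an assumption. The exact provider name reported by the MediaTek build may differ, so check `onnxruntime.get_available_providers()` on your board.

```python
from typing import List, Sequence

# Name of ONNX Runtime's built-in CPU execution provider.
CPU_EP = "CPUExecutionProvider"


def choose_providers(available: Sequence[str], npu_keyword: str = "Neuron") -> List[str]:
    """Order providers so that an NPU EP is tried first and the CPU EP last.

    `npu_keyword` is an assumption: any provider whose name contains it
    (case-insensitive) is treated as the NPU execution provider.
    """
    npu = [p for p in available if npu_keyword.lower() in p.lower()]
    # The CPU EP always comes last so unsupported operators can fall back to it.
    return npu + [CPU_EP]


if __name__ == "__main__":
    # Hypothetical provider list for illustration only.
    print(choose_providers(["NeuronExecutionProvider", "CPUExecutionProvider"]))
```

On the target, the result would be passed as `onnxruntime.InferenceSession("model.onnx", providers=choose_providers(onnxruntime.get_available_providers()))`, where `model.onnx` is a placeholder path.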

For best performance and compatibility, deploy FP16 ONNX models whenever possible.
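Because FP16 and QDQ models receive different levels of NPU coverage, it can help to check which kind of model you have before deploying it. The following is a rough heuristic, not an official MediaTek tool: it treats any graph containing `QuantizeLinear`/`DequantizeLinear` nodes as QDQ and otherwise looks at initializer element types. With the `onnx` package, the inputs could be gathered as `[n.op_type for n in model.graph.node]` and `[onnx.TensorProto.DataType.Name(t.data_type) for t in model.graph.initializer]`.

```python
from typing import Iterable

# Operator types that mark a QDQ-quantized graph.
QDQ_OPS = {"QuantizeLinear", "DequantizeLinear"}


def classify_precision(op_types: Iterable[str], initializer_types: Iterable[str]) -> str:
    """Roughly classify an ONNX graph as "QDQ", "FP16", "FP32", or "unknown".

    `initializer_types` are ONNX element-type names such as "FLOAT16" or "FLOAT".
    """
    ops = set(op_types)
    types = set(initializer_types)
    if ops & QDQ_OPS:
        return "QDQ"
    # Check FP16 first: an FP16 model may still keep a few FP32 tensors.
    if "FLOAT16" in types:
        return "FP16"
    if "FLOAT" in types:
        return "FP32"
    return "unknown"
```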

Performance Comparison

MediaTek provides a comprehensive matrix of performance data across different Genio platforms.

To view a quick summary of high-end platforms (G520/G720), see the tables below.

To compare performance across all platforms (G350, G510, G700, G1200), refer to the dedicated page:

Supported Models on Genio Products

The following tables list ONNX models that have been validated on Genio platforms. The current list contains 45 models, grouped into three model families:

TAO Related

Legacy Analytical

Robotic

If your model is not listed in the tables below, you are still welcome to try it. For questions or issues, post on the Genio Community forum.

Note

The NPU figures in these tables were measured using the Neuron Execution Provider with performance mode enabled; the CPU figures use the standard CPU Execution Provider. Results are shown for different Genio products, models, and data types.

Legacy Analytical Models

Legacy analytical models are classic vision backbones and networks, listed here for benchmarking and reference only. Their accuracy is not evaluated.

| Task | Model Name | Data Type | Input Size | G520 NPU (ms) | G520 CPU (ms) | G720 NPU (ms) | G720 CPU (ms) | Pretrained Model |
|---|---|---|---|---|---|---|---|---|
| Object Detection | YOLOv5s | Quant8 | 640x640 | Not supported | 225.74 | Not supported | 221.29 | |
| Object Detection | YOLOv5s | Float32 | 640x640 | 36.50 | 607.68 | 32.37 | 586.80 | |
| Object Detection | YOLOv8s | Quant8 | 640x640 | 90.11 | 353.19 | 80.57 | 346.58 | |
| Object Detection | YOLO11s | Quant8 | 640x640 | 102.15 | 301.50 | 90.99 | 295.32 | |

| Task | Model Name | Data Type | Input Size | G520 NPU (ms) | G520 CPU (ms) | G720 NPU (ms) | G720 CPU (ms) | Pretrained Model |
|---|---|---|---|---|---|---|---|---|
| Classification | ConvNeXt | Quant8 | 224x224 | Not supported | 516.21 | Not supported | 1115.18 | |
| Classification | ConvNeXt | Float32 | 224x224 | Not supported | 1117.20 | Not supported | 510.37 | |
| Classification | DenseNet | Quant8 | 224x224 | Not supported | 104.51 | Not supported | 103.30 | |
| Classification | DenseNet | Float32 | 224x224 | 8.46 | 205.29 | 7.49 | 200.32 | |
| Classification | EfficientNet | Quant8 | 224x224 | 33.33 | 24.07 | 30.52 | 23.94 | |
| Classification | EfficientNet | Float32 | 224x224 | 3.15 | 66.64 | 2.81 | 65.57 | |
| Classification | MobileNetV2 | Quant8 | 224x224 | 1.43 | 12.36 | 1.26 | 12.23 | |
| Classification | MobileNetV2 | Float32 | 224x224 | 1.75 | 31.69 | 1.47 | 30.41 | |
| Classification | MobileNetV3 | Quant8 | 224x224 | Not supported | 6.30 | Not supported | 6.16 | |
| Classification | MobileNetV3 | Float32 | 224x224 | 13.72 | 10.74 | 12.81 | 10.45 | |
| Classification | ResNet | Quant8 | 224x224 | 2.04 | 45.87 | 1.78 | 45.08 | |
| Classification | ResNet | Float32 | 224x224 | 3.81 | 112.00 | 3.49 | 111.24 | |
| Classification | SqueezeNet | Quant8 | 224x224 | 9.36 | 33.08 | 8.38 | 31.96 | |
| Classification | SqueezeNet | Float32 | 224x224 | 9.86 | 53.15 | 8.81 | 52.00 | |
| Classification | VGG | Quant8 | 224x224 | 13.79 | 366.54 | 11.62 | 366.31 | |
| Classification | VGG | Float32 | 224x224 | 37.17 | 902.03 | 32.24 | 889.14 | |

| Task | Model Name | Data Type | Input Size | G520 NPU (ms) | G520 CPU (ms) | G720 NPU (ms) | G720 CPU (ms) | Pretrained Model |
|---|---|---|---|---|---|---|---|---|
| Recognition | VGGFace | Quant8 | 224x224 | 291.44 | 366.43 | 291.22 | 367.59 | |
| Recognition | VGGFace | Float32 | 224x224 | 37.98 | 904.24 | 32.84 | 891.02 | |
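The tables also show that the NPU is not always the faster choice. For example, on G520, MobileNetV3 (Float32) and EfficientNet (Quant8) run faster on the CPU EP than on the NPU. The sketch below computes the CPU-to-NPU latency ratio for a few G520 rows copied from the tables above and flags those cases; the helper names are illustrative only.

```python
from typing import List, Optional, Sequence, Tuple

# A few G520 rows copied from the tables above: (model, data type, NPU ms, CPU ms).
# None marks an NPU entry reported as "Not supported".
Row = Tuple[str, str, Optional[float], float]
G520_ROWS: List[Row] = [
    ("ResNet", "Quant8", 2.04, 45.87),
    ("MobileNetV3", "Float32", 13.72, 10.74),
    ("EfficientNet", "Quant8", 33.33, 24.07),
    ("ConvNeXt", "Quant8", None, 516.21),
]


def npu_speedup(npu_ms: Optional[float], cpu_ms: float) -> Optional[float]:
    """CPU latency divided by NPU latency; a value above 1 means the NPU is faster."""
    if npu_ms is None:
        return None
    return cpu_ms / npu_ms


def report(rows: Sequence[Row]) -> List[str]:
    """Return one human-readable line per row."""
    lines = []
    for model, dtype, npu_ms, cpu_ms in rows:
        speedup = npu_speedup(npu_ms, cpu_ms)
        if speedup is None:
            verdict = "NPU not supported, run on CPU"
        elif speedup < 1.0:
            verdict = f"{speedup:.2f}x (CPU is faster)"
        else:
            verdict = f"{speedup:.2f}x"
        lines.append(f"{model} {dtype}: {verdict}")
    return lines


for line in report(G520_ROWS):
    print(line)
```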

Robotic Models

Robotic models target robotic perception and control workloads, such as grasping, navigation, or policy learning. These models demonstrate the performance of ONNX Runtime on Genio platforms for specialized robotic tasks.

| Task | Model Name | Data Type | Input Size | G520 NPU (ms) | G520 CPU (ms) | G720 NPU (ms) | G720 CPU (ms) | Pretrained Model |
|---|---|---|---|---|---|---|---|---|
| Omni6DPose | scale_policy | Float32 | 1x3x3 | Not supported | 0.18 | Not supported | 0.17 | |
| Diffusion Policy | model_diffusion_sampling | Float32 | trajectory:1x16x12, global_cond:1x800 | 60.66 | 44.71 | 57.68 | 41.96 | |
| MobileSam | mobilesam_encoder | Float32 | 3x448x448 | Not supported | 705.14 | Not supported | 694.74 | |
| RegionNormalizedGrasp | anchornet | Float32 | 4x640x360 | 13.42 | 187.66 | 12.13 | 183.82 | |
| RegionNormalizedGrasp | localnet | Float32 | 64x64x6 | 20.02 | 19.65 | 19.78 | 20.06 | |
| YoloWorld | yoloworld_xl | Float32 | 3x640x640 | 465.61 | 11466.42 | 403.15 | 11214.33 | |
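Some robotic models take more than one input; Diffusion Policy, for example, expects `trajectory` (1x16x12) and `global_cond` (1x800). The hypothetical helper below parses the Input Size notation used in the table into named shapes, which is a convenient starting point for building dummy inputs for a quick on-device check. Entries without a name are keyed `input0`, `input1`, and so on; those keys are placeholders and must be replaced with the real names from `session.get_inputs()`.

```python
from typing import Dict, Tuple


def parse_input_spec(spec: str) -> Dict[str, Tuple[int, ...]]:
    """Parse an input-size string such as "trajectory:1x16x12, global_cond:1x800".

    Unnamed entries (for example "3x640x640") get placeholder keys "input0", "input1", ...
    """
    shapes: Dict[str, Tuple[int, ...]] = {}
    for i, part in enumerate(p.strip() for p in spec.split(",")):
        # rpartition returns ("", "", part) when there is no "name:" prefix.
        name, sep, dims = part.rpartition(":")
        if not sep:
            name = f"input{i}"
        shapes[name] = tuple(int(d) for d in dims.split("x"))
    return shapes
```

With NumPy, zero-filled feeds could then be built as `{name: np.zeros(shape, dtype=np.float32) for name, shape in parse_input_spec(spec).items()}` and passed to `session.run(None, feeds)`. Shapes such as 3x640x640 omit the batch dimension, so compare them with `session.get_inputs()[i].shape` first.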

Performance Notes and Limitations

Note

The measurements were obtained using onnxruntime_perf_test, and each model’s performance can vary depending on:

The specific Genio platform and hardware configuration.

The version of the board image and evaluation kit (EVK).

The selected backend and model variant.

To obtain the most accurate performance numbers for your use case, you must run the application directly on the target platform.
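To repeat the measurements on your own board, `onnxruntime_perf_test` can be scripted. The sketch below runs the tool with caller-supplied arguments and extracts the average latency from its summary. The summary-line format matched by the regular expression is an assumption about the tool's output and should be checked against your build; the command-line option for selecting the Neuron EP is not covered here.

```python
import re
import subprocess
from typing import List, Optional

# Assumption: onnxruntime_perf_test prints a summary line of the form
# "Average inference time cost: <value> ms". Verify against your build's output.
AVG_RE = re.compile(r"Average inference time cost:\s*([0-9.]+)\s*ms")


def parse_average_ms(output: str) -> Optional[float]:
    """Return the average latency in ms, or None if the summary line is missing."""
    match = AVG_RE.search(output)
    return float(match.group(1)) if match else None


def run_perf_test(args: List[str]) -> Optional[float]:
    """Run onnxruntime_perf_test with the given arguments and parse its stdout."""
    result = subprocess.run(
        ["onnxruntime_perf_test", *args],
        capture_output=True,
        text=True,
        check=True,  # raise if the tool exits with an error
    )
    return parse_average_ms(result.stdout)
```

For a CPU baseline, a call might look like `run_perf_test(["-e", "cpu", "-m", "times", "-r", "100", "model.onnx"])`, where `model.onnx` is a placeholder path.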

Current Limitations

ONNX-GAI (generative AI) models are not officially supported at this time. Some proof-of-concept experiments exist internally, but they are not production-ready and are not part of the validated model list.

For the latest roadmap or early-access updates on ONNX-GAI support, please refer to the Genio Community forum.