AI Development Resources

The IoT AI ecosystem combines tools, documentation, demo applications, and community resources to help developers build and deploy AI workloads on IoT platforms. Deploying AI workloads on Genio platforms requires NeuroPilot (NP).

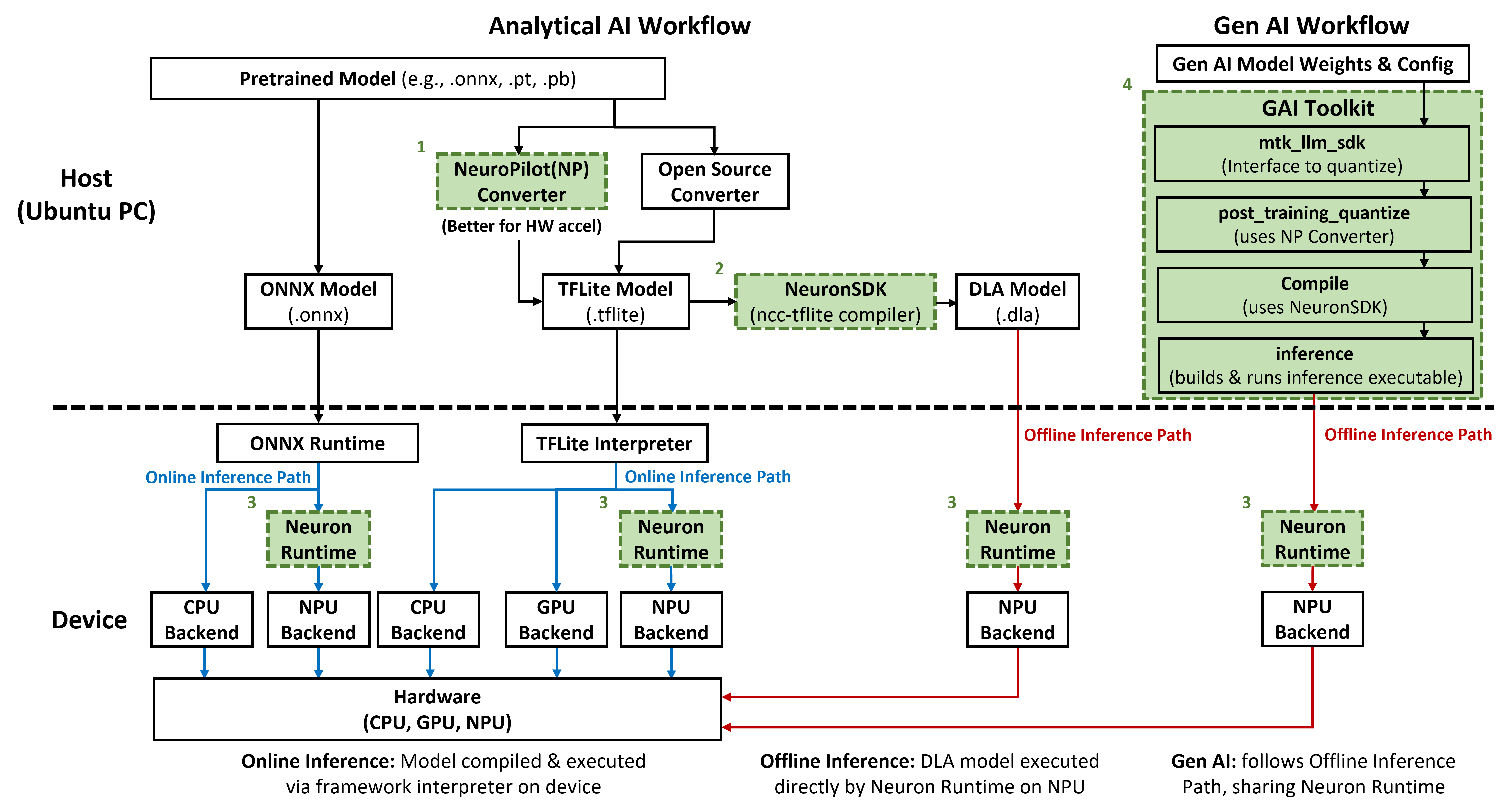

NeuroPilot (NP) is a comprehensive collection of software tools, runtimes, and APIs designed to facilitate the development and deployment of efficient AI applications on MediaTek platforms. While NeuroPilot supports a wide range of MediaTek products, Genio IoT platforms utilize a specific subset of these capabilities tailored for edge AI inference. These supported features primarily include:

Model quantization via the NP Converter (1).

Model compilation for the NPU via the ncc-tflite compiler (2).

Model execution through the Neuron Runtime (3).

Quantization and deployment of large models through the specialized tools in the GAI Toolkit (4).

Understanding the Software-Hardware Binding

On Genio platforms, the NeuroPilot version is tightly coupled to the version of the MediaTek Deep Learning Accelerator (MDLA) hardware integrated into the System-on-Chip (SoC). This relationship has the following implications:

Fixed NP Versions: The NP version (e.g., NP6, NP8) is fixed for the lifetime of the SoC and cannot be upgraded independently of the platform. As a result, the NPU operator set is also fixed for the lifetime of each SoC.

Platform Specificity: Each MDLA generation (e.g., MDLA 5.3, 3.0, 2.0) has a unique set of supported operations and hardware restrictions.

Version-Matched Tools: Developers must use the specific SDKs and host-side tools (converters and compilers) that match the NP version of their target hardware.
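The binding described above can be sketched as a small lookup. This is only an illustration: the NP and MDLA values are the ones listed on this page, while the `NpuBinding` class, the `BINDINGS` dictionary, and the `check_compiler_generation` helper are hypothetical names that are not part of any MediaTek SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NpuBinding:
    np_version: int              # NeuroPilot generation, fixed per SoC
    mdla_version: Optional[str]  # None where this page lists no MDLA version


# Values taken from this page; the dictionary layout is illustrative only.
BINDINGS = {
    "Genio 520": NpuBinding(8, "5.3"),
    "Genio 720": NpuBinding(8, "5.3"),
    "Genio 510": NpuBinding(6, "3.0"),
    "Genio 700": NpuBinding(6, "3.0"),
    "Genio 1200": NpuBinding(6, "2.0"),
    "Genio 350": NpuBinding(4, None),
}


def check_compiler_generation(platform: str, compiler_np_version: int) -> NpuBinding:
    """Return the platform's binding, or raise if the compiler generation differs."""
    binding = BINDINGS[platform]
    if compiler_np_version != binding.np_version:
        raise ValueError(
            f"{platform} is bound to NP{binding.np_version}; "
            f"an NP{compiler_np_version} compiler cannot target it"
        )
    return binding
```

A build script could call a check like this before compiling, so that a mismatched compiler is rejected on the host rather than discovered on the device.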

AI Frameworks and NeuroPilot Dependency

NeuroPilot is designed primarily around the TFLite ecosystem. The requirement for NeuroPilot tools depends on the chosen AI framework and workflow:

TFLite Path (Analytical AI): Requires NeuroPilot. Developers must use the NP Converter for quantization and the Neuron SDK (compiler and runtime) to enable NPU hardware acceleration.

Generative AI (GAI) Path: Requires NeuroPilot. GAI workloads on Genio are built upon the NeuroPilot stack and require the GAI Toolkit for deployment.

ONNX Runtime Path: Does not require the NeuroPilot proprietary toolchain. This path utilizes the open-source ONNX Runtime and its corresponding execution providers.
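As a rough summary of the three paths above, the following sketch maps each workflow to the NeuroPilot tools it needs. The `Workflow` enum, the `REQUIRED_TOOLS` dictionary, and the `requires_neuropilot` function are hypothetical names chosen for illustration; the tool names themselves come from this page.

```python
from enum import Enum


class Workflow(Enum):
    TFLITE_ANALYTICAL = "TFLite (Analytical AI)"
    TFLITE_GENERATIVE = "TFLite (Generative AI)"
    ONNX_RUNTIME = "ONNX Runtime"


# NeuroPilot tools named on this page for each path.
# An empty list means the path does not use the proprietary toolchain.
REQUIRED_TOOLS = {
    Workflow.TFLITE_ANALYTICAL: ["NP Converter", "Neuron SDK (compiler and runtime)"],
    Workflow.TFLITE_GENERATIVE: ["GAI Toolkit"],
    Workflow.ONNX_RUNTIME: [],
}


def requires_neuropilot(workflow: Workflow) -> bool:
    """True if the workflow depends on at least one NeuroPilot tool."""
    return bool(REQUIRED_TOOLS[workflow])
```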

The following table summarizes the supported combinations of platform, operating system, NPU hardware version (MDLA), NeuroPilot software version (NP), and AI framework. In the table, X marks an entry that is not supported or not available.

| Platform | OS | NP Version | MDLA Version | TFLite - Analytical AI (Online) | TFLite - Analytical AI (Offline) | TFLite - Generative AI | ONNX Runtime - Analytical AI |
|---|---|---|---|---|---|---|---|
| Genio 520/720 | Android | 8 | 5.3 | CPU + GPU + NPU | NPU | NPU | CPU + NPU |
| Genio 520/720 | Yocto | 8 | 5.3 | CPU + GPU + NPU | NPU | X (ETA: 2026/Q2) | CPU + NPU |
| Genio 510/700 | Android | 6 | 3 | CPU + GPU + NPU | NPU | X | X |
| Genio 510/700 | Yocto | 6 | 3 | CPU + GPU + NPU | NPU | X | CPU |
| Genio 510/700 | Ubuntu | 6 | 3 | CPU + GPU + NPU | NPU | X | X |
| Genio 1200 | Android | 6 | 2 | CPU + GPU + NPU | NPU | X | X |
| Genio 1200 | Yocto | 6 | 2 | CPU + GPU + NPU | NPU | X | CPU |
| Genio 1200 | Ubuntu | 6 | 2 | CPU + GPU + NPU | NPU | X | X |
| Genio 350 | Android | 4 | X | CPU + GPU + NPU | X | X | X |
| Genio 350 | Yocto | 4 | X | CPU + GPU | X | X | CPU |
| Genio 350 | Ubuntu | 4 | X | CPU + GPU | X | X | X |
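For scripted checks, the support table can be encoded as a lookup. The sketch below copies the table's values; the `SUPPORT` dictionary, the column keys in `COLUMNS`, and the `backends` function are hypothetical names used only for this example. Entries marked X, including the one with an ETA, are stored as `None`.

```python
COLUMNS = ("tflite_online", "tflite_offline", "tflite_gai", "onnx_runtime")

ALL = "CPU + GPU + NPU"

# (platform, OS) -> one cell per entry in COLUMNS; None stands for "X".
SUPPORT = {
    ("Genio 520/720", "Android"): (ALL, "NPU", "NPU", "CPU + NPU"),
    ("Genio 520/720", "Yocto"): (ALL, "NPU", None, "CPU + NPU"),  # GAI: ETA 2026/Q2
    ("Genio 510/700", "Android"): (ALL, "NPU", None, None),
    ("Genio 510/700", "Yocto"): (ALL, "NPU", None, "CPU"),
    ("Genio 510/700", "Ubuntu"): (ALL, "NPU", None, None),
    ("Genio 1200", "Android"): (ALL, "NPU", None, None),
    ("Genio 1200", "Yocto"): (ALL, "NPU", None, "CPU"),
    ("Genio 1200", "Ubuntu"): (ALL, "NPU", None, None),
    ("Genio 350", "Android"): (ALL, None, None, None),
    ("Genio 350", "Yocto"): ("CPU + GPU", None, None, "CPU"),
    ("Genio 350", "Ubuntu"): ("CPU + GPU", None, None, None),
}


def backends(platform: str, os_name: str, workflow: str) -> list:
    """Return the listed backends, or an empty list for an unsupported combination."""
    cell = SUPPORT[(platform, os_name)][COLUMNS.index(workflow)]
    return [] if cell is None else cell.split(" + ")
```

For example, `backends("Genio 350", "Yocto", "onnx_runtime")` returns `["CPU"]`, and `backends("Genio 1200", "Ubuntu", "tflite_gai")` returns an empty list.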

SDKs and Tools by Platform

Developers should select the appropriate tools, documentation, and demo applications based on the platform’s NeuroPilot and MDLA versions.

Important

Access to Android AI documentation, tools, and OS images is limited to Direct Customers with a valid MediaTek Online (MOL) account. A MediaTek Developer account only provides access to the Genio Developer Center and does not grant access to NeuroPilot SDK downloads or Android images. For a detailed mapping of account types and AI framework access on each operating system, refer to User Roles and Access Levels.

Genio 520 / 720 (NeuroPilot 8 + MDLA 5.3)

Tools

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| NP8 SDK All-In-One Bundle | Android, Yocto | NDA | Contains the NP8 Converter and NP8 Compiler (ncc-tflite) for host-side model preparation. | |
| Neuron8 SDK | Android | NDA | Provides the | |
| GAI Toolkit | Android | NDA | Provides an end-to-end toolkit to quantize and deploy Generative AI models. | |

Documentation

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| NeuroPilot8 Android User Guide | Android | NDA | End-to-end guide for TFLite and NeuroPilot on Android, covering online and offline flows. | |
| Yocto AI User Guide | Yocto | Public | Comprehensive instructions for integrating and using TFLite and ONNX Runtime on IoT Yocto. | |
| NeuroPilot8 Converter User Guide | Android, Yocto | NDA | Detailed usage of the NP8 converter, including model preparation and quantization. | |
| TFLite Supported Operations | Android, Yocto | Public | List of TFLite operators supported by the NP8 hardware and software stack. | |
| ONNX Supported Operations | Yocto | Public | Under planning (Target: 2026 Q1). | Coming Soon |

Demo Applications

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| Android NP8 Demo | Android | NDA | Sample applications demonstrating TFLite and NeuroPilot usage on Android devices. | |
| Yocto AI Examples | Yocto | Public | Built-in command-line examples for TFLite and ONNX Runtime on Genio Yocto images. | |

Genio 510 / 700 (NeuroPilot 6 + MDLA 3.0)

Tools

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| NP8 SDK All-In-One Bundle | Android, Yocto, Ubuntu | NDA | Recommended for model conversion. Set | |
| NP6 SDK All-In-One Bundle | Android, Yocto, Ubuntu | NDA | Contains the NP6 Compiler (ncc-tflite) for MDLA 3.0 model compilation. | |
| Neuron6 SDK | Android | NDA | Provides the | |

Documentation

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| NeuroPilot6 Android User Guide | Android | NDA | Comprehensive guide for deploying AI models on NP6-based Android platforms. | |
| Yocto AI User Guide | Yocto | Public | Comprehensive instructions for integrating and using TFLite and ONNX Runtime on IoT Yocto. | |
| NeuroPilot6 Converter User Guide | Android, Yocto, Ubuntu | NDA | Instructions for using NP6 host tools to prepare and quantize TFLite models. | |
| TFLite Supported Operations | Android, Yocto, Ubuntu | Public | List of TFLite operators supported by the NP6 stack on Genio 510/700. | |
| ONNX Supported Operations | Yocto | Public | Under planning (Target: 2026 Q1). | Coming Soon |

Demo Applications

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| Android NP6 Demo | Android | NDA | Reference applications for TFLite and NeuroPilot optimized for NP6. | |
| Yocto AI Examples | Yocto | Public | Built into the IoT Yocto demo image; provides command-line examples for TFLite (LiteRT) and ONNX Runtime. | |

Genio 1200 (NeuroPilot 6 + MDLA 2.0)

Tools

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| NP8 SDK All-In-One Bundle | Android, Yocto, Ubuntu | NDA | Recommended for model conversion. Set | |
| NP6 SDK All-In-One Bundle | Android, Yocto, Ubuntu | NDA | Uses the same NP6 host tools as Genio 700 to target MDLA 2.0. | |
| Neuron6 SDK | Android | NDA | Provides the | |

Documentation

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| NeuroPilot6 Android User Guide | Android | NDA | Comprehensive guide for deploying AI models on NP6-based Android platforms. | |
| Yocto AI User Guide | Yocto | Public | Comprehensive instructions for integrating and using TFLite and ONNX Runtime on IoT Yocto. | |
| NeuroPilot6 Converter User Guide | Android, Yocto, Ubuntu | NDA | Instructions for using NP6 host tools to prepare and quantize TFLite models. | |
| TFLite Supported Operations | Android, Yocto, Ubuntu | Public | List of TFLite operators supported by the NP6 stack on Genio 1200. | |
| ONNX Supported Operations | Yocto | Public | Under planning (Target: 2026 Q1). | Coming Soon |

Demo Applications

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| Android NP6 Demo | Android | NDA | Reference applications for TFLite and NeuroPilot optimized for NP6. | |
| Yocto AI Examples | Yocto | Public | Built-in command-line examples for TFLite and ONNX Runtime on Genio Yocto images. | |

Genio 350 (NeuroPilot 4)

Note

Genio 350 supports online inference only. Offline inference (compiling models to DLA format) is not supported on this platform.

Documentation

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| NeuroPilot4 Android User Guide | Android | NDA | Documentation covering TFLite and NeuroPilot 4 integration on Genio 350. | |
| Yocto AI User Guide | Yocto | Public | Comprehensive instructions for integrating and using TFLite and ONNX Runtime on IoT Yocto. | |
| TFLite Supported Operations | Android, Yocto, Ubuntu | Public | List of TFLite operators supported by the NP4 stack on Genio 350. | |
| ONNX Supported Operations | Yocto | Public | Under planning (Target: 2026 Q1). | Coming Soon |

Demo Applications

| Resource | Supported OS | Access Level | Description | Link |
|---|---|---|---|---|
| Android NP4 Demo | Android | NDA | Reference applications for TFLite and NeuroPilot optimized for NP4. | |
| Yocto AI Examples | Yocto | Public | Built-in command-line examples for TFLite and ONNX Runtime on Genio Yocto images. | |

Using the SDK All-In-One Bundle and Neuron SDK

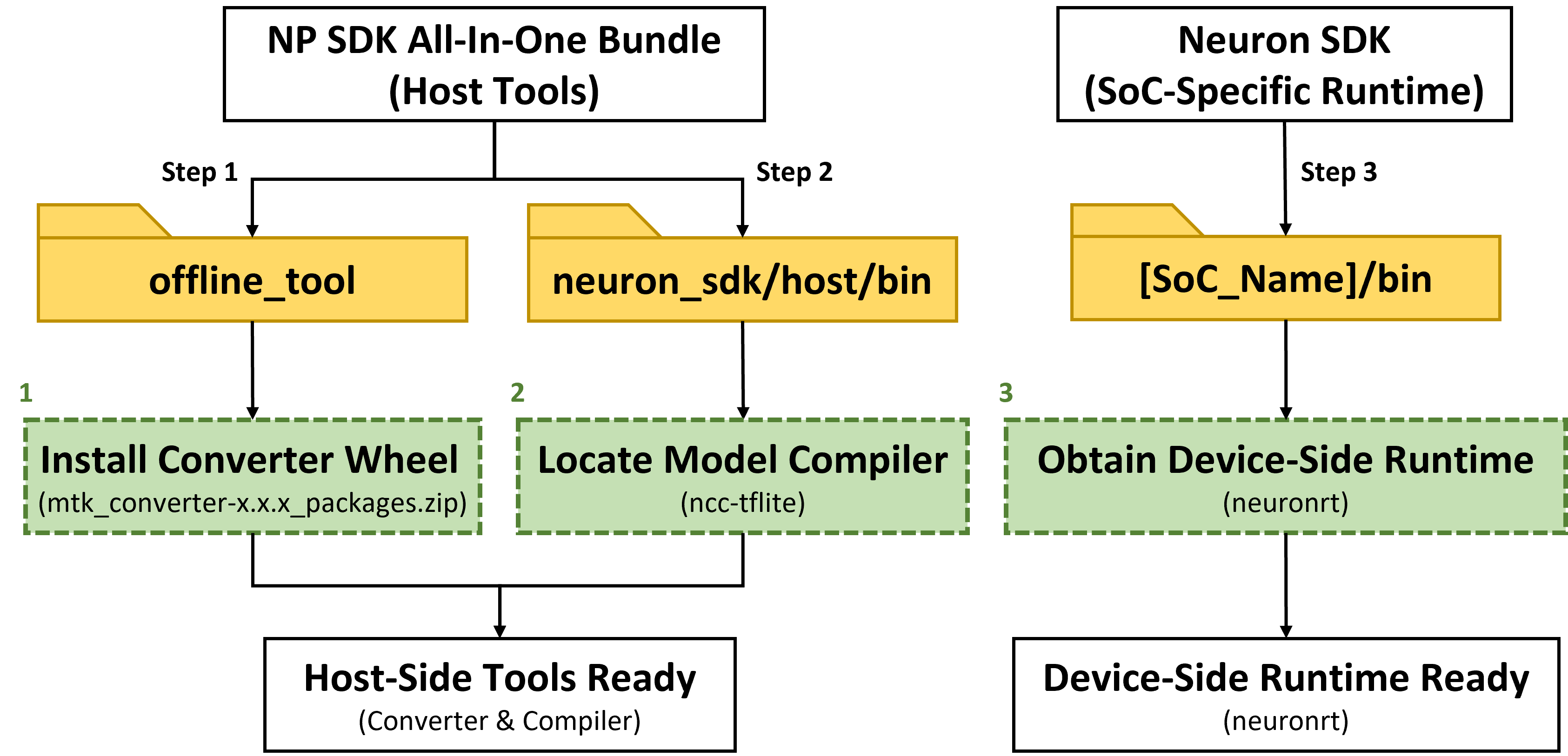

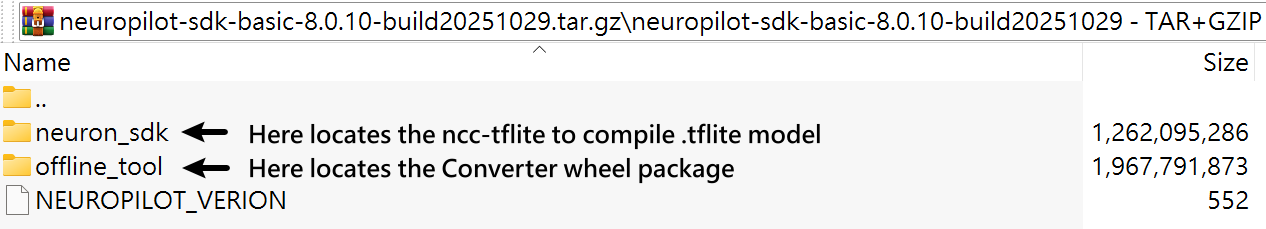

The SDK All-In-One Bundle and Neuron SDK provide the necessary offline tools for AI development. This bundle is required only for TFLite and Generative AI workflows. It is not used for ONNX Runtime development.

Although the bundle includes various utilities, IoT AI workflows primarily utilize the following components:

offline_tool: Contains the Converter. Use this tool to convert and quantize models into TFLite or NP-compatible formats. (Note: Quantizer and MLKits in this folder are for smartphone SoCs and are not supported for Genio.)

neuron_sdk: Provides the host-side ncc-tflite compiler. This tool compiles converted models into Deep Learning Archive (DLA) binaries.

The following flowchart summarizes the workflow for extracting, installing, and using the tools:

The following steps describe how to locate and use these tools:

Install the converter from the All-In-One Bundle.

Install the converter .whl from offline_tool.

Note

While you can use the converter version that matches your target platform, the recommended approach is to use the NP8 Converter with tflite_op_export_spec='npsdk_v6' for backward compatibility. Newer tool versions provide enhanced optimizations and broader operator support.

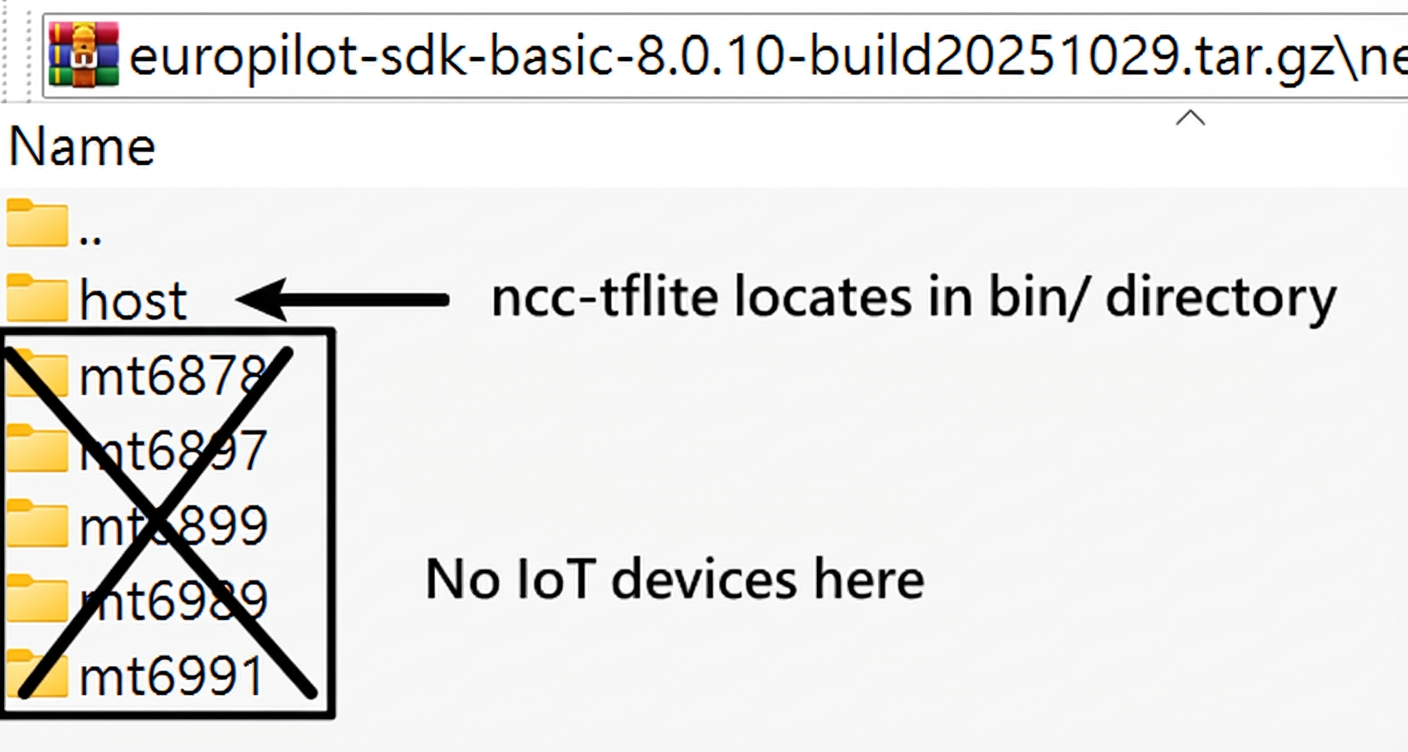
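The recommendation in the note above can be captured in a small helper that picks converter settings by target NP generation. This is a sketch under stated assumptions: the `converter_options` function and its dictionary return value are hypothetical, and only the option name tflite_op_export_spec and the value 'npsdk_v6' come from this page. The assumption that NP8 targets need no override is not confirmed here. How the option is actually passed to the converter is covered in the Converter User Guide and is not shown.

```python
def converter_options(target_np_version: int) -> dict:
    """Return extra NP8 Converter options for a target NP generation (hypothetical helper)."""
    if target_np_version == 6:
        # NP6 targets: export with the NP6 operator spec, as the note recommends.
        return {"tflite_op_export_spec": "npsdk_v6"}
    if target_np_version == 8:
        # Assumption: NP8 targets use the NP8 Converter's defaults unchanged.
        return {}
    # Other generations are not covered by the note, so refuse to guess.
    raise ValueError(f"no converter guidance for NP{target_np_version} on this page")
```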

Locate the host compiler tools in the All-In-One Bundle.

Use the neuron_sdk/host/bin directory in the All-In-One Bundle to access the compiler tools. Mobile SoC-specific folders in this bundle are not used for Genio IoT devices.

Note

The NP8 and NP6 ncc-tflite compilers are not interchangeable. Use the ncc-tflite binary that matches the target NP generation.

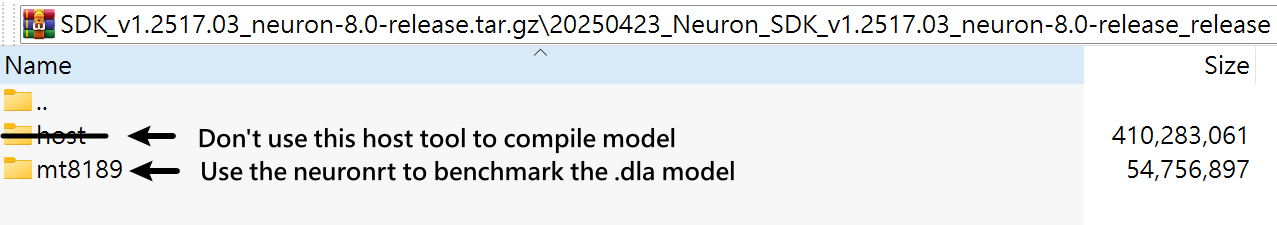
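A build script might locate the host compiler as sketched below. The neuron_sdk/host/bin directory comes from this page; the `find_host_compiler` function is a hypothetical helper, and the assumption that the binary file is named exactly ncc-tflite should be checked against the extracted bundle.

```python
from pathlib import Path


def find_host_compiler(bundle_root) -> Path:
    """Return the ncc-tflite path inside an extracted All-In-One Bundle."""
    # Directory layout as described above; the file name is an assumption.
    compiler = Path(bundle_root) / "neuron_sdk" / "host" / "bin" / "ncc-tflite"
    if not compiler.is_file():
        raise FileNotFoundError(f"ncc-tflite not found under {compiler.parent}")
    return compiler
```

Pointing `bundle_root` at the NP6 or NP8 bundle that matches the target keeps the two compiler generations from being mixed up.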

Obtain the device-side runtime from the Neuron SDK.

The Neuron SDK (separate from the All-In-One Bundle) provides the neuronrt runtime for Android devices. Use the SoC-specific directory (e.g., mt8189/bin/neuronrt) to run and benchmark .dla models on the target hardware. IoT Yocto users do not need to download this runtime separately because it is already built into the system image. Do not use the Neuron SDK’s host directory for model compilation; always use the compiler from the All-In-One Bundle instead.

Download Portals

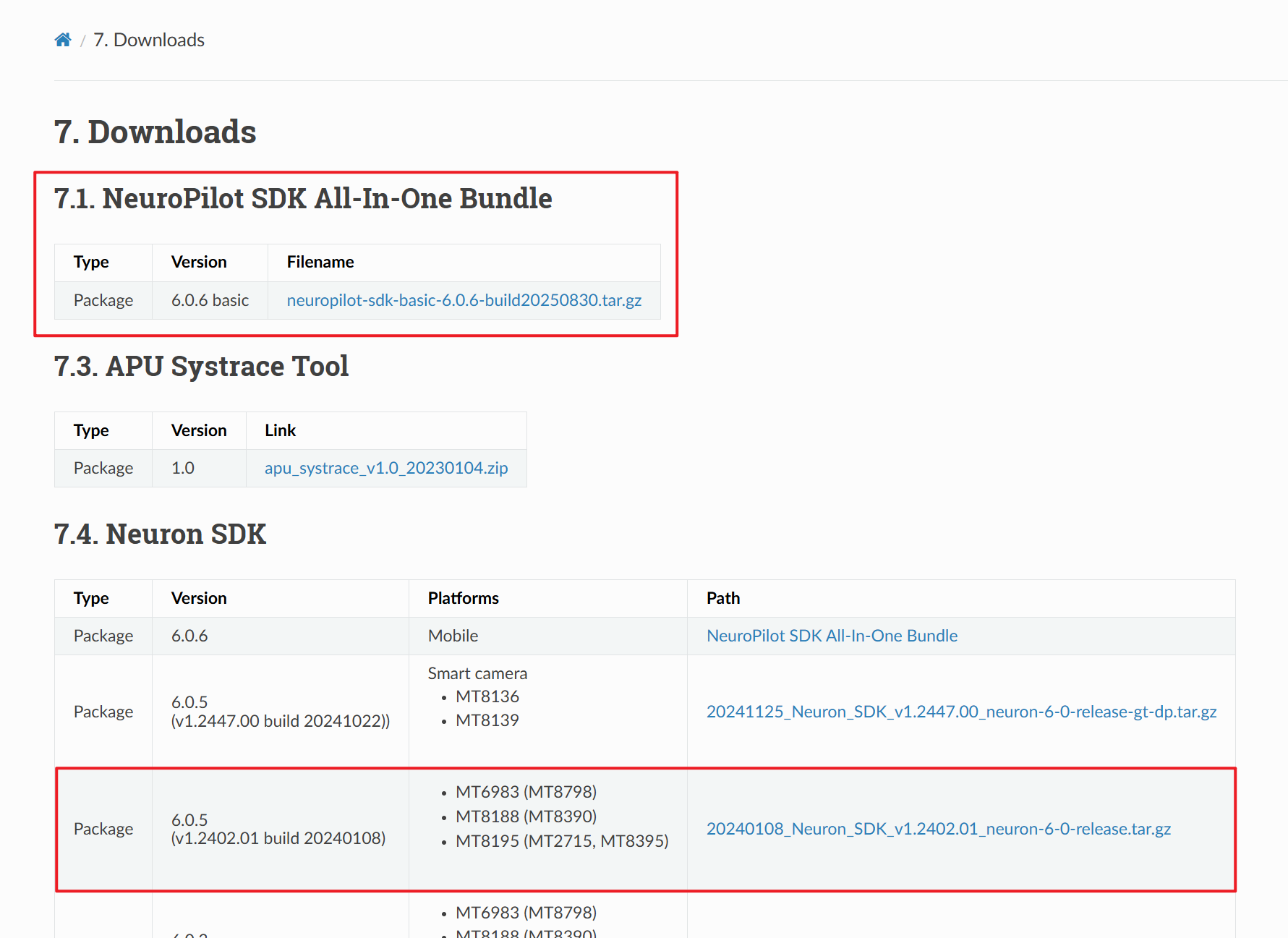

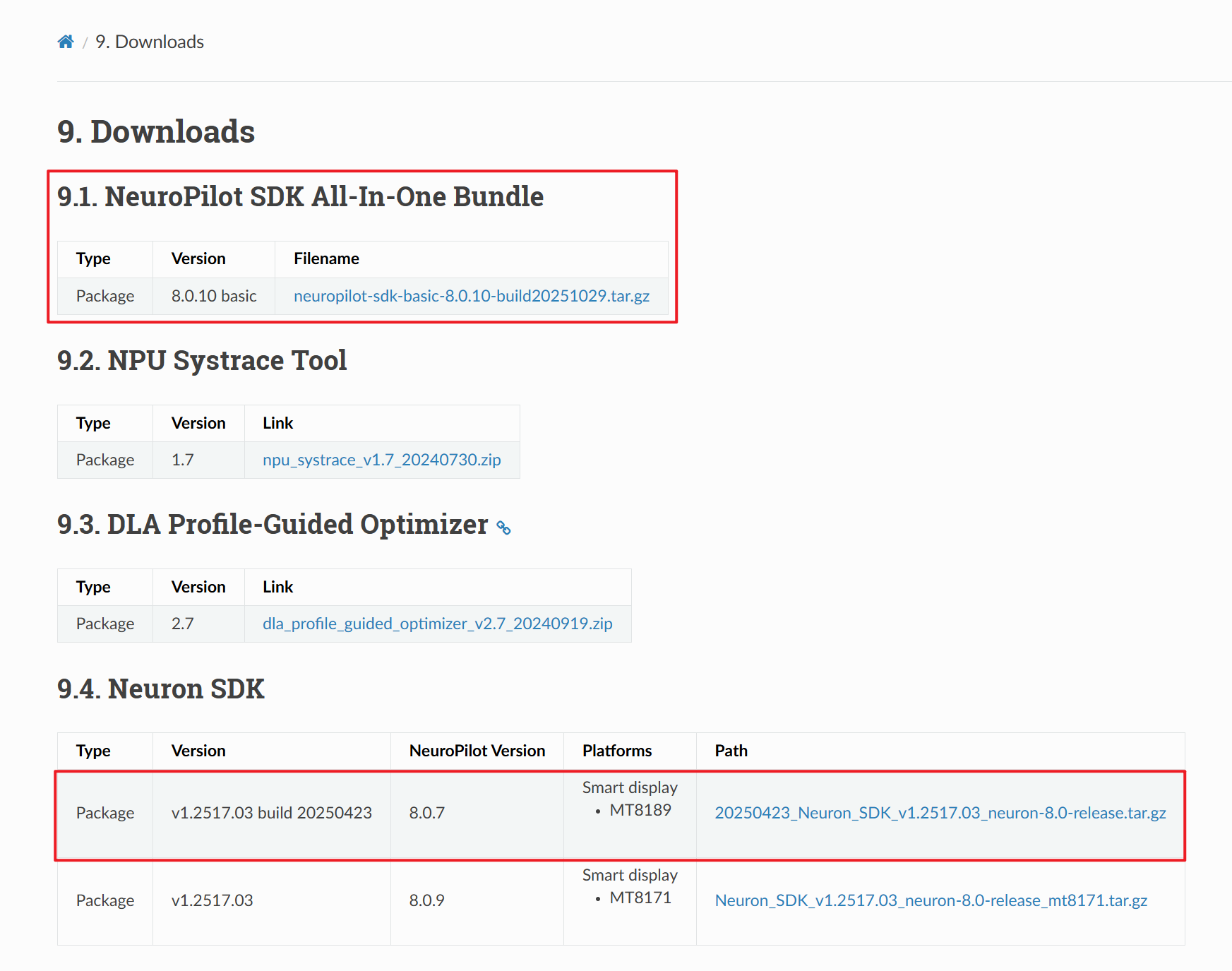

MediaTek maintains separate download portals for different NeuroPilot generations. The following figures show example pages for NP6 and NP8.

Example NeuroPilot download pages

NeuroPilot 6 All-In-One Bundle and Neuron SDK

NeuroPilot 8 All-In-One Bundle and Neuron SDK

Forum and OS Images

The following resources provide common support channels and the foundational software environment required for all Genio platforms.

Genio Forum: The primary community-driven support channel. Developers can find troubleshooting tips, share project experiences, and receive updates on the AI software stack.

OS Images: MediaTek provides reference operating system images for Evaluation Kits (EVK). These images include the necessary drivers, firmware, and pre-integrated AI runtimes (such as TFLite or ONNX Runtime) to begin development immediately.

| Resource | Supported Platform | Access Level | Description | Link |
|---|---|---|---|---|
| Genio Forum | All Genio Platforms | Public | Community forum for Genio hardware, AI software stack, and troubleshooting. | |
| Android OS Image for EVK | All Genio Platforms | Public | Pre-built Android images for Genio EVK boards with integrated AI stack. | |
| Yocto OS Image for EVK | All Genio Platforms | Public | Reference Yocto images for Genio EVK boards, including TFLite support. | |
| Ubuntu OS Image for EVK | Genio 510/700/1200/350 | Public | Ubuntu images for evaluation and desktop-style development on Genio platforms. | |