Model Zoo

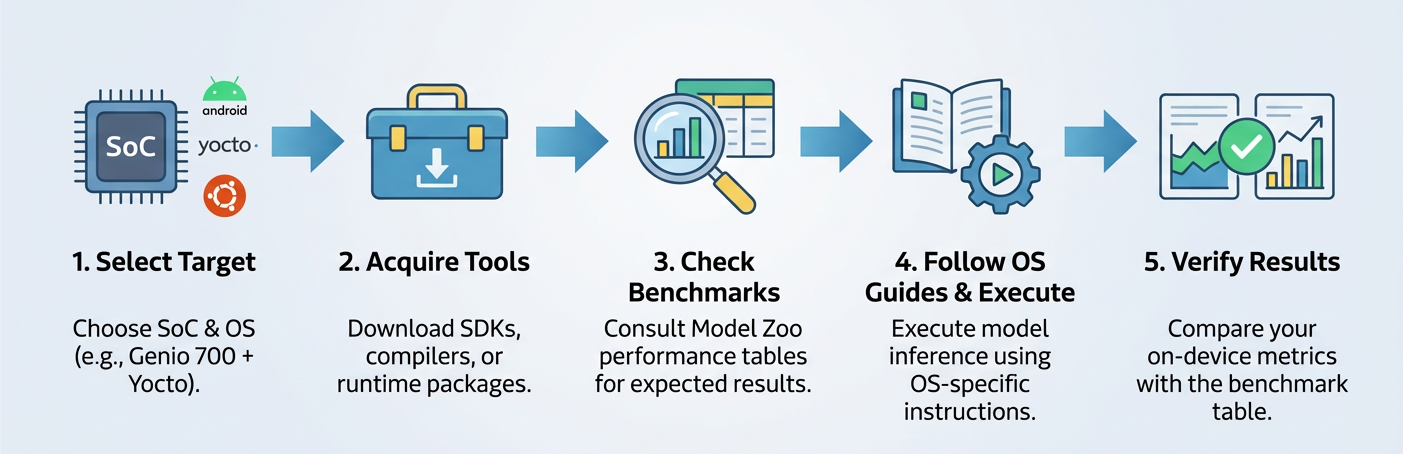

The Model Zoo provides ready-to-use deep learning models that are validated on MediaTek Genio platforms. It is designed to help developers:

Quickly evaluate AI inference performance on different hardware backends (CPU, GPU, and NPU).

Compare inference frameworks (TFLite, Neuron Runtime, ONNX Runtime) and delegates available on each platform.

Reuse models and example flows as a starting point for your own applications.

Important

The Model Zoo is a reference resource. All models in this section are intended for benchmarking and capability validation only (for example, to confirm framework and hardware accelerator support). Model accuracy is not addressed. For production deployment, developers must obtain and train models from their original sources according to the requirements of their applications.

Before using any Model Zoo content, make sure that the target board and operating system are supported for the selected framework.

Different models may be available on different combinations of:

System-on-Chip (SoC) families,

Operating systems (Android, Yocto, and Ubuntu), and

Inference frameworks (TFLite, Neuron Runtime, and ONNX Runtime).

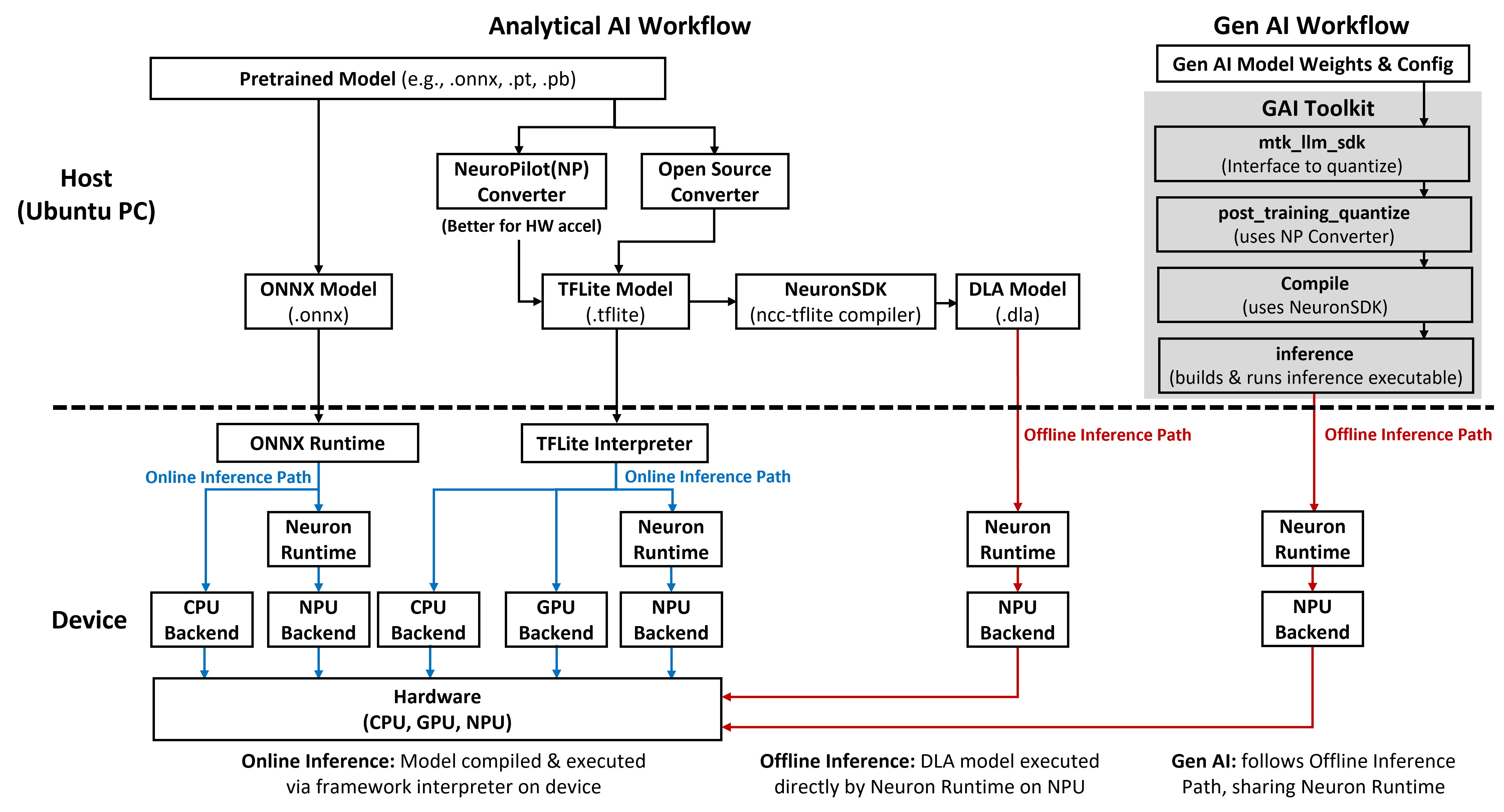

The following software architecture and support scope summary provide a quick recap of AI capabilities across Genio platforms. For detailed component descriptions, see Software Architecture. To obtain the required SDKs and host tools, refer to AI Development Resources.

| Platform | OS | TFLite - Analytical AI (Online) | TFLite - Analytical AI (Offline) | TFLite - Generative AI | ONNX Runtime - Analytical AI |
| --- | --- | --- | --- | --- | --- |
| Genio 520/720 | Android | CPU + GPU + NPU | NPU | NPU | CPU + NPU |
| Genio 520/720 | Yocto | CPU + GPU + NPU | NPU | X (ETA: 2026/Q2) | CPU + NPU |
| Genio 510/700 | Android | CPU + GPU + NPU | NPU | X | X |
| Genio 510/700 | Yocto | CPU + GPU + NPU | NPU | X | CPU |
| Genio 510/700 | Ubuntu | CPU + GPU + NPU | NPU | X | X |
| Genio 1200 | Android | CPU + GPU + NPU | NPU | X | X |
| Genio 1200 | Yocto | CPU + GPU + NPU | NPU | X | CPU |
| Genio 1200 | Ubuntu | CPU + GPU + NPU | NPU | X | X |
| Genio 350 | Android | CPU + GPU + NPU | X | X | X |
| Genio 350 | Yocto | CPU + GPU | X | X | CPU |
| Genio 350 | Ubuntu | CPU + GPU | X | X | X |
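
When scripting benchmarks across several boards, it can help to check the support matrix above programmatically before launching a run. The following is a minimal sketch that transcribes the matrix into a Python lookup. The column keys (`tflite_online`, `tflite_offline`, `tflite_genai`, `onnxruntime`) and the `backends` helper are names invented for this illustration, not part of any MediaTek tool, and cells marked `X` are treated as "no backend listed".

```python
# Shorthand column keys chosen for this sketch (not official identifiers).
COLUMNS = ("tflite_online", "tflite_offline", "tflite_genai", "onnxruntime")

# Rows transcribed from the support matrix: platform, OS, then one cell per column.
# The "X (ETA: 2026/Q2)" cell is recorded as "X" because it is not yet available.
ROWS = [
    ("Genio 520/720", "Android", "CPU+GPU+NPU", "NPU", "NPU", "CPU+NPU"),
    ("Genio 520/720", "Yocto",   "CPU+GPU+NPU", "NPU", "X",   "CPU+NPU"),
    ("Genio 510/700", "Android", "CPU+GPU+NPU", "NPU", "X",   "X"),
    ("Genio 510/700", "Yocto",   "CPU+GPU+NPU", "NPU", "X",   "CPU"),
    ("Genio 510/700", "Ubuntu",  "CPU+GPU+NPU", "NPU", "X",   "X"),
    ("Genio 1200",    "Android", "CPU+GPU+NPU", "NPU", "X",   "X"),
    ("Genio 1200",    "Yocto",   "CPU+GPU+NPU", "NPU", "X",   "CPU"),
    ("Genio 1200",    "Ubuntu",  "CPU+GPU+NPU", "NPU", "X",   "X"),
    ("Genio 350",     "Android", "CPU+GPU+NPU", "X",   "X",   "X"),
    ("Genio 350",     "Yocto",   "CPU+GPU",     "X",   "X",   "CPU"),
    ("Genio 350",     "Ubuntu",  "CPU+GPU",     "X",   "X",   "X"),
]


def parse_cell(cell):
    """Turn a cell such as 'CPU+NPU' into a set; 'X' becomes an empty set."""
    return frozenset() if cell == "X" else frozenset(cell.split("+"))


SUPPORT = {
    (platform, os_name): dict(zip(COLUMNS, map(parse_cell, cells)))
    for platform, os_name, *cells in ROWS
}


def backends(platform, os_name, path):
    """Return the backends listed for a platform/OS/inference path, or an empty set."""
    return SUPPORT.get((platform, os_name), {}).get(path, frozenset())


if __name__ == "__main__":
    print(sorted(backends("Genio 520/720", "Yocto", "onnxruntime")))
```

A benchmark script could call `backends(...)` first and skip any combination that returns an empty set.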

Model Zoo Structure

Important

Before using the Model Zoo, developers should review AI Software Architecture, which describes the different AI inference paths in detail. This background helps align model selection, benchmarking, and deployment with the appropriate inference workflow on Genio platforms.

The Model Zoo is organized into the following sections:

TFLite(LiteRT) - Analytical AI

The TFLite section documents models that use TensorFlow Lite–based runtimes and delegates, including:

Baseline CPU inference

GPU delegates

Neuron Stable Delegate paths that leverage MediaTek AI accelerators for online inference, and

Neuron SDK–based offline inference paths using compiled Deep Learning Archive (DLA) models on the NPU (for example, MDLA and VP6).

Typical contents include:

Pre-converted TFLite models and pre-compiled DLA models (for example, MobileNet, SSD, YOLOv5s variants).

Example command lines for performance benchmarking (such as benchmark_model).

Representative performance results per platform.

Cross-references to Neuron tools and workflows for compiling TFLite models to DLA and running them via Neuron Runtime.
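
As an illustration of the benchmarking command lines mentioned above, the sketch below assembles a `benchmark_model` invocation in Python. It uses only flags from the upstream TensorFlow Lite benchmark tool (`--graph`, `--num_threads`, `--warmup_runs`, `--num_runs`, `--use_gpu`); whether the build shipped on a given Genio image accepts the same flags is an assumption to verify on the target. The model file name and the `benchmark_command` helper are hypothetical.

```python
import shlex


def benchmark_command(model_path, use_gpu=False, num_threads=4,
                      warmup_runs=5, num_runs=50, binary="benchmark_model"):
    """Build an argument list for the TFLite benchmark_model tool.

    Only upstream benchmark_model flags are used here; NPU-specific options
    are deliberately left out because they are not described in this section.
    """
    args = [
        binary,
        f"--graph={model_path}",
        f"--num_threads={num_threads}",
        f"--warmup_runs={warmup_runs}",
        f"--num_runs={num_runs}",
    ]
    if use_gpu:
        # Request the GPU delegate instead of baseline CPU inference.
        args.append("--use_gpu=true")
    return args


# "mobilenet_v2.tflite" is a placeholder file name for illustration only.
cmd = benchmark_command("mobilenet_v2.tflite", use_gpu=True)
print(shlex.join(cmd))
```

The returned list can be passed to `subprocess.run` on the board, or printed and pasted into a shell session.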

For details, see:

TFLite(LiteRT) - Generative AI

The Generative AI section summarizes performance and capability data for text, image, and multimodal generative workloads on Genio platforms. This includes, depending on platform support:

Large language models (LLMs),

Vision-language models, and

Image generation or enhancement models.

Note

For Generative AI, the Model Zoo currently provides performance and capability data only. Developers who require Generative AI model deployment or accuracy tuning must contact their MediaTek representative. Access to the full Generative AI deployment toolkit requires a non-disclosure agreement (NDA) with MediaTek. Once the NDA is signed, the toolkit can be downloaded from NeuroPilot Document.

For details, see:

ONNX Runtime

The ONNX Runtime section focuses on models deployed via the ONNX Runtime on Genio platforms. Currently, this section provides:

Pretrained ONNX models for benchmarking and evaluation.

Representative performance data specifically for Genio 520 and Genio 720.

Note

ONNX Runtime support for Genio platforms is under active development. MediaTek will provide feature enhancements and expanded model coverage on a quarterly basis.
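
When collecting your own numbers to compare against the representative performance data, a small timing harness keeps the measurement method consistent across models and backends. The following is a minimal sketch using only the Python standard library; `measure_latency_ms` and `fake_inference` are hypothetical names, and the placeholder workload stands in for a real inference call.

```python
import statistics
import time


def measure_latency_ms(run_once, warmup=3, runs=20):
    """Time a zero-argument callable and return latency statistics in milliseconds."""
    # Warm-up iterations are executed but not recorded.
    for _ in range(warmup):
        run_once()

    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)

    return {
        "runs": runs,
        "mean_ms": statistics.fmean(samples),
        "median_ms": statistics.median(samples),
        "min_ms": min(samples),
        "max_ms": max(samples),
    }


def fake_inference():
    """Placeholder workload; replace with a real inference call."""
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    print(measure_latency_ms(fake_inference))
```

If the `onnxruntime` Python package is available on the target, `run_once` could wrap a call to `InferenceSession.run` with prepared inputs. Which execution providers are exposed on a given Genio image is not covered here and should be checked on the device.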

For details, see: