TFLite (LiteRT) - Generative AI

Important

MediaTek currently supports Generative AI (GAI) only on Android. The performance results and model capabilities described in this section apply to the Android software stack. The Genio 520 and Genio 720 Yocto platforms will support GAI in 2026 Q2.

This section provides performance and capability data for large language models (LLMs), vision-language models (VLMs), image generation models, and embedding models on MediaTek Genio platforms.

This section is intended as a reference for benchmarking and platform capability validation, not as a distribution channel for full training or deployment assets.

Note

For Generative AI workloads, this section provides performance data and capability information only.

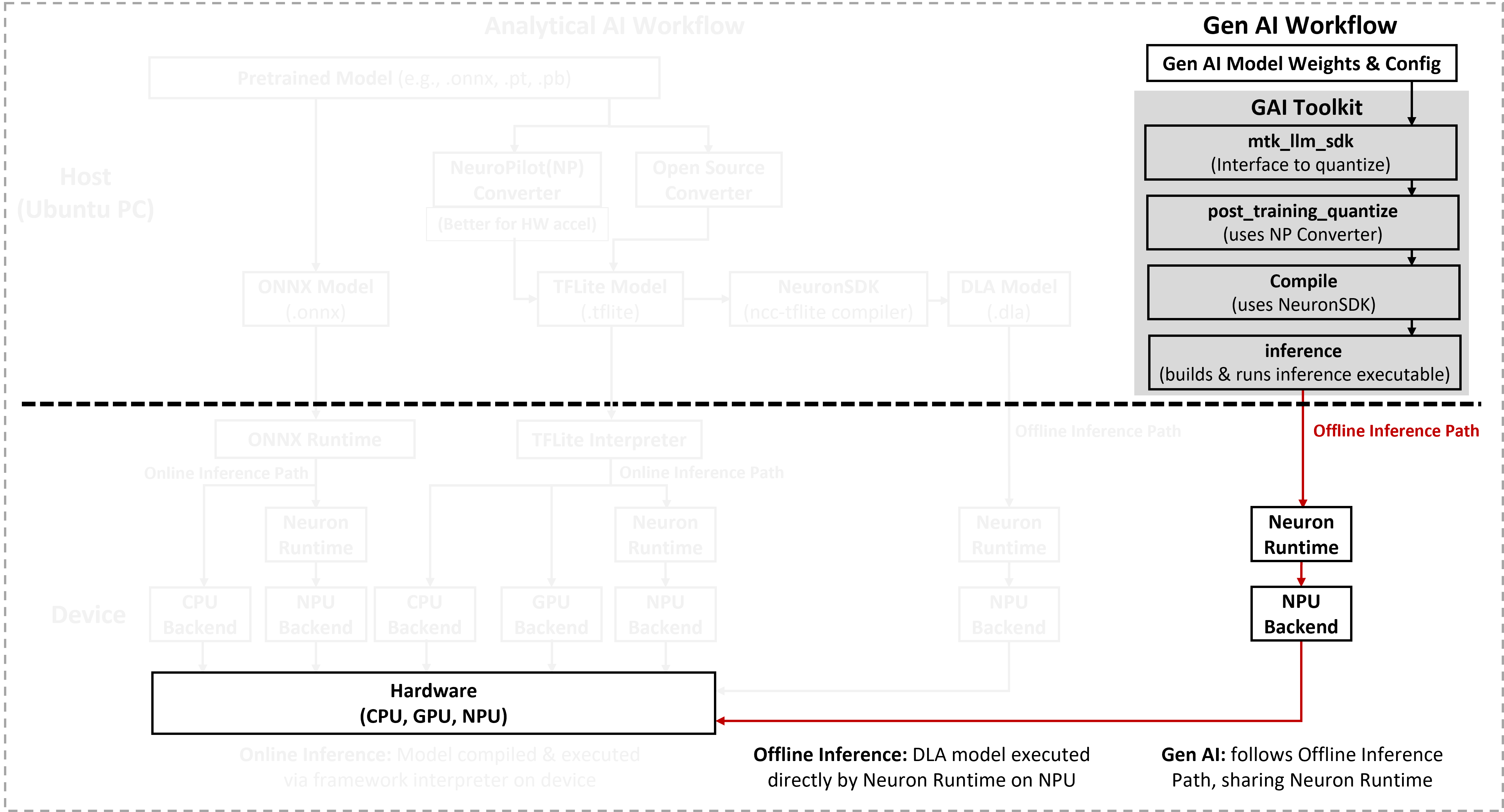

Access to the full Generative AI deployment toolkit (GAI toolkit) requires a non-disclosure agreement (NDA) with MediaTek. Once the NDA is signed, the toolkit can be downloaded from NeuroPilot Document.

Model Categories

The generative models in this section are grouped into the following categories:

- Large Language Models (LLMs) – Text-only models for tasks such as dialogue, summarization, and code generation.
- Vision-Language Models (VLMs) – Multimodal models that process both images and text (for example, image captioning or visual question answering).
- Image Generation and Enhancement – Models such as Stable Diffusion and other diffusion or transformer-based pipelines used for image synthesis, editing, or super-resolution.
- Embedding and Encoder Models – Models such as CLIP encoders that compute joint image-text embeddings for retrieval or ranking tasks.
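Embedding models support retrieval by mapping images and text into a shared vector space where related pairs lie close together; candidates are commonly ranked by cosine similarity. The sketch below assumes the embeddings have already been produced by an image encoder and a text encoder (for example, the CLIP encoders benchmarked later in this section). The helper names and the three-dimensional toy vectors are hypothetical; real CLIP embeddings have hundreds of dimensions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def rank_images(
    text_embedding: Sequence[float],
    image_embeddings: Dict[str, Sequence[float]],
) -> List[Tuple[str, float]]:
    """Return (image name, score) pairs sorted from most to least similar."""
    scores = [
        (name, cosine_similarity(text_embedding, embedding))
        for name, embedding in image_embeddings.items()
    ]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical toy embeddings standing in for real encoder outputs.
query = [1.0, 0.0, 0.0]
gallery = {
    "cat.jpg": [0.9, 0.1, 0.0],
    "car.jpg": [0.1, 0.9, 0.2],
}
print(rank_images(query, gallery)[0][0])  # cat.jpg
```

Normalizing each embedding once up front reduces this to a dot product per candidate, which is the usual choice when ranking large galleries.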

Supported Models on Genio Products

Platform-specific model lists and performance data are provided in the following pages:

Performance Notes and Limitations

For Generative AI workloads, measured performance on Genio 520 is generally lower than on Genio 720.

This gap is primarily due to DRAM bandwidth differences between the two platforms and might affect:

- Token generation speed for LLMs.
- End-to-end latency for diffusion-based image generation.
- Multimodal pipelines that exchange large intermediate tensors between subsystems.
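Why bandwidth matters most for token generation can be seen from a memory-bound estimate: if every new token requires reading the full set of weights from DRAM, the achievable decode rate is capped at roughly bandwidth divided by model size in bytes. The function below is a back-of-envelope sketch, not a MediaTek performance model. The bandwidth figures in the example are hypothetical placeholders, not Genio specifications, and KV-cache traffic, on-chip caching, and compute limits are ignored.

```python
def decode_tokens_per_second_upper_bound(
    params_billion: float,
    bytes_per_param: float,
    dram_bandwidth_gb_s: float,
) -> float:
    """Memory-bound ceiling on autoregressive decode speed.

    Assumes every generated token streams all model weights from DRAM once,
    and ignores KV-cache traffic, on-chip caching, and compute limits.
    """
    if min(params_billion, bytes_per_param, dram_bandwidth_gb_s) <= 0:
        raise ValueError("all inputs must be positive")
    bytes_per_token = params_billion * 1e9 * bytes_per_param
    return dram_bandwidth_gb_s * 1e9 / bytes_per_token


# Hypothetical inputs: a 1.5B-parameter model with 4-bit weights
# (0.5 bytes per parameter) on two made-up bandwidth figures.
fast = decode_tokens_per_second_upper_bound(1.5, 0.5, 20.0)
slow = decode_tokens_per_second_upper_bound(1.5, 0.5, 16.0)
print(f"{fast:.1f} vs {slow:.1f} tokens/s")  # 26.7 vs 21.3 tokens/s
```

Because this ceiling scales linearly with bandwidth, a platform with 20% less bandwidth has a 20% lower ceiling, regardless of model size.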

The following comparative data is provided for reference.

Important

The tables in this section provide representative numbers only. To obtain the most accurate performance for a specific use case, developers must deploy and run the workload directly on the target platform under the intended system configuration.
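Reproducing numbers on the target device starts with timing the two phases of LLM inference separately. The sketch below is one way to do that under stated assumptions: it defines throughput as tokens processed divided by wall-clock time for the prompt (prefill) phase and the token-by-token (decode) phase, which may not match exactly how the tables in this section were produced. The `prefill` and `decode_step` callables are hypothetical placeholders for the application's runtime, not a GAI toolkit API, and the injectable `clock` lets the function be tested without a device.

```python
import time
from typing import Callable, Sequence, Tuple


def measure_llm_throughput(
    prefill: Callable[[Sequence[int]], None],
    decode_step: Callable[[], int],
    prompt_tokens: Sequence[int],
    max_new_tokens: int,
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[float, float]:
    """Return (prefill tokens/s, decode tokens/s) for one request.

    `prefill` and `decode_step` are hypothetical wrappers around whatever
    on-device runtime is in use; they are not a MediaTek API.
    """
    if not prompt_tokens or max_new_tokens < 1:
        raise ValueError("need a non-empty prompt and at least one new token")
    start = clock()
    prefill(prompt_tokens)           # process the whole prompt at once
    prefill_done = clock()
    for _ in range(max_new_tokens):  # generate one token per step
        decode_step()
    decode_done = clock()
    prefill_tps = len(prompt_tokens) / (prefill_done - start)
    decode_tps = max_new_tokens / (decode_done - prefill_done)
    return prefill_tps, decode_tps


# Example with stand-in callables and a fake clock (0 s, 2 s, 6 s).
ticks = iter([0.0, 2.0, 6.0])
print(measure_llm_throughput(
    prefill=lambda tokens: None,
    decode_step=lambda: 0,
    prompt_tokens=list(range(128)),
    max_new_tokens=32,
    clock=lambda: next(ticks),
))  # (64.0, 8.0)
```

In practice, discard a few warm-up runs, average several measured runs, and keep prompt length, output length, and system state (thermal state, background load) fixed so that results are comparable across platforms.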

LLM Performance Comparison

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| DeepSeek-R1-Distill-Llama-8B | 36.65 | Not supported | 425.79 |
| DeepSeek-R1-Distill-Qwen-1.5B | 341.69 | 276.82 | 1057.25 |
| DeepSeek-R1-Distill-Qwen-7B | 69.23 | Not supported | 448.17 |
| gemma2-2b-it | 193.39 | Not supported | 891 |
| gemma3-1B (Text Only) | 603.15 | Not tested | Not tested |
| gemma3-4B (Text Only) | 156.89 | Not tested | Not tested |
| internlm2-chat-1.8b | 276.22 | 243.61 | 1544.7 |
| llama3-8b | 56.5 | Not supported | 426.13 |
| llama3.2-1B-Instruct | 401.29 | 335.92 | 2093.61 |
| llama3.2-3B-Instruct | 154.56 | Not supported | 1022.95 |
| Qwen2-0.5B-Instruct | 762.46 | 641.7 | 3010.84 |
| Qwen2-1.5B-Instruct | 341.99 | 274.5 | 1616.22 |
| Qwen2-7B-Instruct | 70.42 | Not supported | 474.38 |
| Qwen1.5-1.8B-Chat | 310.64 | 268.23 | 1516.5 |
| Qwen2.5-1.5B-Instruct | 341.42 | 269.87 | 1621.85 |
| Qwen2.5-3B-Instruct | 162.48 | Not supported | 751.06 |
| Qwen2.5-7B-Instruct | 70.55 | Not supported | 471.95 |
| Qwen3 1.7B | 233.03 | 229.63 | 1069.16 |
| Phi-3-mini-4k-instruct | 129.6 | Not supported | 828.87 |
| MiniCPM-2B-sft-bf16-llama-format | 194.79 | 153.14 | 886.72 |
| medusa_v1_0_vicuna_7b_v1.5 | 91.82 | Not supported | 501.05 |
| vicuna1.5-7b-tree-speculative-decoding-plus | 84.9 | Not supported | 454.58 |
| llava1.5-7b-speculative-decoding | 73.1 | Not supported | 267.98 |
| baichuan-7b-int8-cache | 81.18 | Not supported | 561.76 |
| baichuan-7b | 79.75 | Not supported | 536.64 |

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| DeepSeek-R1-Distill-Llama-8B | 4.58 | Not supported | 11.36 |
| DeepSeek-R1-Distill-Qwen-1.5B | 11.76 | 9.18 | 25.68 |
| DeepSeek-R1-Distill-Qwen-7B | 4.68 | Not supported | 11.69 |
| gemma2-2b-it | 8.75 | Not supported | 21.37 |
| gemma3-1B (Text Only) | 26.49 | Not tested | Not tested |
| gemma3-4B (Text Only) | 8.01 | Not tested | Not tested |
| internlm2-chat-1.8b | 17.55 | 14.48 | 42.39 |
| llama3-8b | 4.7 | Not supported | 11.51 |
| llama3.2-1B-Instruct | 24.53 | 20.59 | 61.14 |
| llama3.2-3B-Instruct | 10.58 | Not supported | 25.05 |
| Qwen2-0.5B-Instruct | 50.06 | 42.43 | 77.87 |
| Qwen2-1.5B-Instruct | 19.56 | 15.32 | 38.31 |
| Qwen2-7B-Instruct | 4.88 | Not supported | 11.64 |
| Qwen1.5-1.8B-Chat | 9.9 | 8.63 | 31.38 |
| Qwen2.5-1.5B-Instruct | 18.43 | 15.11 | 38.57 |
| Qwen2.5-3B-Instruct | 10.31 | Not supported | 20.87 |
| Qwen2.5-7B-Instruct | 4.89 | Not supported | 11.74 |
| Qwen3 1.7B | 10.91 | 10.53 | 23.42 |
| Phi-3-mini-4k-instruct | 7.32 | Not supported | 18.87 |
| MiniCPM-2B-sft-bf16-llama-format | 7.69 | 6.48 | 22.28 |
| medusa_v1_0_vicuna_7b_v1.5 | 10.56 | Not supported | 22.79 |
| vicuna1.5-7b-tree-speculative-decoding-plus | 12.65 | Not supported | 22.72 |
| llava1.5-7b-speculative-decoding | 7.28 | Not supported | 6.78 |
| baichuan-7b-int8-cache | 4.24 | Not supported | 11.37 |
| baichuan-7b | 4.18 | Not supported | 10.56 |
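The Genio 520 versus Genio 720 gap can be quantified by dividing the two columns per model. The sketch below copies the rows from the second LLM table above for which both platforms have results, plus one unsupported row to show how it is skipped. It assumes that higher values are better in that table, which is consistent with MT8893 reporting the highest values; the dictionary and function names are illustrative only.

```python
from typing import Dict, Optional, Tuple

# (Genio 720, Genio 520) values copied from the second LLM table;
# None marks a model that is not supported on Genio 520.
LLM_ROWS: Dict[str, Tuple[float, Optional[float]]] = {
    "DeepSeek-R1-Distill-Qwen-1.5B": (11.76, 9.18),
    "internlm2-chat-1.8b": (17.55, 14.48),
    "llama3-8b": (4.7, None),
    "llama3.2-1B-Instruct": (24.53, 20.59),
    "Qwen2-0.5B-Instruct": (50.06, 42.43),
    "Qwen2-1.5B-Instruct": (19.56, 15.32),
    "Qwen1.5-1.8B-Chat": (9.9, 8.63),
    "Qwen2.5-1.5B-Instruct": (18.43, 15.11),
    "Qwen3 1.7B": (10.91, 10.53),
    "MiniCPM-2B-sft-bf16-llama-format": (7.69, 6.48),
}


def genio520_relative_to_720(
    rows: Dict[str, Tuple[float, Optional[float]]],
) -> Dict[str, float]:
    """Genio 520 value as a fraction of the Genio 720 value (higher is better)."""
    return {
        name: g520 / g720
        for name, (g720, g520) in rows.items()
        if g520 is not None  # skip models not supported on Genio 520
    }


ratios = genio520_relative_to_720(LLM_ROWS)
print(f"{min(ratios.values()):.0%} to {max(ratios.values()):.0%}")  # 78% to 97%
```

For these rows, Genio 520 reaches roughly 78% to 97% of the Genio 720 value. The same calculation applies unchanged to the first LLM table.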

VLM Performance Comparison

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| Qwen2.5 VL 3B | 0.21 | Not supported | 0.10 |
| InternVL3-1B | 1.74 | 1.94 | 0.51 |

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| Qwen2.5 VL 3B | 100.07 | Not supported | 339.90 |
| InternVL3-1B | 74.75 | 65.23 | 183.64 |

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| Qwen2.5 VL 3B | 4.78 | Not supported | 10.13 |
| InternVL3-1B | 6.16 | 4.52 | 14.09 |

Stable Diffusion Performance Comparison

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| Stable Diffusion v.1.5 | 25816 | 33754 | 7075 |
| Stable Diffusion v.1.5 controlnet | 33642 | 47923 | 9395 |
| Stable_diffusion_v1_5_controlnet_lora | 34148 | Not supported | 10268 |
| Stable_diffusion_v1.5_2lora | 35978 | 45619 | 11487 |
| Stable Diffusion v2.1 base model with controlnet | 31183 | Not supported | 6969 |
| Stable Diffusion v1.5 LCM Ipadaptor | 10645 | 11378 | 2254 |
| Stable_diffusion_lcm_multiDiffusion | 29104 | 34731 | 7439 |

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| Stable Diffusion v.1.5 | 24813 | 29001 | 6132 |
| Stable Diffusion v.1.5 controlnet | 32294 | 37581 | 8035 |
| Stable_diffusion_v1_5_controlnet_lora | 32454 | Not supported | 8472 |
| Stable_diffusion_v1.5_2lora | 33195 | 39198 | 10130 |
| Stable Diffusion v2.1 base model with controlnet | 29828 | Not supported | 5451 |
| Stable Diffusion v1.5 LCM Ipadaptor | 5861 | 4978 | 1077 |
| Stable_diffusion_lcm_multiDiffusion | 28127 | 32585 | 6698 |
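For the Stable Diffusion tables the direction is reversed. Assuming the values are latencies (lower is better, consistent with MT8893 reporting the smallest numbers), the useful ratio is Genio 520 latency divided by Genio 720 latency. The sketch below applies this to the second Stable Diffusion table above and flags any row where Genio 520 is not slower; the names `SD_LATENCY`, `slowdown`, and `faster_on_genio_520` are illustrative only.

```python
from typing import Dict, List, Optional, Tuple

# (Genio 720, Genio 520) values copied from the second Stable Diffusion table.
# Assumption: values are latencies, so lower is better. None = not supported.
SD_LATENCY: Dict[str, Tuple[float, Optional[float]]] = {
    "Stable Diffusion v.1.5": (24813, 29001),
    "Stable Diffusion v.1.5 controlnet": (32294, 37581),
    "Stable_diffusion_v1_5_controlnet_lora": (32454, None),
    "Stable_diffusion_v1.5_2lora": (33195, 39198),
    "Stable Diffusion v1.5 LCM Ipadaptor": (5861, 4978),
    "Stable_diffusion_lcm_multiDiffusion": (28127, 32585),
}


def slowdown(rows: Dict[str, Tuple[float, Optional[float]]]) -> Dict[str, float]:
    """Genio 520 latency / Genio 720 latency; above 1.0 means Genio 520 is slower."""
    return {
        name: g520 / g720
        for name, (g720, g520) in rows.items()
        if g520 is not None
    }


def faster_on_genio_520(rows: Dict[str, Tuple[float, Optional[float]]]) -> List[str]:
    """Rows where Genio 520 reports the lower latency."""
    return [name for name, factor in slowdown(rows).items() if factor < 1.0]


print(faster_on_genio_520(SD_LATENCY))  # ['Stable Diffusion v1.5 LCM Ipadaptor']
```

In this table the other supported pipelines are roughly 16% to 18% slower on Genio 520, while the LCM Ipadaptor row reports about 15% lower latency on Genio 520, which is one more reason to measure each workload on the target platform.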

CLIP Performance Comparison

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| img_encoder_proj_clip_vit_large_dynamic | 567.61 | 662.22 | 358.61 |
| img_encoder_proj_openclip_vit_big_g_dynamic | 12035.52 | Not supported | 1390.56 |
| img_encoder_proj_openclip_vit_h_dynamic | 1440.20 | Not supported | 591.93 |
| text_encoder_clip_vit_large | 455.08 | 748.45 | 308.72 |
| text_encoder_openclip_vit_h | 750.70 | Not supported | 510.92 |

| Model | Genio 720 | Genio 520 | MT8893 |
|---|---|---|---|
| img_encoder_proj_clip_vit_large_dynamic | 257.39 | 296.84 | 51.14 |
| img_encoder_proj_openclip_vit_big_g_dynamic | 3142.96 | Not supported | 517.13 |
| img_encoder_proj_openclip_vit_h_dynamic | 881.65 | Not supported | 147.47 |
| text_encoder_clip_vit_large | 38.99 | 45.56 | 18.94 |
| text_encoder_openclip_vit_h | 119.77 | Not supported | 48.49 |

Deployment and Source Models

The generative models referenced in this section are primarily intended for benchmarking and capability validation.

This section does not address model accuracy or qualitative output quality.

MediaTek does not redistribute original training datasets or checkpoint files for third-party or open-source models.

For production deployment, developers must:

- Obtain the original models from their official sources.
- Follow the applicable licenses and usage terms.
- Perform any fine-tuning or post-training optimization that their application requires.