Handling Unsupported Operators and Models

When deploying AI models on Genio platforms, you may encounter errors if the model contains operators or data types not supported by the current NeuroPilot (NP) version or hardware generation.

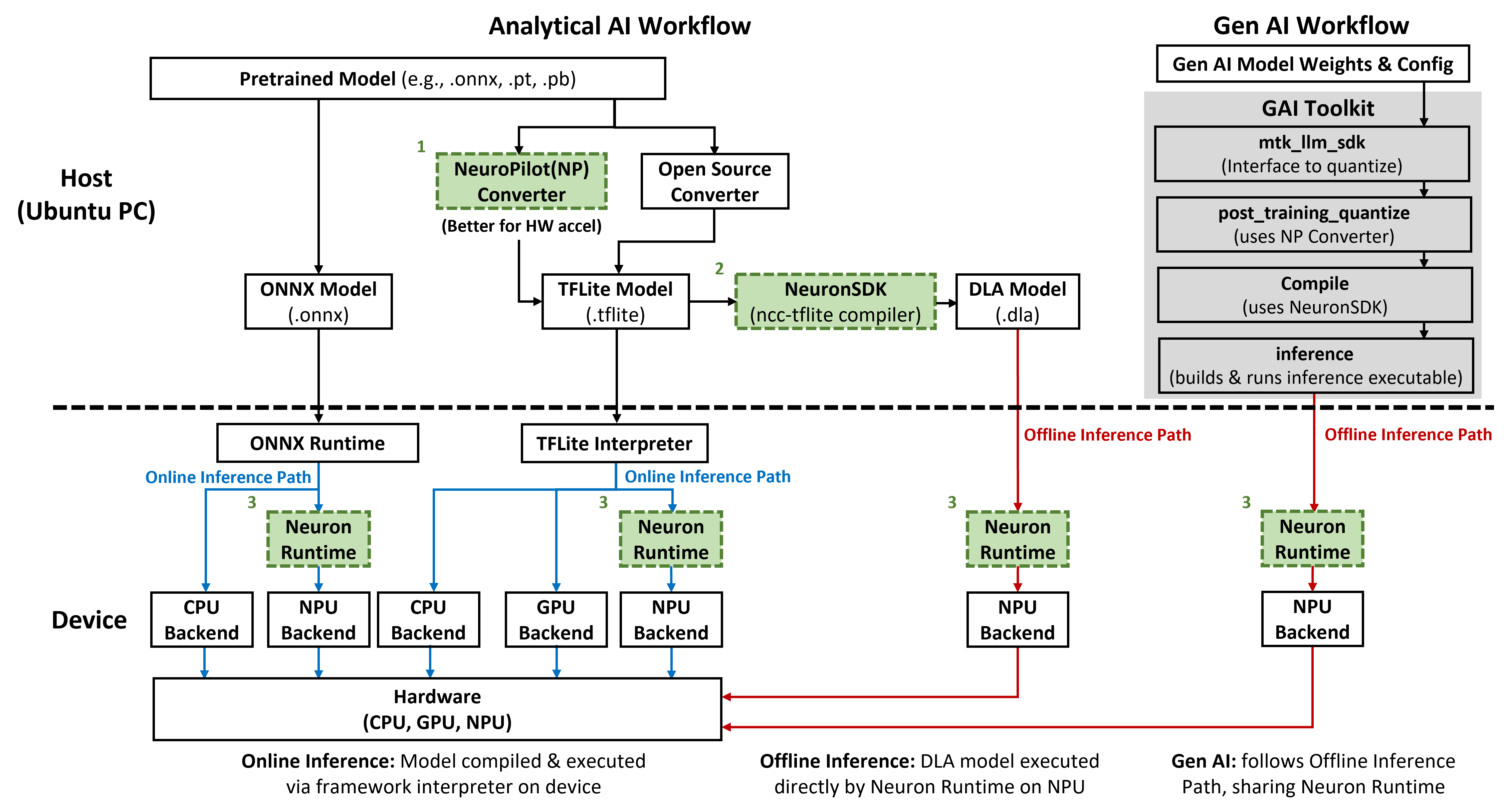

Understanding where an error occurs in the workflow is key to resolving it. The following figure shows the main tools in the AI stack. The numbered markers correspond to the error types described below.

Common Failure Scenarios by Tool

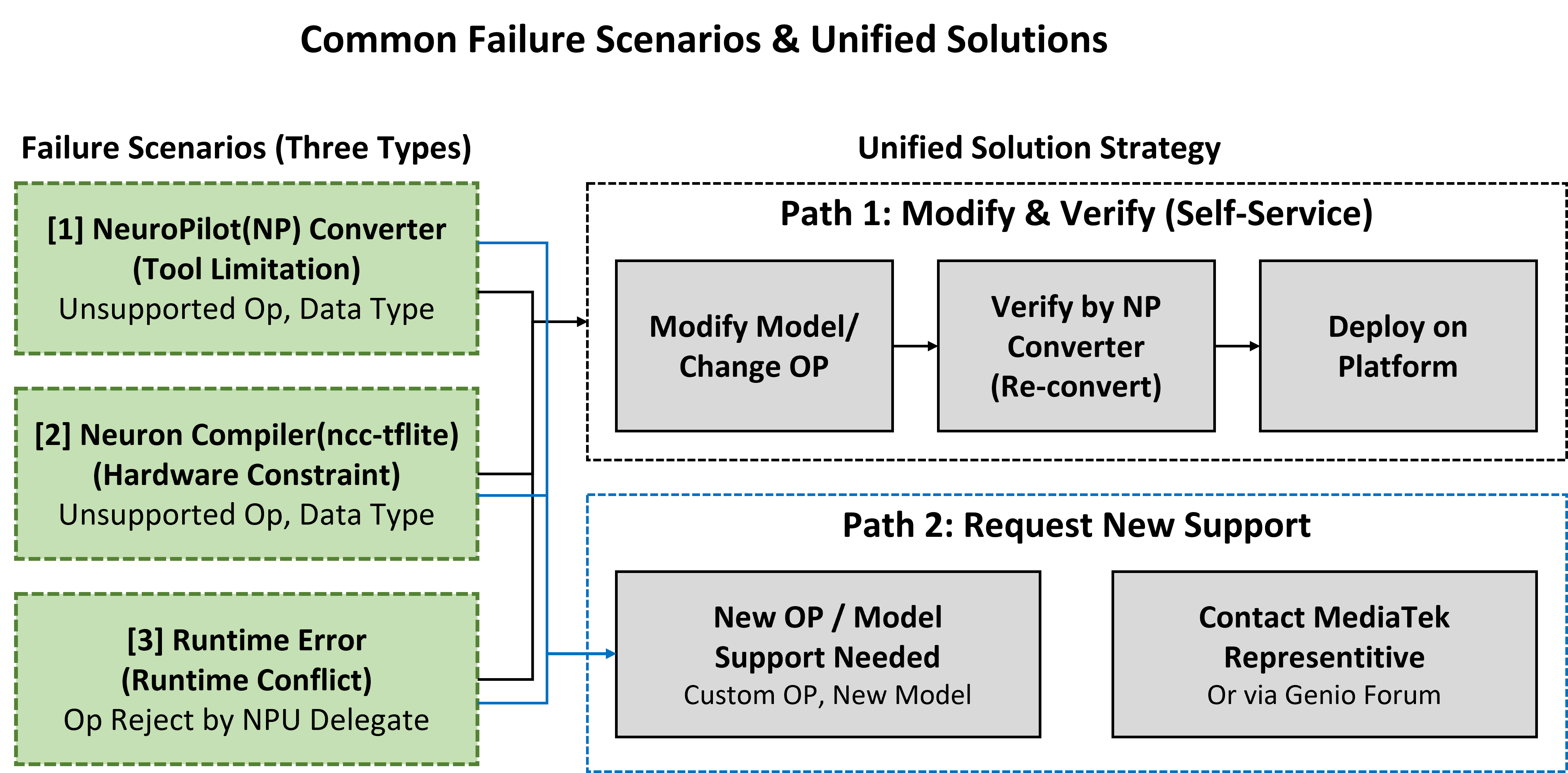

Although errors can surface at different stages of the AI workflow (conversion, compilation, or runtime), the resolution strategies are the same. As shown in the following flowchart, developers typically follow one of two paths: modifying the model to fit the existing hardware and tool constraints (Self-Service), or requesting official support for the new operators.

The following log snippets illustrate common error messages encountered during these scenarios:

1. NP Converter (Host-side Conversion)

This error (marked as [1] in the figure) occurs during the quantization or conversion phase on the PC. It indicates that the NP Converter does not recognize certain operators from the source framework (e.g., ONNX, TensorFlow).

Example Log:

Converter RuntimeError: Found unsupported operators of (domain, op_type, opset version):

{('', 'Where', 16), ('', 'Reciprocal', 16), ('', 'Sin', 16), ('', 'Erf', 16)}.

Please contact the maintainer for coverage enhancement.

Description: The converter cannot map these specific operations to the TFLite schema or apply the required quantization logic.
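Before running the converter, it can help to list which operators in the source model fall outside a known-supported set, so the gaps are found up front. The sketch below reports them as `(domain, op_type, opset version)` tuples, matching the shape of the log above. It assumes the `onnx` Python package for loading, and `SUPPORTED_OPS` is a hypothetical placeholder: the authoritative list is the NP Converter operator coverage documented for your SDK version.

```python
"""Sketch: report ONNX operators that a converter may not support.

SUPPORTED_OPS is a hypothetical placeholder, NOT the real NP Converter
coverage list. Replace it with the operators documented for your SDK.
"""
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical subset of supported (domain, op_type) pairs; "" is the default ONNX domain.
SUPPORTED_OPS: Set[Tuple[str, str]] = {
    ("", "Conv"), ("", "Relu"), ("", "Add"), ("", "MatMul"), ("", "Sigmoid"),
}

OpKey = Tuple[str, str, int]  # (domain, op_type, opset version)


def find_unsupported(nodes: Iterable[Tuple[str, str]],
                     opsets: Dict[str, int],
                     supported: Set[Tuple[str, str]] = SUPPORTED_OPS) -> Set[OpKey]:
    """Return (domain, op_type, opset) for every node type not in `supported`."""
    missing = set()
    for domain, op_type in nodes:
        if (domain, op_type) not in supported:
            # -1 marks a domain with no opset_import entry.
            missing.add((domain, op_type, opsets.get(domain, -1)))
    return missing


def load_onnx_nodes(path: str) -> Tuple[List[Tuple[str, str]], Dict[str, int]]:
    """Read node types and per-domain opset versions from an ONNX file (needs `onnx`)."""
    import onnx  # imported lazily so the core logic runs without onnx installed

    model = onnx.load(path)
    # ONNX may record the default domain as "" or "ai.onnx"; normalize both to "".
    opsets = {("" if imp.domain == "ai.onnx" else imp.domain): imp.version
              for imp in model.opset_import}
    nodes = [("" if n.domain == "ai.onnx" else n.domain, n.op_type)
             for n in model.graph.node]
    return nodes, opsets
```

For example, `find_unsupported(*load_onnx_nodes("model.onnx"))` returns a set such as `{("", "Where", 16), ("", "Erf", 16)}` for the operators missing from the placeholder list.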

2. Neuron SDK / ncc-tflite (Host-side Compilation)

This error (marked as [2] in the figure) occurs when using the ncc-tflite compiler to generate a .dla file for offline inference. It means the operator is valid in TFLite but cannot be accelerated by the target NPU hardware.

Example Log:

ncc-tflite: OP[46]: LOGISTIC

├ MDLA: the rank of shape should be in range [0, 4]

├ EDMA: unsupported operation

ncc-tflite ERROR: Failed to compile the model for NPU acceleration.

Description: The compiler determines that the available hardware engines (here, MDLA and EDMA) cannot execute the operator because of hardware-specific constraints, such as limits on tensor rank or unsupported operation logic. For a step-by-step walkthrough, see Example: Pruning the Model.
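The MDLA message above states that the tensor rank must be within [0, 4]. A quick pre-check is to scan tensor shapes before compiling. The sketch below assumes TensorFlow's `tf.lite.Interpreter` for reading shapes and treats 4 as the maximum rank; that value is taken from this example log and may differ between MDLA versions. The helper `fold_to_rank` illustrates one common workaround for elementwise operators such as LOGISTIC, merging leading dimensions so the tensor fits the limit. It is an illustrative technique, not the procedure described in Example: Pruning the Model.

```python
"""Sketch: flag tensors whose rank exceeds an assumed NPU limit.

MAX_RANK = 4 is taken from the example MDLA message; check the limit for
your MDLA version in the Supported Operations documentation.
"""
from math import prod
from typing import Dict, List, Sequence

MAX_RANK = 4  # assumption from "the rank of shape should be in range [0, 4]"


def over_rank_tensors(details: List[Dict], max_rank: int = MAX_RANK) -> List[str]:
    """Return the names of tensors whose rank is above max_rank."""
    return [d["name"] for d in details if len(d["shape"]) > max_rank]


def fold_to_rank(shape: Sequence[int], max_rank: int = MAX_RANK) -> List[int]:
    """Merge leading dimensions so the shape has at most max_rank dims.

    Only valid around elementwise ops (e.g. LOGISTIC), where reshaping before
    and after the op leaves the result unchanged. The element count is kept.
    """
    shape = list(shape)
    if len(shape) <= max_rank:
        return shape
    if max_rank < 1:
        raise ValueError("max_rank must be at least 1 to fold a non-scalar shape")
    extra = len(shape) - max_rank + 1  # number of leading dims merged into one
    return [prod(shape[:extra])] + shape[extra:]


def load_tensor_details(model_path: str) -> List[Dict]:
    """Read tensor names and shapes from a .tflite file (needs TensorFlow)."""
    import tensorflow as tf  # lazy import: only needed when inspecting a real model

    interpreter = tf.lite.Interpreter(model_path=model_path)
    return [{"name": d["name"], "shape": list(d["shape"])}
            for d in interpreter.get_tensor_details()]
```

In the source model, applying `fold_to_rank` corresponds to reshaping the input to the folded shape, applying the activation, and reshaping the output back to the original shape.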

3. Runtime (Device-side Inference)

This error (marked as [3] in the figure) occurs on the Genio device during online inference. The Neuron Delegate inspects the model graph and rejects operators it cannot run on the NPU; those operators are handed back to the CPU.

Example Log:

TFLite Runtime ERROR: OP CAST (v1) is not supported

(Output type should be one of kTfLiteFloat32, kTfLiteInt32, kTfLiteUInt8, kTfLiteInt8.)

Description: Although the model may load, the runtime's hardware-specific constraints prevent the NPU from executing the operator. Depending on the configuration, the operator either falls back to the CPU or causes the inference to fail.
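The fallback behavior can be pictured as the delegate splitting the graph into NPU segments and CPU segments. The sketch below is a simplified model under stated assumptions: it treats the graph as a linear sequence of operators (real graphs are DAGs, and the real Neuron Delegate applies many more rules), and its only rejection rule is the CAST output-type constraint quoted in the log above. Each switch between segments typically adds data hand-off overhead, which is why one rejected operator in the middle of a graph can noticeably reduce performance.

```python
"""Simplified sketch of delegate partitioning with CPU fallback.

Assumptions: the graph is a linear list of ops, and the only rejection rule
is the CAST output-type constraint from the example runtime log.
"""
from itertools import groupby
from typing import List, NamedTuple, Tuple


class Op(NamedTuple):
    name: str       # operator type, e.g. "CAST"
    out_dtype: str  # output tensor type, e.g. "float32"


# Output types accepted for CAST according to the example log message.
CAST_ALLOWED = {"float32", "int32", "uint8", "int8"}


def npu_supported(op: Op) -> bool:
    """Hypothetical predicate: reject CAST ops whose output type is not allowed."""
    if op.name == "CAST":
        return op.out_dtype in CAST_ALLOWED
    return True


def partition(ops: List[Op]) -> List[Tuple[str, List[str]]]:
    """Group consecutive ops into ("NPU", [...]) or ("CPU", [...]) segments."""
    segments = []
    for on_npu, group in groupby(ops, key=npu_supported):
        segments.append(("NPU" if on_npu else "CPU", [op.name for op in group]))
    return segments
```

For example, `[Op("CONV_2D", "int8"), Op("CAST", "int16"), Op("LOGISTIC", "float32")]` splits into three segments (NPU, CPU, NPU). Changing the model so that the CAST outputs one of the accepted types would keep the whole sequence in a single NPU segment.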

Important

For online inference on Yocto, some models may not run on certain backends due to custom operators generated by the MediaTek converter.

These custom operators (for example, MTK_EXT ops) are not recognized or supported by the standard TensorFlow Lite interpreter, which can lead to incompatibility issues during inference.

In such cases, the corresponding entries in the tables are marked as N/A to indicate unavailable data.

The exact cause of a failure or unsupported configuration varies by model. For details, refer to the model-specific documentation.

Summary Table: Error Handling Strategy

The following table summarizes the recommended actions based on the error type:

| Phase | Tool | Error Type | Recommended Action |
|---|---|---|---|
| Model Conversion | NP Converter | Tool Limitation | Check if the NP Converter in a newer SDK All-In-One Bundle version supports the operator. Otherwise, modify the source model to use alternative operations. |
| Model Compilation | Neuron SDK (ncc-tflite) | Hardware Constraint | Modify the model to use operators supported by the specific MDLA version. Refer to the Supported Operations documentation. |
| Model Inference | TFLite / Neuron Runtime | Runtime/Fallback Conflict | Enable CPU Fallback if performance allows. For critical paths, modify the model or contact MediaTek for potential updates. |
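When many models are processed in a batch (for example, in a CI pipeline), the table above can be applied automatically by matching error text to a phase. The sketch below keys only on the example log messages shown on this page; real messages may differ between SDK versions, so the patterns are assumptions to adjust against your own logs.

```python
"""Sketch: map an error log to the phase and action in the summary table.

The regex patterns match only the example messages on this page; they are
assumptions and may need updating for other SDK versions.
"""
import re
from typing import Optional, Tuple

# (pattern, phase, recommended action), checked in this order.
RULES = [
    (re.compile(r"Converter RuntimeError: Found unsupported operators"),
     "Model Conversion",
     "Check a newer SDK All-In-One Bundle; otherwise replace the operators in the source model."),
    (re.compile(r"ncc-tflite ERROR|MDLA:|EDMA:"),
     "Model Compilation",
     "Modify the model to use operators supported by the target MDLA version."),
    (re.compile(r"TFLite Runtime ERROR: OP \w+ \(v\d+\) is not supported"),
     "Model Inference",
     "Enable CPU fallback, or modify the model / contact MediaTek for critical paths."),
]


def classify(log: str) -> Optional[Tuple[str, str]]:
    """Return (phase, action) for the first matching rule, or None if unknown."""
    for pattern, phase, action in RULES:
        if pattern.search(log):
            return phase, action
    return None
```

Returning `None` for unrecognized text keeps unknown failures visible instead of silently assigning them to a phase.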

Note

A model that converts successfully with the NP Converter is generally deployable on the target NPU, but its final acceleration depends on the specific operator coverage of the platform’s hardware.