Operating System

This documentation describes three major operating systems: Android, Yocto, and Ubuntu. Each operating system has a dedicated section that provides detailed setup and usage instructions to help developers bring up AI workloads quickly and efficiently.

Android

Uses a proprietary TFLite variant that MediaTek optimizes for Genio NPUs.

Its behavior differs from the upstream open-source TFLite implementation and is tightly coupled to each Android release.

Yocto

Uses an open-source TFLite build and enables flexible customization of the software stack for embedded devices.

Integrates an open-source ONNX Runtime, allowing developers to reuse standard ONNX models and tools on Genio Yocto platforms.

Ubuntu

Uses the standard open-source TFLite packages, which are suitable for desktop-like development flows and server-class evaluation.

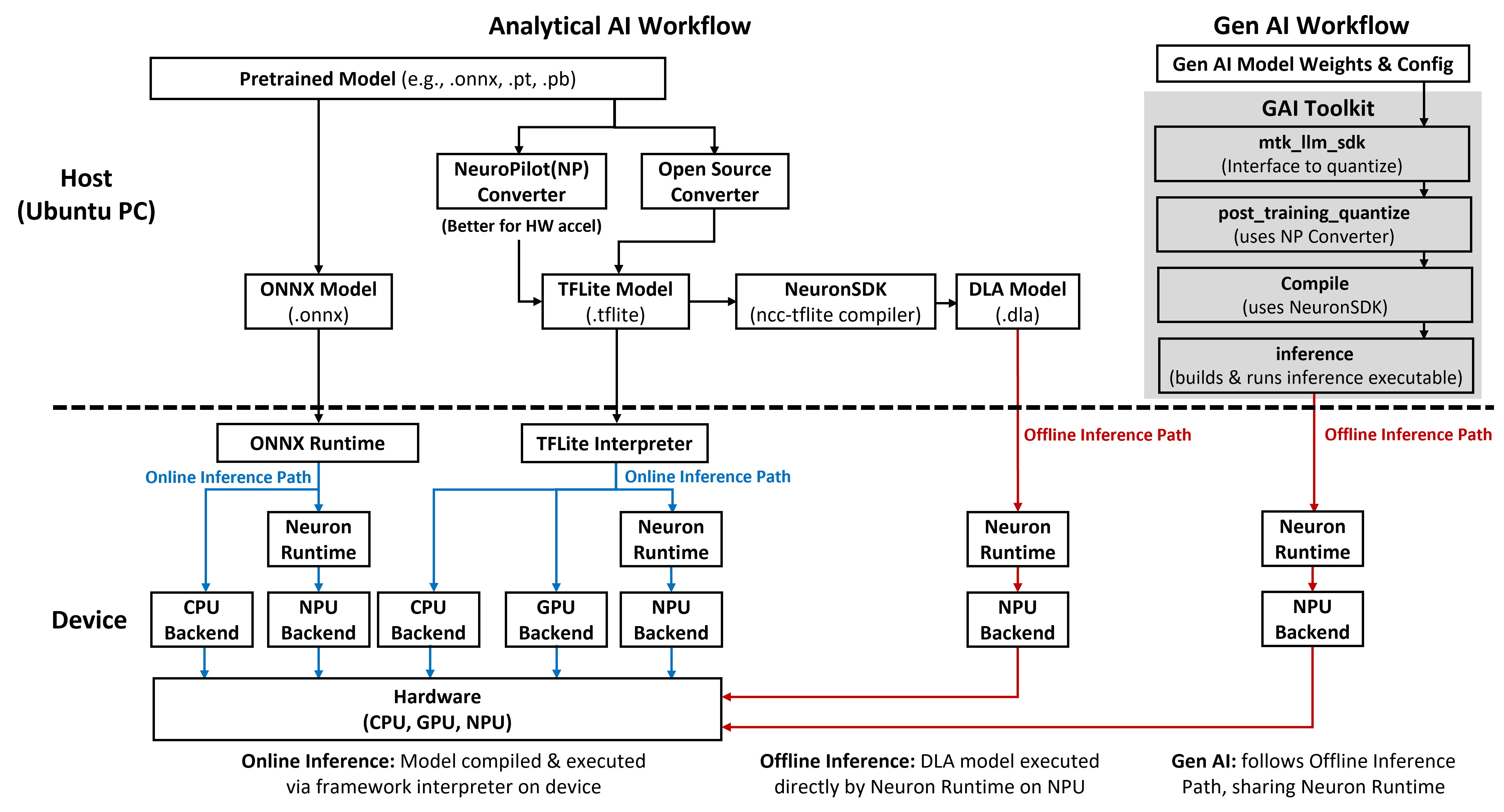

The software architecture and support scope summary in this section provide a quick recap of AI capabilities across Genio platforms. For detailed component descriptions, see Software Architecture. To obtain the required SDKs and host tools, refer to AI Development Resources.

| Platform | OS | TFLite - Analytical AI (Online) | TFLite - Analytical AI (Offline) | TFLite - Generative AI | ONNX Runtime - Analytical AI |
|---|---|---|---|---|---|
| Genio 520/720 | Android | CPU + GPU + NPU | NPU | NPU | CPU + NPU |
| Genio 520/720 | Yocto | CPU + GPU + NPU | NPU | X (ETA: 2026/Q2) | CPU + NPU |
| Genio 510/700 | Android | CPU + GPU + NPU | NPU | X | X |
| Genio 510/700 | Yocto | CPU + GPU + NPU | NPU | X | CPU |
| Genio 510/700 | Ubuntu | CPU + GPU + NPU | NPU | X | X |
| Genio 1200 | Android | CPU + GPU + NPU | NPU | X | X |
| Genio 1200 | Yocto | CPU + GPU + NPU | NPU | X | CPU |
| Genio 1200 | Ubuntu | CPU + GPU + NPU | NPU | X | X |
| Genio 350 | Android | CPU + GPU + NPU | X | X | X |
| Genio 350 | Yocto | CPU + GPU | X | X | CPU |
| Genio 350 | Ubuntu | CPU + GPU | X | X | X |

The NPU operator set is fixed for the lifetime of each SoC. Because these hardware-bound capabilities do not change, support for evolving AI models that introduce new operators depends heavily on CPU fallback for the operators that the NPU cannot accelerate. For details on platform-specific NPU versions and their supported operator sets (NP Version), refer to Understanding the Software-Hardware Binding.
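The relationship between a fixed NPU operator set and CPU fallback can be illustrated with a small sketch. This is a conceptual model only, not the NeuroPilot or TFLite partitioning logic: the function name `plan_execution` and both operator sets below are hypothetical placeholders, and a real runtime partitions the model graph into subgraphs rather than classifying operators one at a time.

```python
def plan_execution(model_ops, npu_ops, cpu_ops):
    """Classify each operator by where it could run.

    npu_ops: operators the NPU can accelerate (fixed per SoC).
    cpu_ops: operators the installed TFLite runtime can run on the CPU.
    Both sets are hypothetical inputs supplied by the caller.
    """
    plan = {"npu": [], "cpu_fallback": [], "unsupported": []}
    for op in model_ops:
        if op in npu_ops:
            plan["npu"].append(op)
        elif op in cpu_ops:
            plan["cpu_fallback"].append(op)
        else:
            plan["unsupported"].append(op)
    return plan


# Hypothetical operator sets, for illustration only.
NPU_OPS = frozenset({"CONV_2D", "DEPTHWISE_CONV_2D", "ADD", "SOFTMAX"})
CPU_OPS = NPU_OPS | {"GELU"}

# A model that mixes accelerated, fallback, and unknown operators.
model_ops = ["CONV_2D", "ADD", "GELU", "CUSTOM_ATTENTION"]
plan = plan_execution(model_ops, NPU_OPS, CPU_OPS)

for target, ops in plan.items():
    print(f"{target}: {ops}")
```

In this model, upgrading the TFLite runtime can only enlarge the CPU set. The NPU set stays the same for a given SoC, which is why the runtime version matters for newer models.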

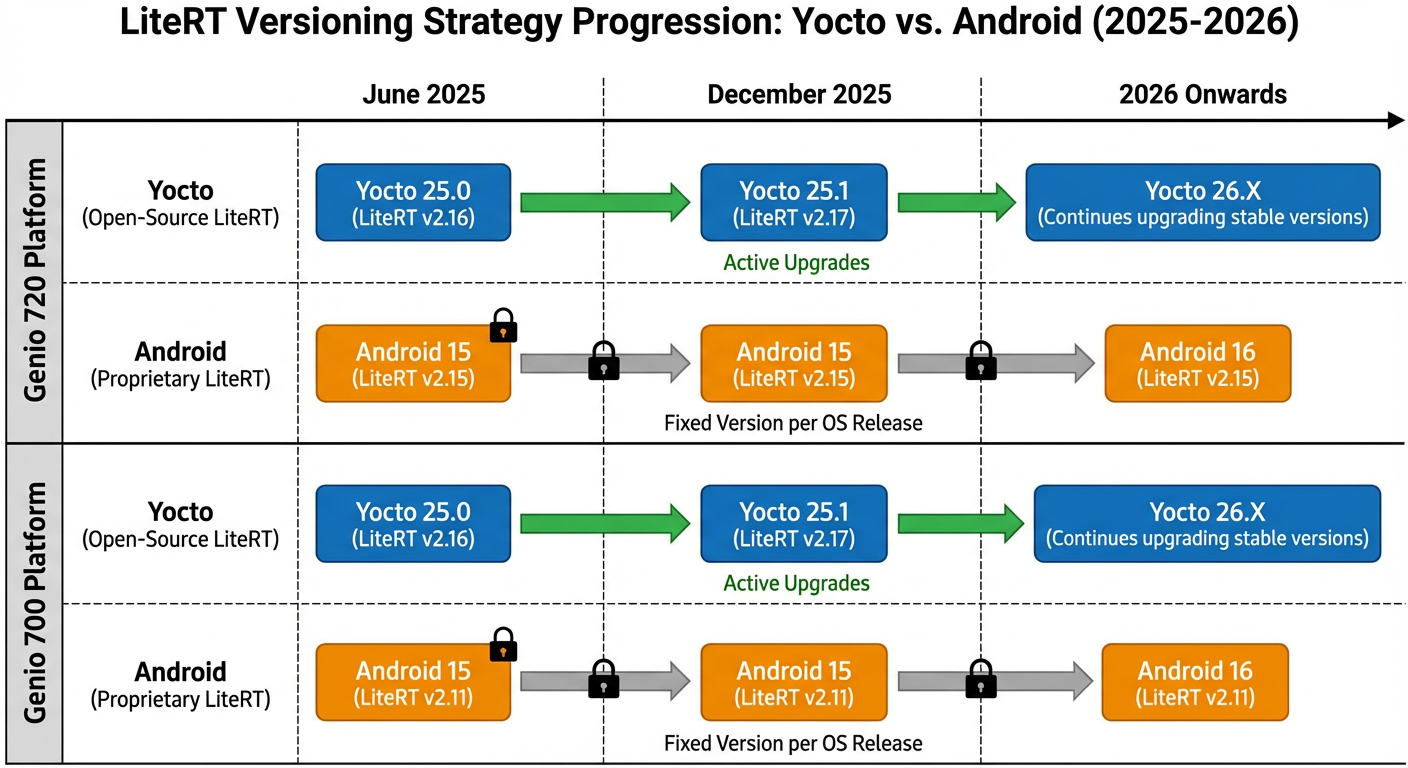

Because the TFLite update policies and CPU fallback support differ between Android and Yocto, developers should review the following TFLite Versioning Strategy to plan long-term model deployment.

TFLite Versioning Strategy

This versioning timeline uses Genio 720 and Genio 700 as representative examples. MediaTek provides different TFLite update cadences for Android and Yocto to balance stability and the adoption of new AI features.

The following table provides a snapshot of the TFLite versions integrated into various Genio software releases. These versions determine the baseline operator support and the effectiveness of the CPU fallback mechanism for a given platform.

| Platform | OS | TFLite Version |
|---|---|---|
| Genio 520 / 720 | Android 16 | 2.15 |
| Genio 520 / 720 | Yocto v25.1 | 2.17 |
| Genio 510 / 700 | Android 16 | 2.11 |
| Genio 510 / 700 | Yocto v25.1 / Ubuntu | 2.17 |
| Genio 1200 | Android | 2.11 |
| Genio 1200 | Yocto v25.1 / Ubuntu | 2.17 |
| Genio 350 | Android 16 | 2.08 |
| Genio 350 | Yocto v25.1 | 2.17 |

On Android, MediaTek ships a proprietary TFLite version that is fixed per release and tightly integrated with the NPU. Its CPU fallback behavior is also fixed, so support for future operators and models does not automatically improve unless a new Android image is provided.

On Yocto, MediaTek continues to upgrade the open-source TFLite version to improve model compatibility and CPU fallback support. As a result, newer Yocto releases may run newer models more efficiently, even on the same SoC and Neuron version.

On Ubuntu, the TFLite upgrade cadence generally follows Yocto for a given SoC, so improvements in model compatibility and operator coverage on Yocto typically also apply to Ubuntu.
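For deployment planning, the version snapshot above can be encoded as data and queried. The following is a minimal sketch under stated assumptions: the helper names `parse_version` and `meets_minimum` are hypothetical, the OS labels are shortened to "Android", "Yocto", and "Ubuntu", and the minimum version a model requires is something you determine separately. A matching version does not by itself guarantee that every operator in a model is supported.

```python
# Snapshot of the TFLite versions listed in the table above.
# OS labels are shortened here (e.g. "Yocto v25.1" -> "Yocto"); this is an
# illustrative simplification, not an official naming scheme.
TFLITE_VERSIONS = {
    ("Genio 520/720", "Android"): "2.15",
    ("Genio 520/720", "Yocto"): "2.17",
    ("Genio 510/700", "Android"): "2.11",
    ("Genio 510/700", "Yocto"): "2.17",
    ("Genio 510/700", "Ubuntu"): "2.17",
    ("Genio 1200", "Android"): "2.11",
    ("Genio 1200", "Yocto"): "2.17",
    ("Genio 1200", "Ubuntu"): "2.17",
    ("Genio 350", "Android"): "2.08",
    ("Genio 350", "Yocto"): "2.17",
}


def parse_version(text):
    """Turn a dotted version string such as '2.08' into a tuple like (2, 8)."""
    return tuple(int(part) for part in text.split("."))


def meets_minimum(platform, os_name, required):
    """Return True/False if the listed version satisfies `required`,
    or None when the (platform, OS) pair is not in the snapshot."""
    shipped = TFLITE_VERSIONS.get((platform, os_name))
    if shipped is None:
        return None
    return parse_version(shipped) >= parse_version(required)


# Example: a model assumed (hypothetically) to need TFLite 2.14 or newer.
for os_name in ("Android", "Yocto", "Ubuntu"):
    print(os_name, meets_minimum("Genio 510/700", os_name, "2.14"))
```

Comparing parsed tuples instead of raw strings avoids ordering mistakes between values such as "2.08" and "2.8".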

Handling Unsupported Operators and Models

When deploying models on Genio platforms, you may encounter failures if the model contains operators not supported by the hardware or the NeuroPilot toolchain. These errors can occur during model conversion, compilation, or device-side inference.

For detailed error illustrations and strategies on how to resolve these issues, please refer to: