Run the Demo

The following sections provide example inference pipelines. You can change the configuration to suit your needs.

Pipeline property

| Property | Value | Description |
|---|---|---|
| CAMERA | /dev/videoX | Camera device node (X may vary on different devices) |
| API | Genio 350-EVK: tflite | Available backend resources |
| | Genio 1200-DEMO: tflite, neuronrt | |
| DELEGATE | Genio 350-EVK: cpu, gpu, nnapi, armnn | Available hardware resources. This property only takes effect when API='tflite' |
| | Genio 1200-DEMO: cpu, gpu, armnn | |
| DELEGATE_OPTION | backends:CpuAcc,GpuAcc,CpuRef | Only takes effect when DELEGATE='armnn'. You can change the priority order of the backends |
| MODEL_LOCATION | e.g. ./ssd_mobilenet_v2_coco_quantized.tflite | Path to the model |
| LABELS | e.g. ./labels_coco.txt | Path to the inference labels |
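The combinations in the table can be checked before launching a pipeline. The following is a minimal sketch only: `check_config` and the board labels `genio-350-evk` and `genio-1200-demo` are hypothetical names introduced for illustration and are not part of the demo package.

```shell
#!/bin/sh
# Hypothetical helper: validate an API/DELEGATE pair against the property table.
# Usage: check_config BOARD API DELEGATE
check_config() {
    board=$1 api=$2 delegate=$3
    case "$board" in
        genio-350-evk)   apis="tflite";          delegates="cpu gpu nnapi armnn" ;;
        genio-1200-demo) apis="tflite neuronrt"; delegates="cpu gpu armnn" ;;
        *) echo "unknown board: $board"; return 1 ;;
    esac
    # The API must be one of the backends listed for this board.
    case " $apis " in
        *" $api "*) ;;
        *) echo "API '$api' is not listed for $board"; return 1 ;;
    esac
    # DELEGATE only takes effect when API=tflite, so it is checked only then.
    if [ "$api" = "tflite" ]; then
        case " $delegates " in
            *" $delegate "*) ;;
            *) echo "DELEGATE '$delegate' is not listed for $board"; return 1 ;;
        esac
    fi
    echo "ok"
}
```

For example, `check_config genio-1200-demo tflite nnapi` reports that `nnapi` is not listed for that board, matching the table.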

Important

On Genio 1200, if you choose neuronrt as the API, there is no need to assign values to DELEGATE and DELEGATE_OPTION, because the backend is determined by ncc-tflite when the tflite model is converted to a DLA model.

For details on ncc-tflite, refer to the Neuron Compiler section.
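The rule in the note above can be expressed as a small helper that emits only the properties that apply to the selected API. This is a sketch under assumptions: `net_props` is a hypothetical function name, while the property names (`model-location`, `api`, `delegate`, `delegate-option`) are the ones used in the example pipelines on this page.

```shell
#!/bin/sh
# Hypothetical helper: print the inference-element properties for the chosen API.
# Reads API, MODEL_LOCATION, DELEGATE and DELEGATE_OPTION from the environment.
net_props() {
    props="model-location=$MODEL_LOCATION api=$API"
    # DELEGATE is only meaningful for the tflite API.
    if [ "$API" = "tflite" ]; then
        props="$props delegate=$DELEGATE"
        # DELEGATE_OPTION only takes effect with the armnn delegate.
        if [ "$DELEGATE" = "armnn" ] && [ -n "$DELEGATE_OPTION" ]; then
            props="$props delegate-option=$DELEGATE_OPTION"
        fi
    fi
    echo "$props"
}
```

In a pipeline, the output could be spliced in unquoted so that each property becomes its own argument, for instance `mobilenetv2 name=net $(net_props) labels="$(cat $LABELS)"`.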

The device node which points to seninf is the camera. In this example, the camera is /dev/video3.

ls -l /sys/class/video4linux/

total 0

...

lrwxrwxrwx 1 root root 0 Sep 20 2020 video3 -> ../../devices/platform/soc/15040000.seninf/video4linux/video3

...
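The lookup shown in the listing above can also be scripted. The sketch below assumes the camera's sysfs symlink target contains the string `seninf`, as in the listing; `find_seninf_camera` is a hypothetical helper name, and its optional directory argument exists only so the function can be exercised outside the target board.

```shell
#!/bin/sh
# Hypothetical helper: print the /dev/videoN node whose sysfs entry points to seninf.
# Usage: find_seninf_camera [SYSFS_DIR]   (defaults to /sys/class/video4linux)
find_seninf_camera() {
    sysdir=${1:-/sys/class/video4linux}
    for entry in "$sysdir"/video*; do
        # Entries in this directory are symlinks into the device tree.
        [ -L "$entry" ] || continue
        case "$(readlink "$entry")" in
            *seninf*) echo "/dev/$(basename "$entry")"; return 0 ;;
        esac
    done
    # No matching node was found.
    return 1
}
```

The result could then be assigned directly, for example `CAMERA=$(find_seninf_camera)`.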

List supported formats

v4l2-ctl -d /dev/video3 --list-formats-ext

ioctl: VIDIOC_ENUM_FMT

Type: Video Capture

[0]: 'YUYV' (YUYV 4:2:2)

Size: Discrete 640x480

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.042s (24.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.067s (15.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.133s (7.500 fps)

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 160x90

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.042s (24.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.067s (15.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.133s (7.500 fps)

Interval: Discrete 0.200s (5.000 fps)

...

[1]: 'MJPG' (Motion-JPEG, compressed)

Size: Discrete 640x480

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.042s (24.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.067s (15.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.133s (7.500 fps)

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 160x90

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.042s (24.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.067s (15.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.133s (7.500 fps)

Interval: Discrete 0.200s (5.000 fps)

...
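Before choosing the caps for a pipeline, it can help to confirm that the camera actually lists the desired pixel format and frame size. The following sketch parses the `v4l2-ctl --list-formats-ext` output shown above; `supports_mode` is a hypothetical helper name, and the parsing assumes the output layout matches the listing on this page.

```shell
#!/bin/sh
# Hypothetical helper: read `v4l2-ctl --list-formats-ext` output on stdin and
# succeed if pixel format $1 (e.g. MJPG) lists frame size $2 (e.g. 640x480).
supports_mode() {
    awk -v want="'$1'" -v size="$2" '
        # A line such as: [1]: <quoted fourcc> (description) starts a new format block.
        $1 ~ /^\[[0-9]+\]:$/ { current = $2 }
        # A line such as: Size: Discrete 640x480 belongs to the current format.
        $1 == "Size:" && current == want && $3 == size { found = 1 }
        END { exit !found }
    '
}
```

For example, `v4l2-ctl -d /dev/video3 --list-formats-ext | supports_mode MJPG 640x480 && echo supported`.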

Important

Remember to set the GPU and CPU frequencies to their maximum values so that the pipeline can achieve the best performance. See GPU Performance Mode and CPU Frequency Scaling for details.
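As a rough illustration of the CPU side only, the sketch below switches every CPU to the `performance` governor through the standard Linux cpufreq sysfs interface. It is an assumption that this interface is the appropriate one on these boards; the CPU Frequency Scaling section remains the authoritative procedure, and GPU settings are not covered here. `set_cpu_performance` is a hypothetical helper name, and its optional directory argument exists only for testing.

```shell
#!/bin/sh
# Hypothetical helper: select the "performance" cpufreq governor on all CPUs.
# Usage: set_cpu_performance [CPU_SYSFS_DIR]   (defaults to /sys/devices/system/cpu)
set_cpu_performance() {
    cpu_root=${1:-/sys/devices/system/cpu}
    for gov in "$cpu_root"/cpu[0-9]*/cpufreq/scaling_governor; do
        # Skip CPUs without a writable governor file (requires root on a real system).
        [ -w "$gov" ] || continue
        echo performance > "$gov"
    done
}
```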

Image Classification

You will need a V4L2-compatible camera (e.g. the Logitech C922 PRO Stream used in this case).

Example pipeline(1) - Inference with ArmNN delegate with CPU acceleration, API=tflite

CAMERA='/dev/video3'

API='tflite'

DELEGATE='armnn'

DELEGATE_OPTION='backends:CpuAcc,GpuAcc'

MODEL_LOCATION='mobilenet_v1_1.0_224_quant.tflite'

LABELS='labels.txt'

gst-launch-1.0 \

v4l2src device=$CAMERA ! "image/jpeg, width=1280, height=720,format=MJPG" ! jpegdec ! videoconvert ! tee name=t \

t. ! videoscale ! queue ! net.sink_model \

t. ! queue ! net.sink_bypass \

mobilenetv2 name=net delegate=$DELEGATE delegate-option=$DELEGATE_OPTION model-location=$MODEL_LOCATION api=$API labels="$(cat $LABELS)" \

net.src_bypass ! inferenceoverlay style=0 font-scale=1 thickness=2 ! waylandsink sync=false

Example pipeline(2) - Inference with NNAPI delegate, API=tflite

This is available only on Genio 350-EVK.

There is no need to assign a value to DELEGATE_OPTION here.

CAMERA='/dev/video3'

API='tflite'

DELEGATE='nnapi'

MODEL_LOCATION='mobilenet_v1_1.0_224_quant.tflite'

LABELS='labels.txt'

gst-launch-1.0 \

v4l2src device=$CAMERA ! "image/jpeg, width=1280, height=720,format=MJPG" ! jpegdec ! videoconvert ! tee name=t \

t. ! videoscale ! queue ! net.sink_model \

t. ! queue ! net.sink_bypass \

mobilenetv2 name=net delegate=$DELEGATE model-location=$MODEL_LOCATION api=$API labels="$(cat $LABELS)" \

net.src_bypass ! inferenceoverlay style=0 font-scale=1 thickness=2 ! waylandsink sync=false

Example pipeline(3) - Inference with API=neuronrt

This is NOT available on Genio 350-EVK. A DLA model can be used only when API=neuronrt.

CAMERA='/dev/video3'

API='neuronrt'

MODEL_LOCATION='mobilenet_v1_1.0_224_quant.dla'

LABELS='labels.txt'

gst-launch-1.0 \

v4l2src device=$CAMERA ! "image/jpeg, width=1280, height=720,format=MJPG" ! jpegdec ! videoconvert ! tee name=t \

t. ! videoscale ! queue ! net.sink_model \

t. ! queue ! net.sink_bypass \

mobilenetv2 name=net model-location=$MODEL_LOCATION api=$API labels="$(cat $LABELS)" \

net.src_bypass ! inferenceoverlay style=0 font-scale=1 thickness=2 ! waylandsink sync=false

Note

The model mobilenet_v1_1.0_224_quant.dla, which is provided in /usr/share/gstinference_example/image_classification, was converted with arch=mdla2.0.

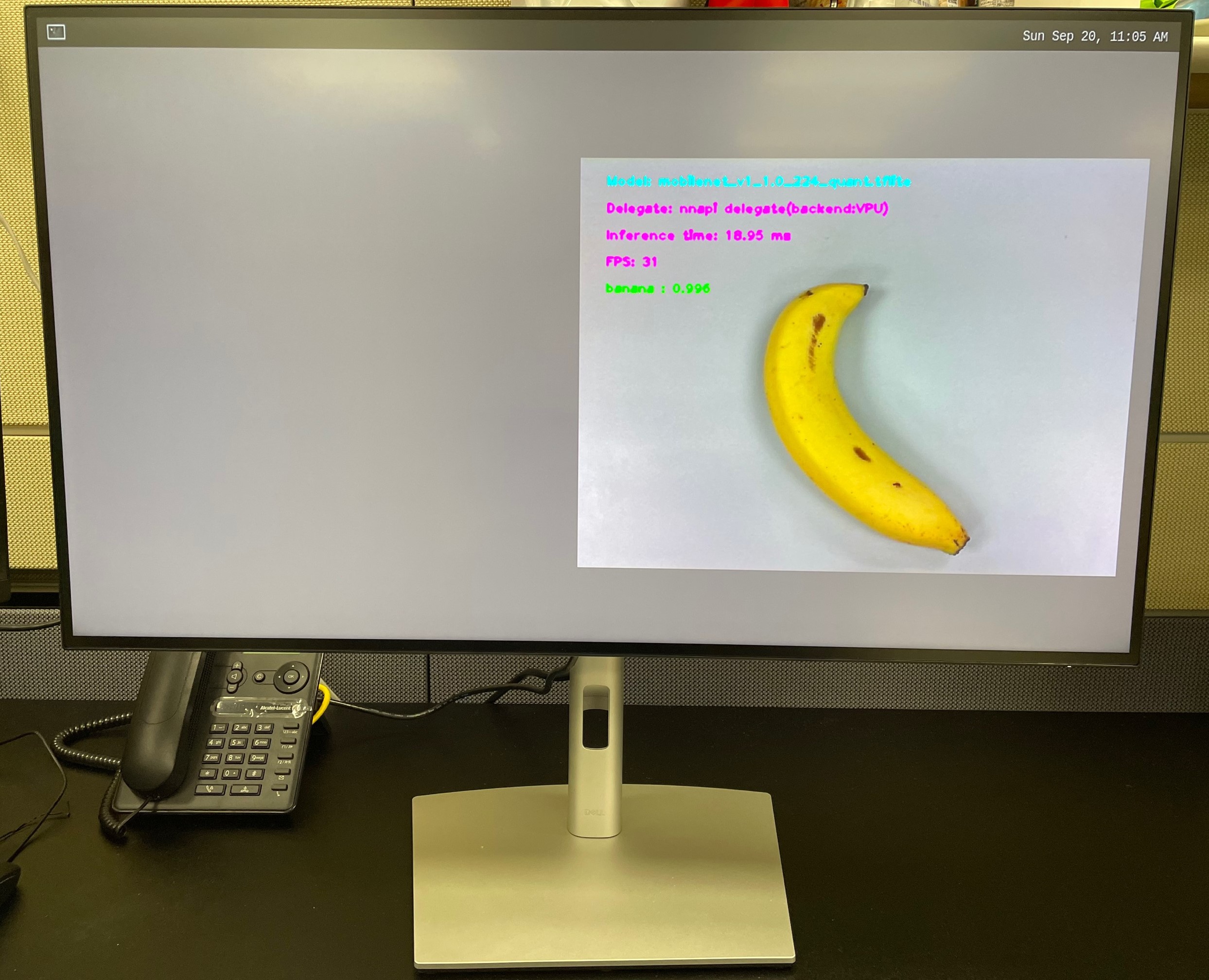

- Reference Output

This is from Example pipeline(2) on Genio 350-EVK

Object Detection

You will need a V4L2-compatible camera (e.g. the Logitech C922 PRO Stream used in this case).

Example pipeline(1) - Inference with ArmNN delegate with CPU acceleration, API=tflite

CAMERA='/dev/video3'

API='tflite'

DELEGATE='armnn'

DELEGATE_OPTION='backends:CpuAcc,GpuAcc'

MODEL_LOCATION='mobilenet_ssd_pascal_quant.tflite'

LABELS='labels_pascal.txt'

gst-launch-1.0 -v \

v4l2src device=$CAMERA ! "image/jpeg, width=1280, height=720,format=MJPG" ! jpegdec ! videoconvert ! tee name=t \

t. ! videoscale ! queue ! net.sink_model \

t. ! queue ! net.sink_bypass \

mobilenetv2ssd name=net delegate=$DELEGATE delegate-option=$DELEGATE_OPTION model-location=$MODEL_LOCATION api=$API labels="$(cat $LABELS)" \

net.src_bypass ! inferenceoverlay style=0 font-scale=1 thickness=2 ! waylandsink sync=false

Example pipeline(2) - Inference with NNAPI delegate, API=tflite

This is available only on Genio 350-EVK.

There is no need to assign a value to DELEGATE_OPTION here.

CAMERA='/dev/video3'

API='tflite'

DELEGATE='nnapi'

MODEL_LOCATION='mobilenet_ssd_pascal_quant.tflite'

LABELS='labels_pascal.txt'

gst-launch-1.0 -v \

v4l2src device=$CAMERA ! "image/jpeg, width=1280, height=720,format=MJPG" ! jpegdec ! videoconvert ! tee name=t \

t. ! videoscale ! queue ! net.sink_model \

t. ! queue ! net.sink_bypass \

mobilenetv2ssd name=net delegate=$DELEGATE model-location=$MODEL_LOCATION api=$API labels="$(cat $LABELS)" \

net.src_bypass ! inferenceoverlay style=0 font-scale=1 thickness=2 ! waylandsink sync=false

Example pipeline(3) - Inference with API=neuronrt

This is NOT available on Genio 350-EVK. A DLA model can be used only when API=neuronrt.

CAMERA='/dev/video3'

API='neuronrt'

MODEL_LOCATION='mobilenet_ssd_pascal_quant.dla'

LABELS='labels_pascal.txt'

gst-launch-1.0 -v \

v4l2src device=$CAMERA ! "image/jpeg, width=1280, height=720,format=MJPG" ! jpegdec ! videoconvert ! tee name=t \

t. ! videoscale ! queue ! net.sink_model \

t. ! queue ! net.sink_bypass \

mobilenetv2ssd name=net model-location=$MODEL_LOCATION api=$API labels="$(cat $LABELS)" \

net.src_bypass ! inferenceoverlay style=0 font-scale=1 thickness=2 ! waylandsink sync=false

Note

The model mobilenet_ssd_pascal_quant.dla, which is provided in /usr/share/gstinference_example/object_detection, was converted with arch=mdla2.0.

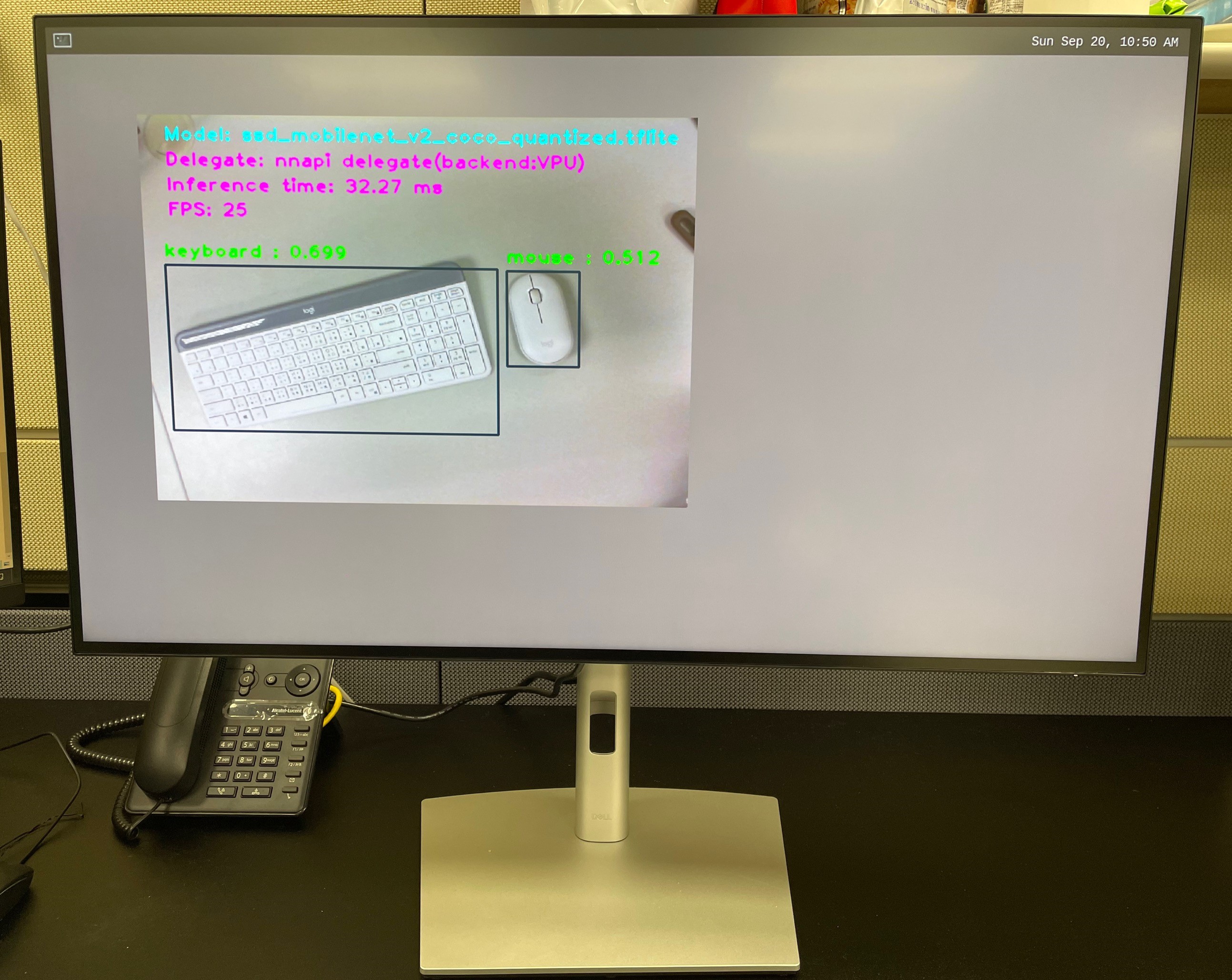

- Reference Output

This is from Example pipeline(1) on Genio 350-EVK